Tom Sherborne

@tomsherborne

code MTS @cohere ex: @edinburghnlp @allen_ai @cambridgenlp @ucl @apple.

ID: 971827388

https://tomsherborne.github.io

26-11-2012 11:56:04

336 Tweets

938 Followers

279 Following

mr. pretraining Acyr Locatelli is looking for an intern to start in Jan, DM him if u can code

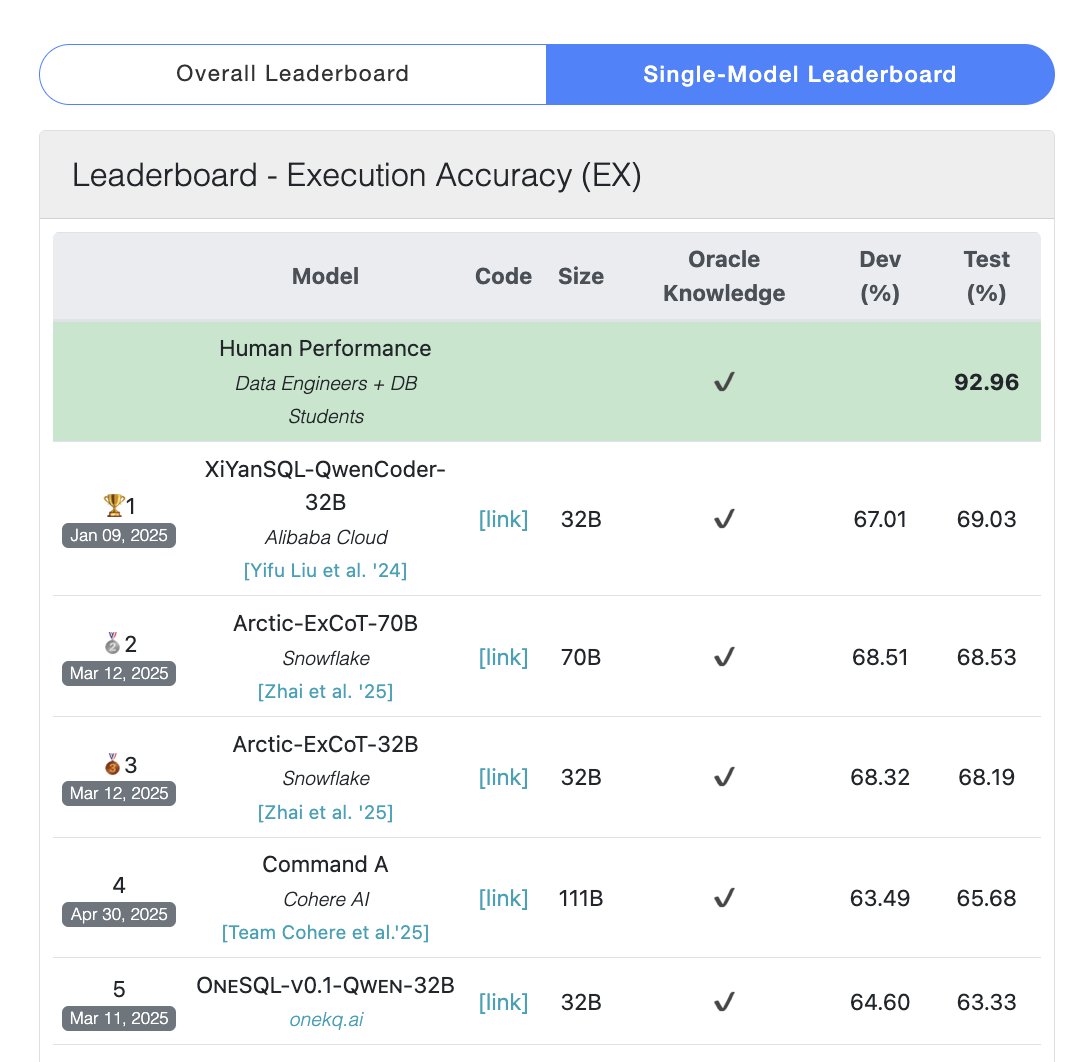

I'm excited to share the tech report for our @Cohere and Cohere For AI Command A and Command R7B models. We highlight our novel approach to model training, including the use of self-refinement algorithms and model merging techniques at scale. Command A is an efficient, agent-optimised