Tolga Bolukbasi

@tolgab0

AI research/Gemini pretraining @GoogleDeepmind, PhD, opinions my own.

ID: 2886508144

http://www.tolgabolukbasi.com 21-11-2014 03:37:31

92 Tweets

296 Followers

239 Following

I am really excited to reveal what Google DeepMind's Open Endedness Team has been up to 🚀. We introduce Genie 🧞, a foundation world model trained exclusively from Internet videos that can generate an endless variety of action-controllable 2D worlds given image prompts.

Great new work from our team and colleagues at Google DeepMind! On the Massive Text Embedding Benchmark (MTEB), Gecko is the strongest model to fit under 768-dim. Try it on Google Cloud. Use it for RAG, retrieval, vector databases, etc.

Machine unlearning ("removing" training data from a trained ML model) is a hard, important problem. Datamodel Matching (DMM): a new unlearning paradigm with strong empirical performance! w/ Kristian Georgiev, Roy Rinberg, Sam Park, Shivam Garg, Aleksander Madry, Seth Neel (1/4)

andi (twocents.money): I worked on the M series while at Apple. The main advantage that stuck out to me was actually that they were able to acquire dozens of top Intel engineers 5-10 years ago as Intel started struggling and making poor decisions. For example, Intel had a couple sites around the

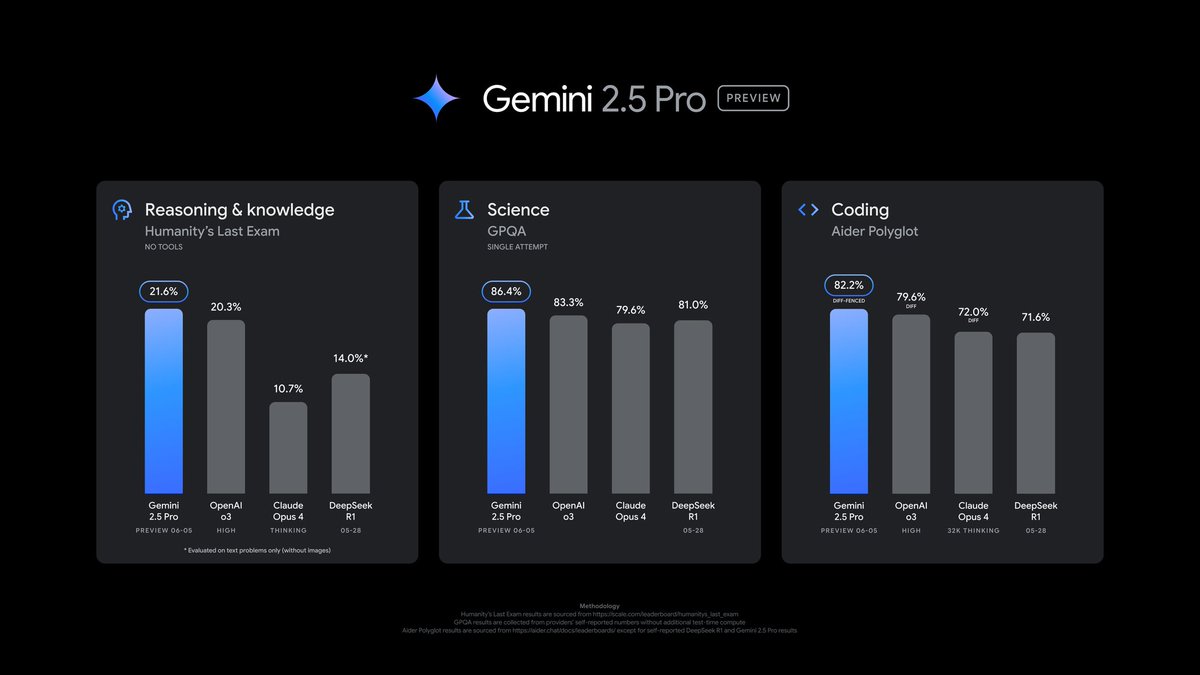

This model’s “thinking” capabilities are driving major gains: 🧑‍🔬 Top performance on math and science benchmarks (AIME, GPQA); 💻 Exceptional coding performance (LiveCodeBench); 📈 Impressive performance on complex prompts (Humanity’s Last Exam); #1 on lmarena.ai (formerly lmsys.org) leaderboard 🏆

Our latest Gemini 2.5 Pro update is now in preview. It’s better at coding, reasoning, science + math, shows improved performance across key benchmarks (AIDER Polyglot, GPQA, HLE to name a few), and leads lmarena.ai with a 24pt Elo score jump since the previous version. We also