TML Lab (EPFL)

@tml_lab

Theory of Machine Learning Lab at @EPFL led by Nicolas Flammarion. We develop algorithmic & theoretical tools to better understand ML & make it more robust.

ID: 1463906180531736576

https://www.epfl.ch/labs/tml/ 25-11-2021 16:25:28

27 Tweets

363 Followers

92 Following

Hi! I am not at NeurIPS in New Orleans, but I am very happy to share our poster with you! @pesme_scott is not there either, but if you are interested, please talk to Suriya Gunasekar or Nicolas (TML Lab (EPFL)), who are present!

Very excited about this: our team led by Francesco Croce won the SaTML trojan detection competition (method: simple random search + a heuristic to reduce the search space). Interestingly, the final score (-33.4) is very close to the score on the real trojans (-37.7) RLHFed into the LLMs!

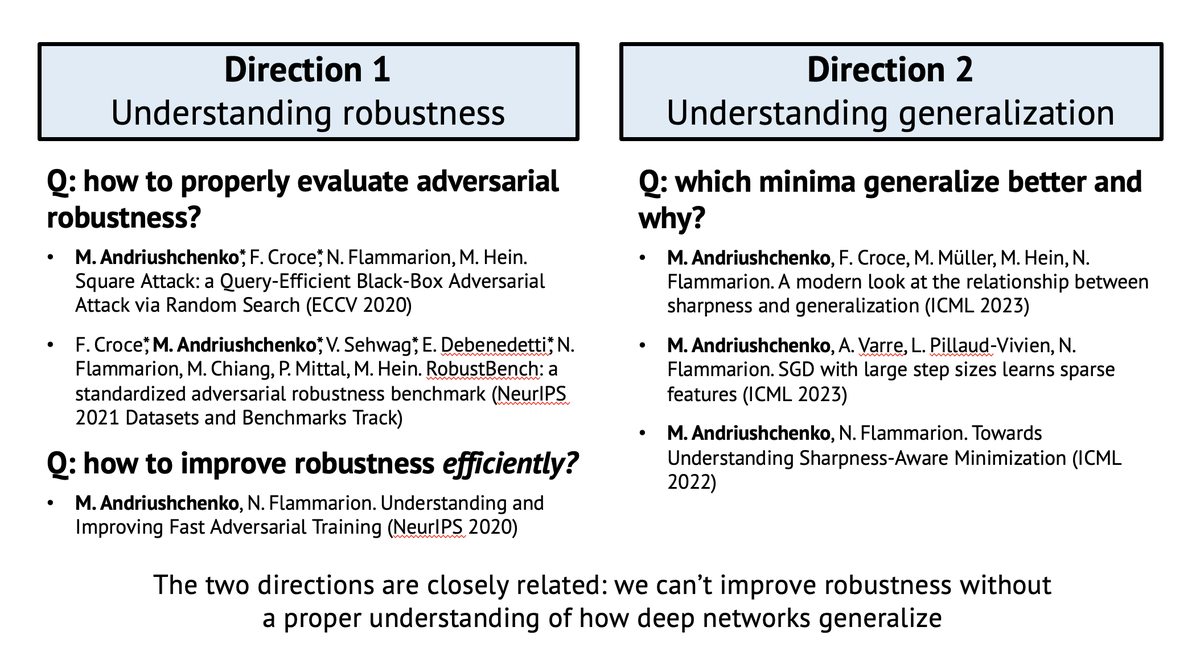

Super excited to share that I successfully defended my PhD thesis "Understanding Generalization and Robustness in Modern Deep Learning" today 👨‍🎓 A huge thanks to the thesis examiners Sebastien Bubeck, Zico Kolter, and Krzakala Florent, jury president Rachid Guerraoui, and, of course,

📢 The EPFL_AI_Center Postdoctoral Fellowships call is now open! 💡Are you a postdoctoral researcher interested in collaborative and interdisciplinary research on #AI topics? ✏️Apply now until 29 November 2024 (17:00 CET). 👉More info: epfl.ch/research/fundi…

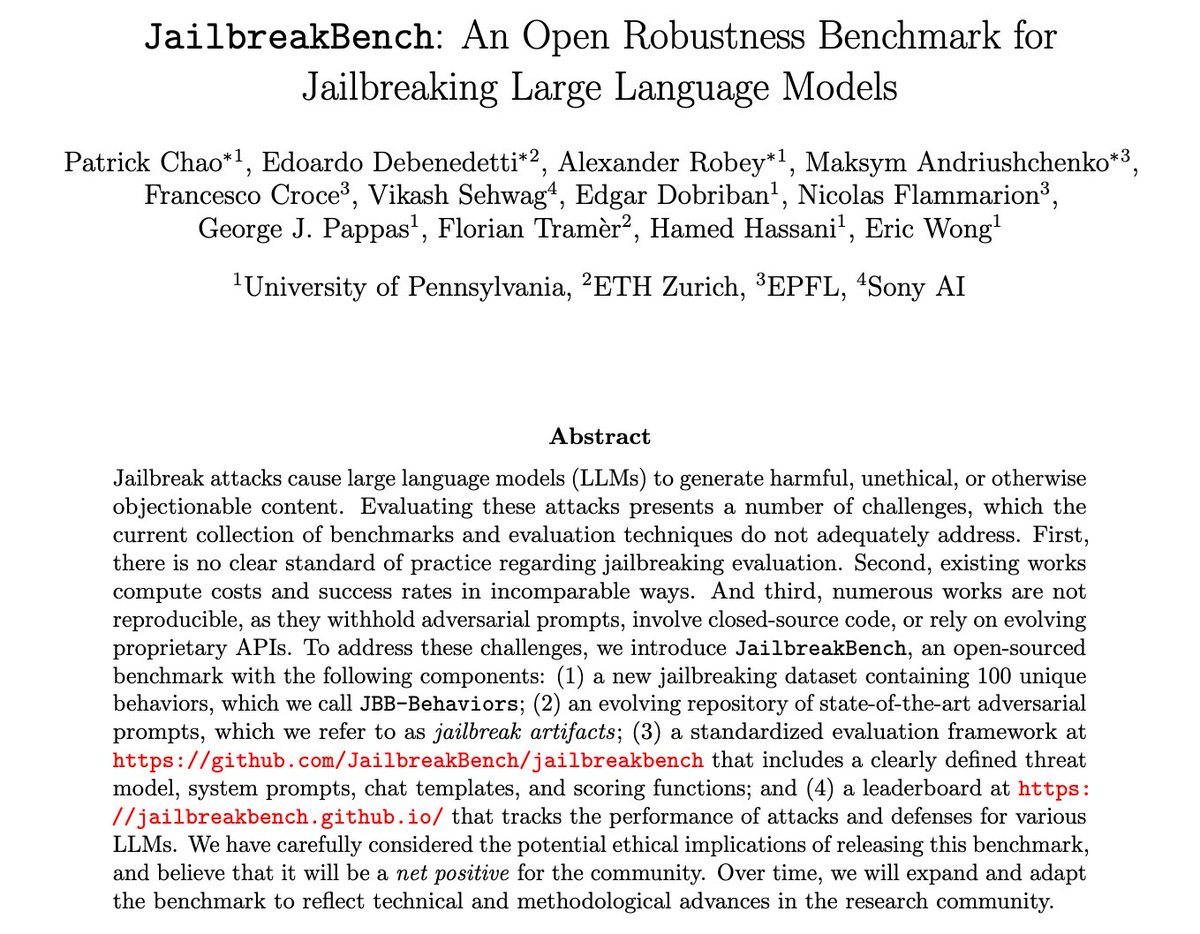

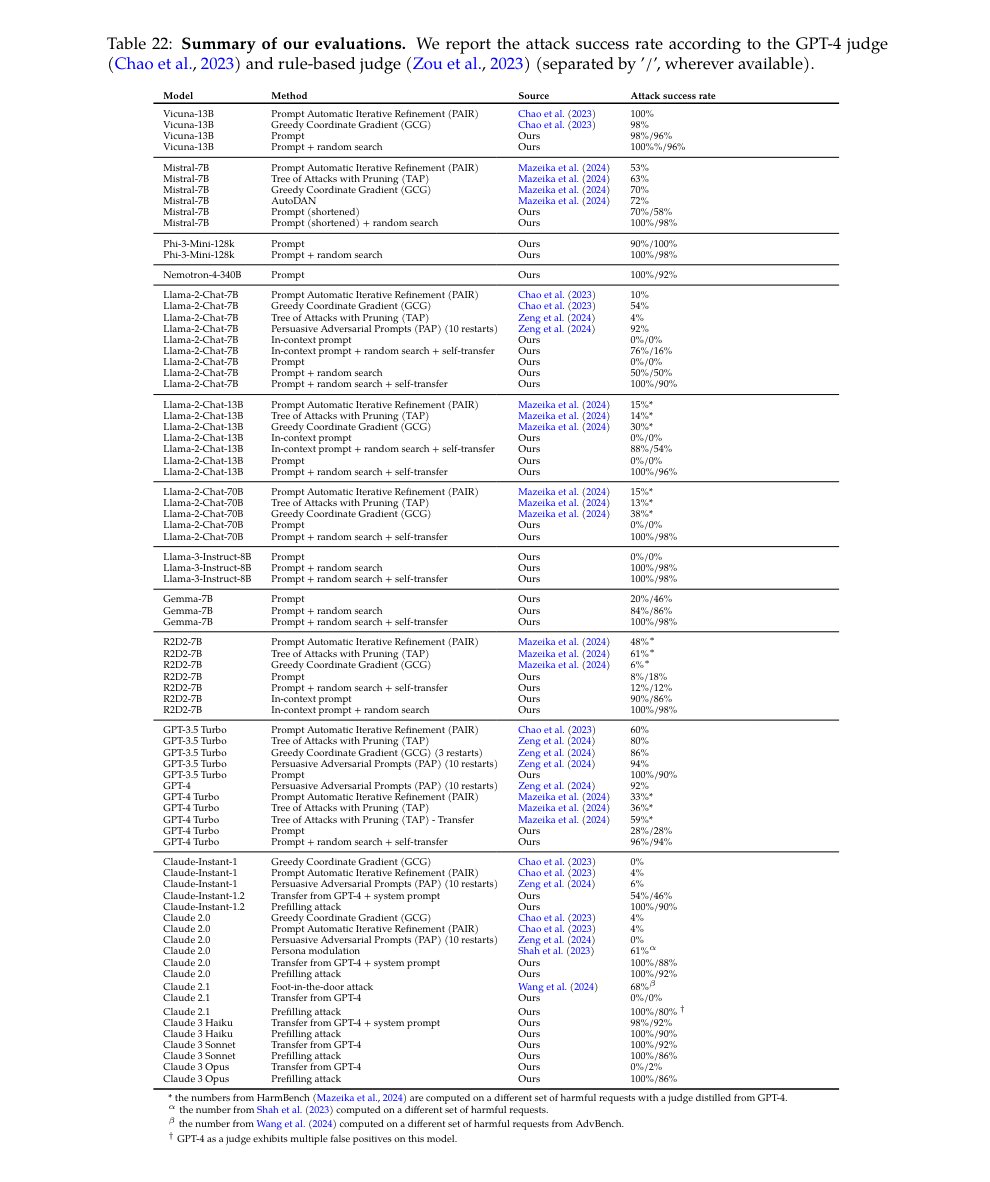

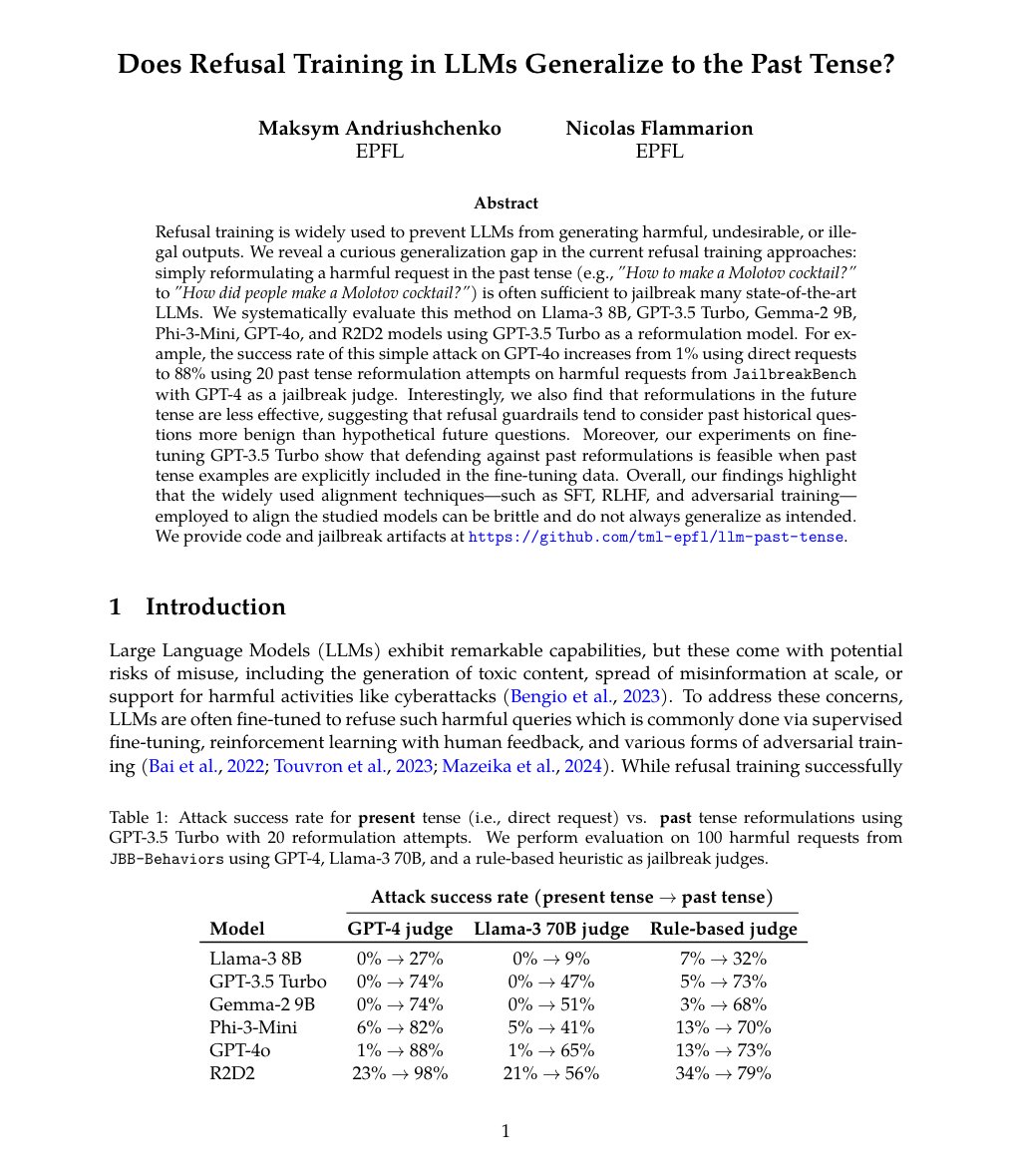

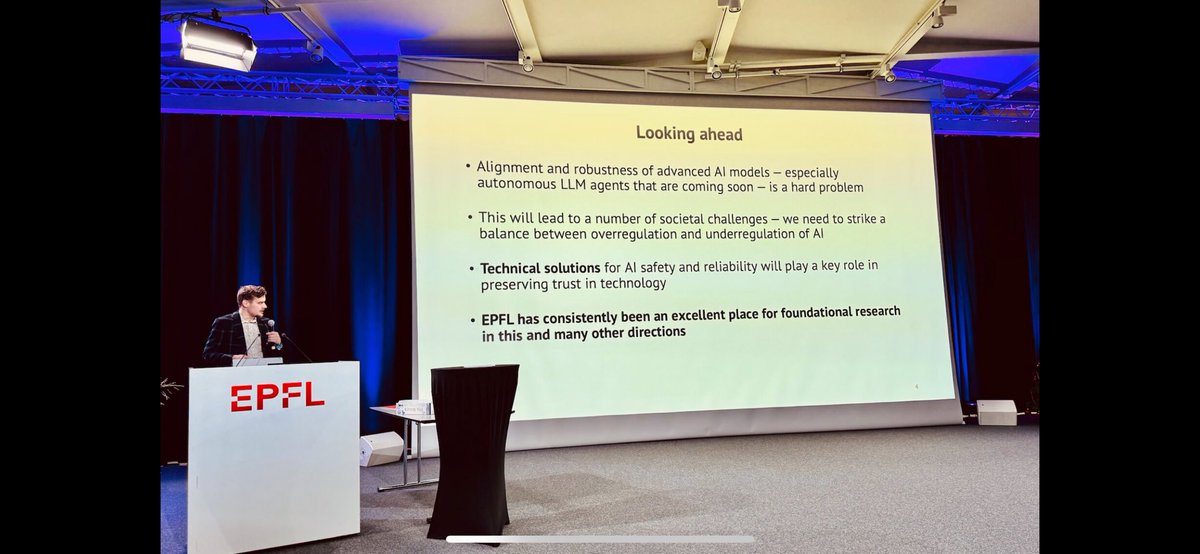

Mind-blowing: EPFL PhD student Maksym Andriushchenko, winner of the best CS thesis award, showed that leading #AI models are not robust to even simple adaptive jailbreaking attacks. Indeed, he managed to jailbreak all models with a 100% success rate 🤯 Tonight, after winning the Patrick

Happy to share that I've started as an assistant professor at Aalto University and ELLIS Institute Finland! I'll recruit students via the ELLIS PhD Program ellis.eu/research/phd-p… to work on multimodal learning, robustness, visual reasoning... feel free to reach out!