Timothy Hospedales

@tmh31

Professor @ University of Edinburgh.

Head of Samsung AI Research Centre, Cambridge.

ID: 62544367

http://homepages.inf.ed.ac.uk/thospeda/ 03-08-2009 15:49:00

144 Tweets

823 Followers

112 Following

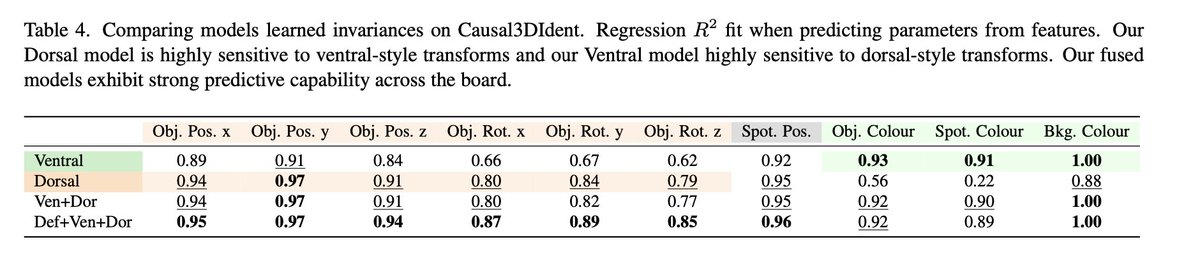

"Why Do Self-Supervised Models Transfer? Investigating the Impact of Invariance on Downstream Tasks" by Linus Ericsson, Henry Gouk, Timothy Hospedales. tl;dr: color augmentation helps self-supervised MoCo v2 pose estimation. arxiv.org/abs/2111.11398 1/

Presenting our paper on a new transfer learning setting called “latent domain learning” at #ICLR2022’s poster session 5 tomorrow (10:30am BST). openreview.net/pdf?id=kG0AtPi… Joint work with Timothy Hospedales and Hakan Bilen; hope to see you there!

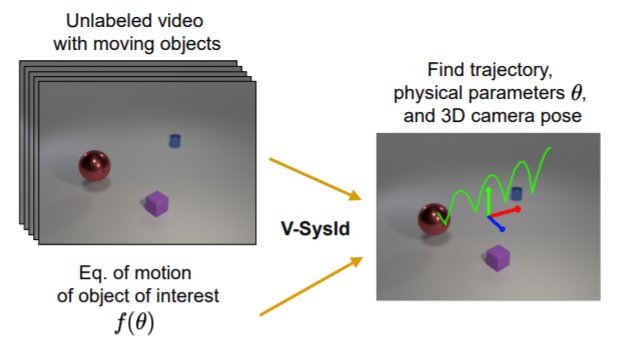

I'll be presenting some work on vision-based keypoint discovery and system identification at #l4dc2022 this Friday. proceedings.mlr.press/v168/jaques22a… Work led by Miguel Jaques, with Martin Asenov and Timothy Hospedales.

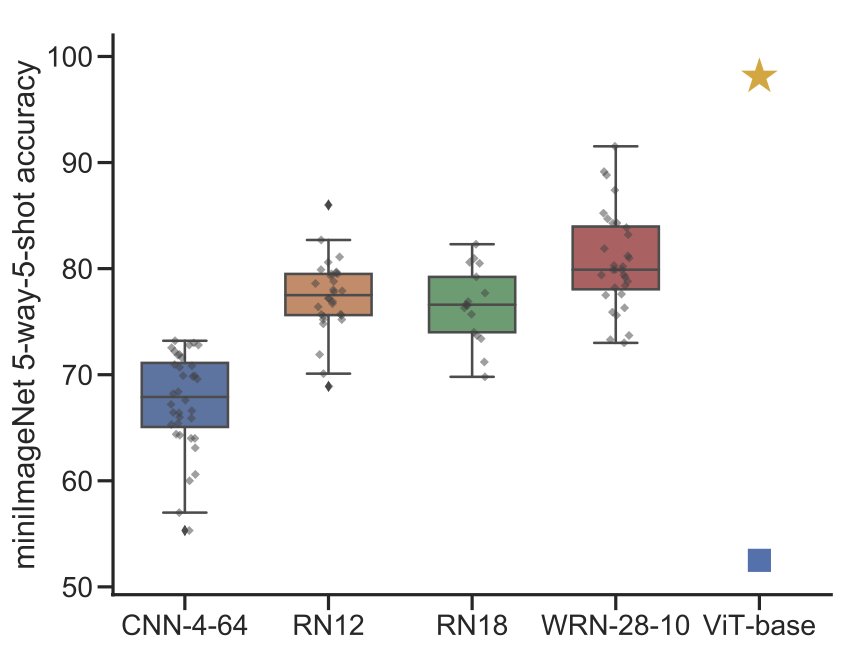

What happens when few-shot meta-learning meets foundation models? Check out our paper with Shell Xu Hu at CVPR'22 in New Orleans today. hushell.github.io/pmf/

Check out our latest survey on Self-Supervised Multimodal Learning!👇 Great to have had guidance from Oisin Mac Aodha and Timothy Hospedales. Paper: arxiv.org/abs/2304.01008

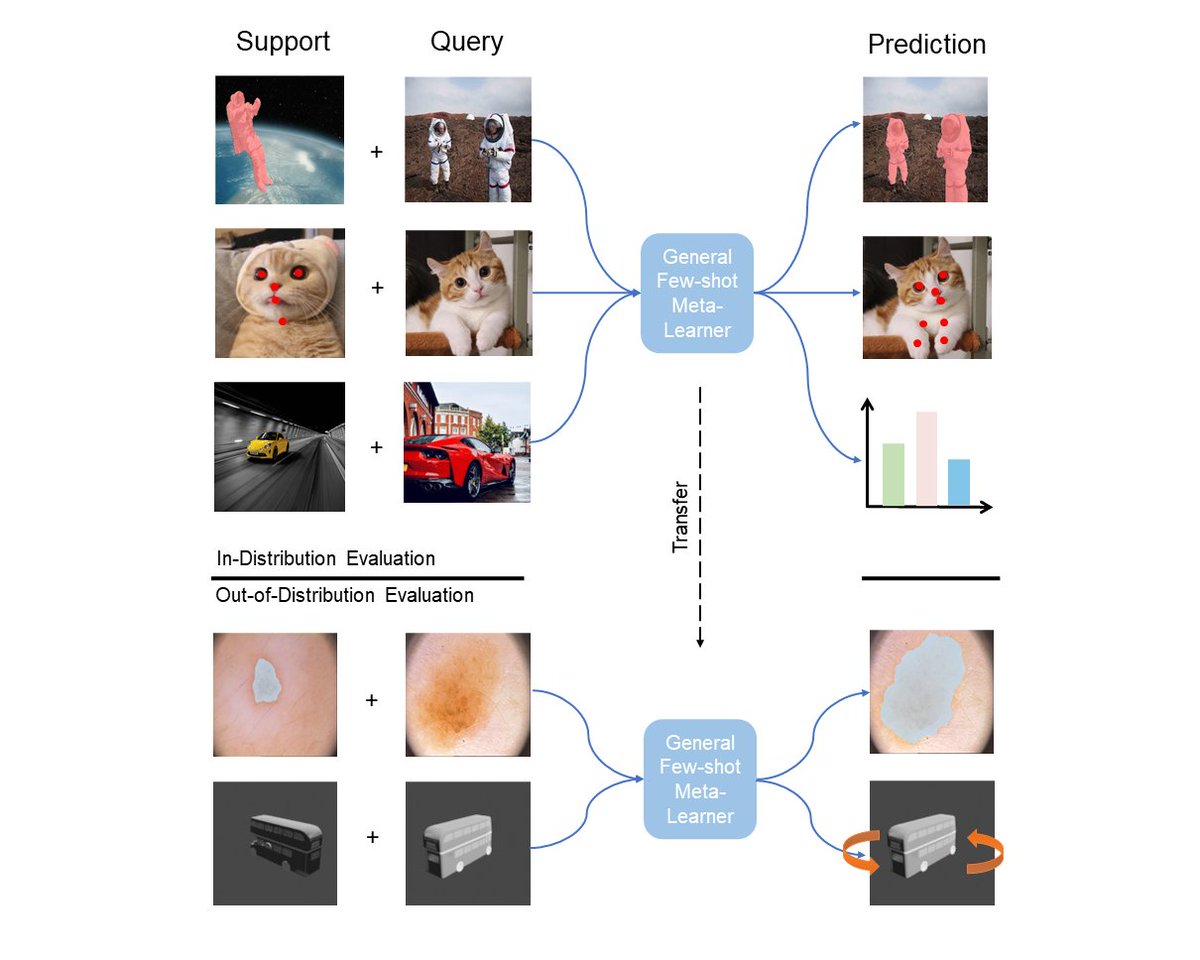

Meta Omnium is a multi-task few-shot learning benchmark to evaluate generalization across CV tasks. The work, by Ondrej Bohdal, Yinbing Tian, Yongshuo Zong, Ruchika Chavhan, Da Li, Henry Gouk, and Timothy Hospedales, will be presented on the afternoon of 20/6. Project page: edi-meta-learning.github.io/meta-omnium/

Interested in practical uncertainty quantification? Our new Bayesian NN library from Samsung AI Cambridge scales to large ViTs! One line of code wraps any architecture without modifying your model definition! arxiv.org/abs/2309.12928 github.com/SamsungLabs/Ba… Minyoung Kim

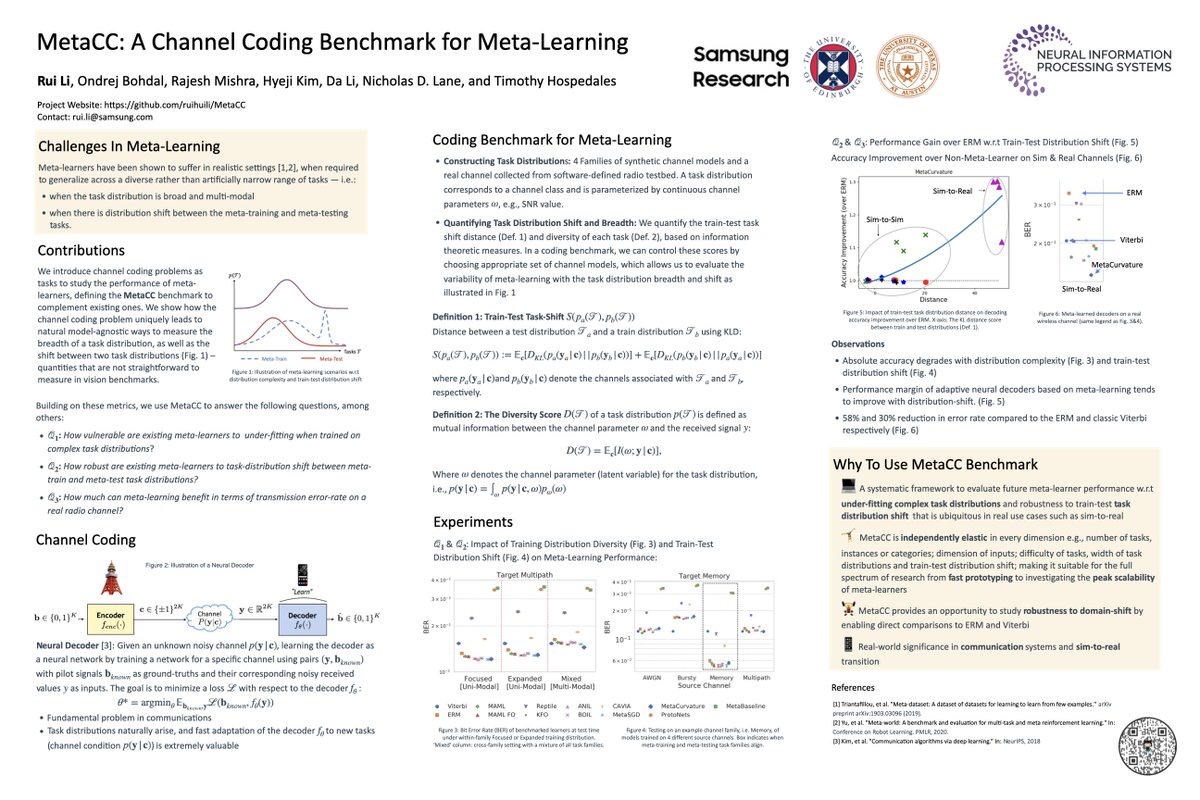

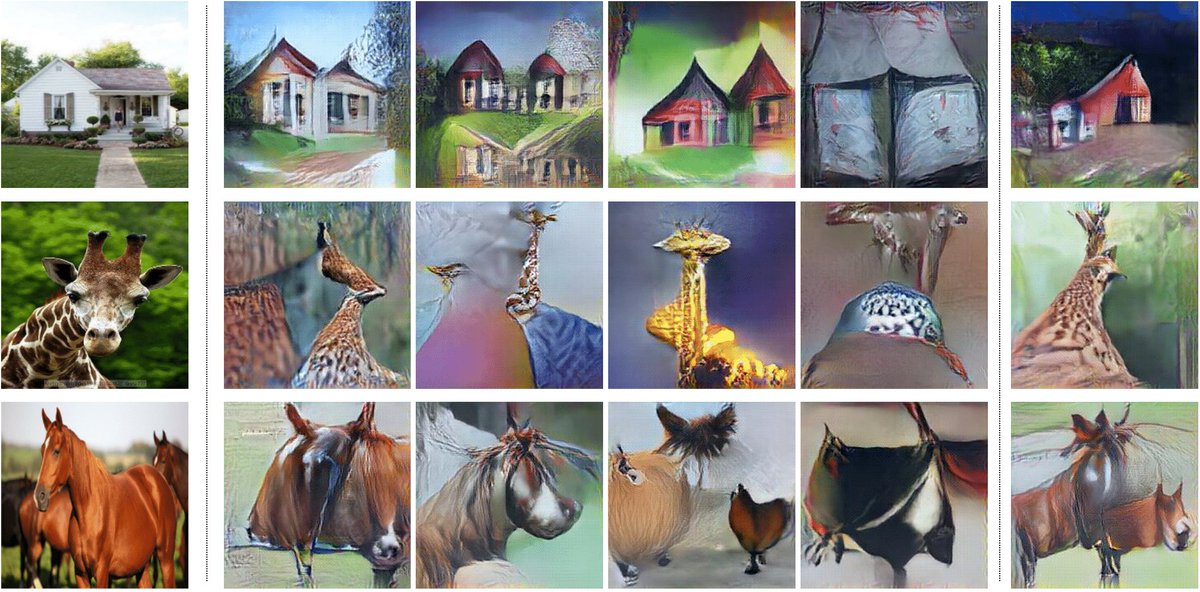

Excited to have been part of DemoFusion, bringing UHD generation to SDXL on your desktop with no training! With Ruoyi Du, Yi-Zhe Song, and Dongliang Chang. Project: ruoyidu.github.io/demofusion/dem…, paper: arxiv.org/abs/2311.16973 #GenerativeAI