Tianyuan Zhang

@tianyuanzhang99

PhD student at @MIT, working on vision and ML. M.S. from CMU, B.S. from PKU

ID: 905014077126213632

http://tianyuanzhang.com 05-09-2017 10:25:45

128 Tweets

963 Followers

784 Following

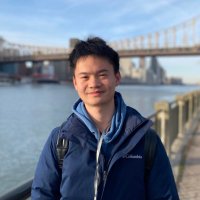

Super excited to share that I’ve officially defended my PhD, wrapped up an incredible journey at Massachusetts Institute of Technology (MIT) and Adobe Research, and joined Reve! Thrilled to be working alongside the same amazing founders I teamed up with back in the Adobe days. That experience gave me deep

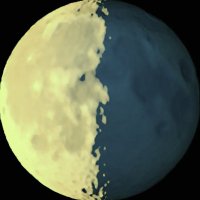

🔥Spatial intelligence requires world generation, and now we have the first comprehensive evaluation benchmark📏 for it! Introducing WorldScore: Unifying evaluation for 3D, 4D, and video models on world generation! 🧵1/7 Web: haoyi-duan.github.io/WorldScore/ arxiv: arxiv.org/abs/2504.00983