TianyLin

@tianylin

DL practitioner #Ihavecats

ID: 1266668735554711558

http://nil9.net 30-05-2020 09:52:24

321 Tweets

320 Followers

441 Following

It’s worth checking out, and Jeremy Bernstein even shares the slides.

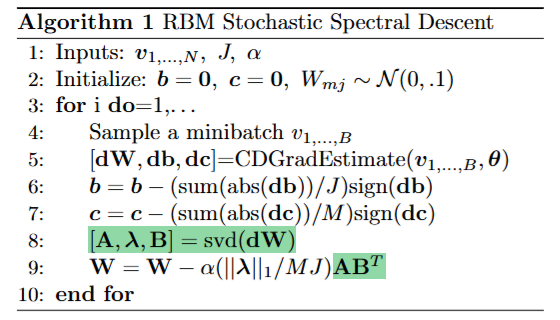

If you cite Muon, I think you should definitely also cite SSD (proceedings.mlr.press/v38/carlson15.…) by Volkan Cevher et al., which proposed spectral descent. (Sorry, I can't find the handles of the other authors.)

The Hugging Face website is down. Please fix it, Hugging Face.

Join our ML Theory group next week as they welcome Tony S.F. on July 3rd for a presentation on "Training neural networks at any scale". Thanks to Andrej Jovanović, Anier Velasco Sotomayor, and Thang Chu for organizing this session 👏 Learn more: cohere.com/events/Cohere-…

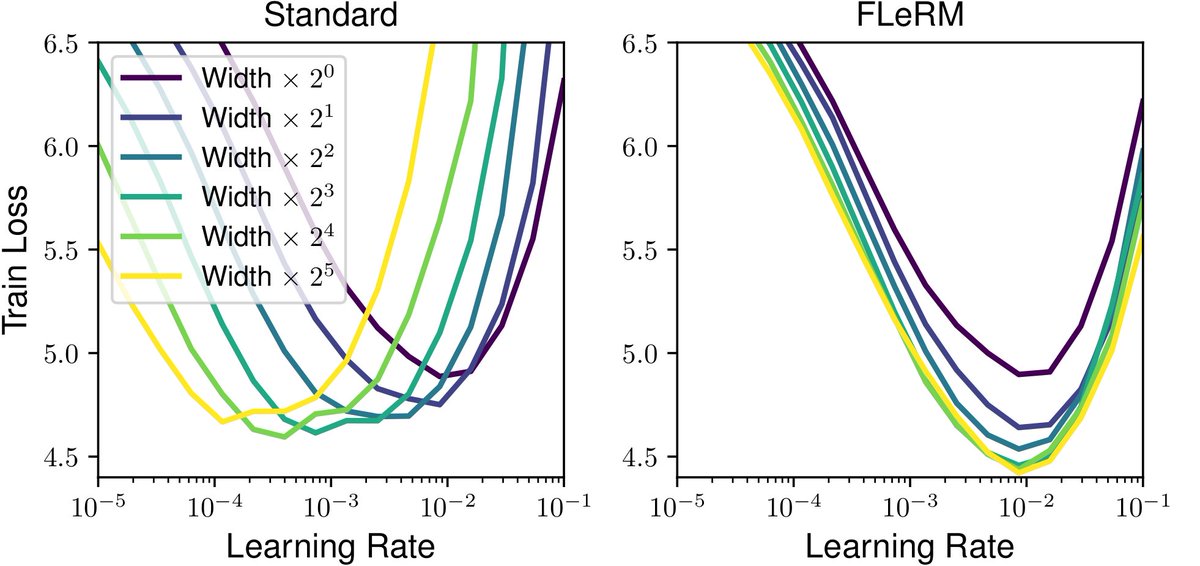

Looks like extremely exciting and useful work by Kwangjun Ahn, Byron Xu, Natalie Abreu, John Langford, and Gagik Magakyan: github.com/microsoft/dion/ (2/2)