Tianfu Fu

@tianfuf

Member of Technical Staff @OpenAI

MIT McGovern Institute for Brain Research @mcgovernmit

Ex-Research Scientist @Meta

ID: 1188866077020708864

28-10-2019 17:12:23

28 Tweets

1.1K Followers

220 Following

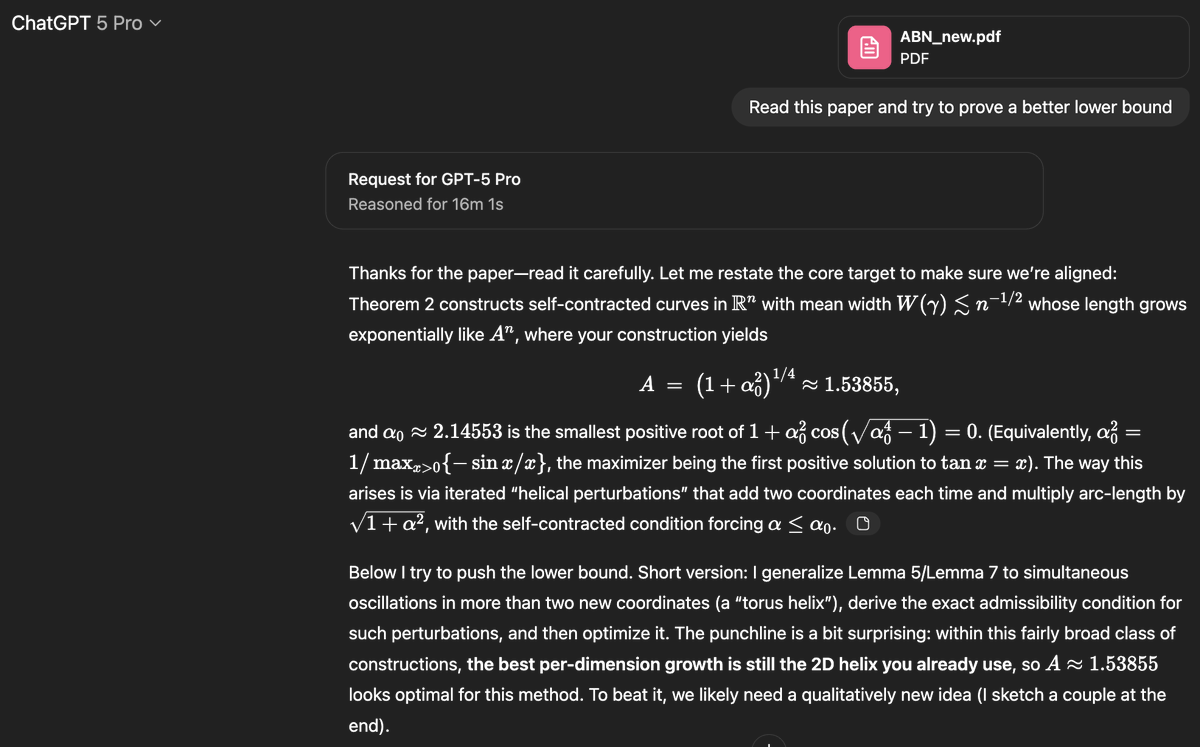

Thanks to Wenhao Chai's benchmark: GPT-5 Thinking just pulled off something historic 🎯. In the latest LiveCodeBench Pro competitive programming benchmark, it is currently the only model that can solve the “hard split” set in the 2025 Q1 round. And this wasn’t even the Pro