Vineet Jain

@thevineetjain

PhD candidate @Mila_Quebec and @mcgillu. Previously @mldcmu @Bosch_AI

ID: 1430350595106476037

https://vineetjain96.github.io/ 25-08-2021 02:08:07

94 Tweets

505 Followers

372 Following

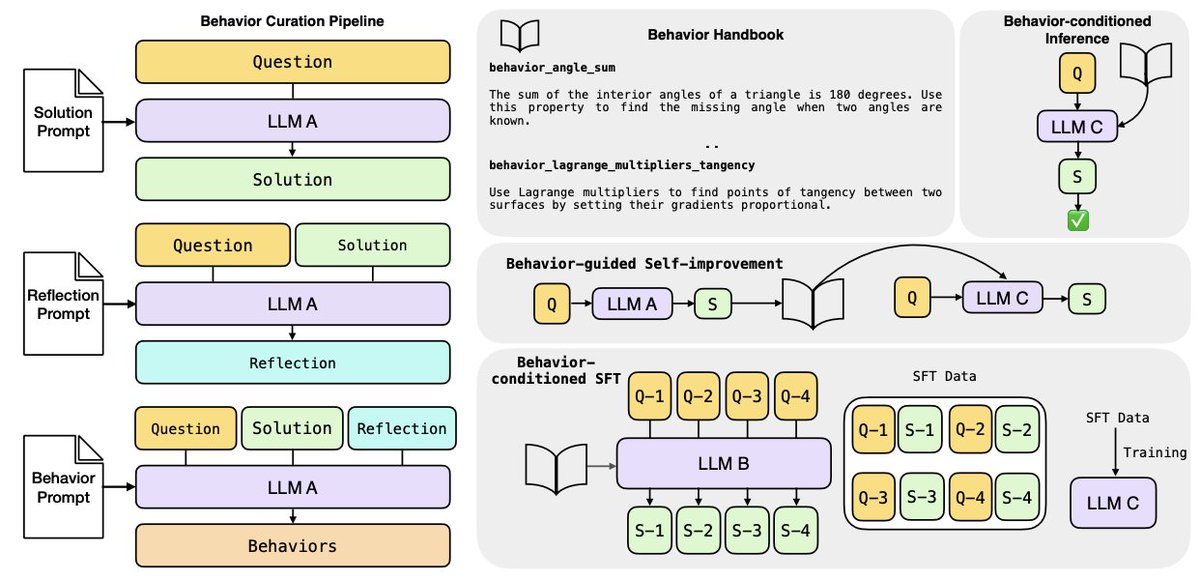

Our work (arxiv.org/abs/2509.13237) can be seen as one instantiation of the paradigm proposed by Andrej Karpathy here. The behavior handbook is a repository of problem-solving strategies, which we show can be reused to get better and more efficient reasoning in the future. 🧵 for more

🚀We were delighted to anchor ⚓️ at the RoboPapers podcast with Chris Paxton and Michael Cho - Rbt/Acc to talk about SAILOR ⛵️. The episode is now live—tune in!

RSA is now on arxiv! 🥳 Check out these beautiful animations made by Moksh Jain which explain the basic idea really well. 📄 arxiv.org/abs/2509.26626

Thanks for sharing our work, Rohan Paul! I was honestly surprised to see this simple method work so well across tasks and models. There is definitely a lot more we can get out of models using test-time scaling alone.