Pierre Richemond 🇪🇺

@theonekloud

Pretraining @cohere. @ImperialCollege PhD, Paris VI - @Polytechnique, ENST, @HECParis alum. Prev @GoogleDeepMind scientist, @GoldmanSachs trader. Views mine.

ID: 173117297

31-07-2010 13:44:22

13.13K Tweets

1.1K Followers

873 Following

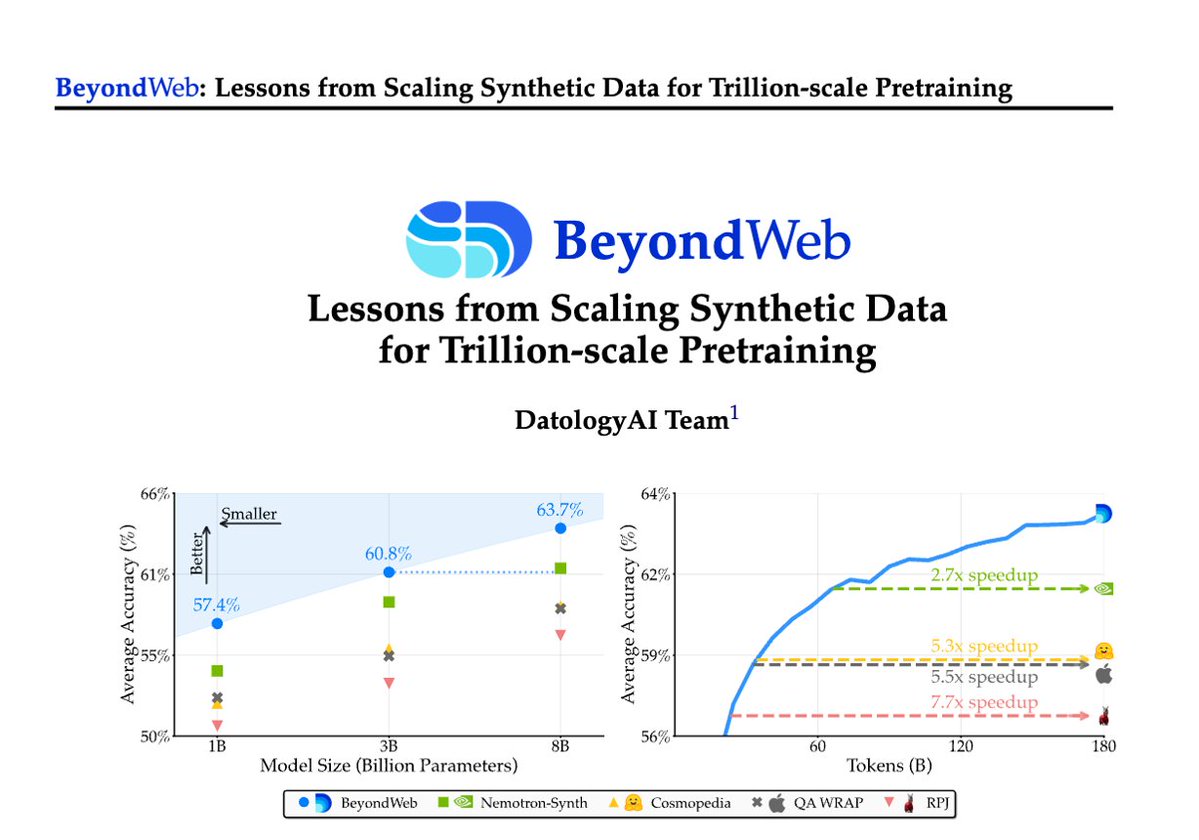

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens 🧑🏼‍🍳
- 3B LLMs beat 8B models 🚀
- Pareto frontier for performance

New survey on diffusion language models: arxiv.org/abs/2508.10875 (via Nicolas Perez-Nieves). Covers pre/post-training, inference and multimodality, with very nice illustrations. I can't help but feel a bit wistful about the apparent extinction of the continuous approach after 2023🥲

The freeze went into effect last week, and it’s not clear how long it will last, The Journal’s sources say. Meta is still likely working through its reorg, which split its AI unit, Meta Superintelligence Labs, into four new groups: TBD Labs, run by form... techcrunch.com/2025/08/21/rep…