Tianqing Fang @ ACL24

@tfang229

Incoming Research Scientist @TencentGlobal AI Lab. PhD @hkust @HKUSTKnowComp #NLProc. Ex-visiting PhD student @epfl_en. Ex-visiting scholar at LUKA lab @CSatUSC.

ID: 1641361658269540352

http://fangtq.com 30-03-2023 08:48:23

34 Tweets

226 Followers

272 Following

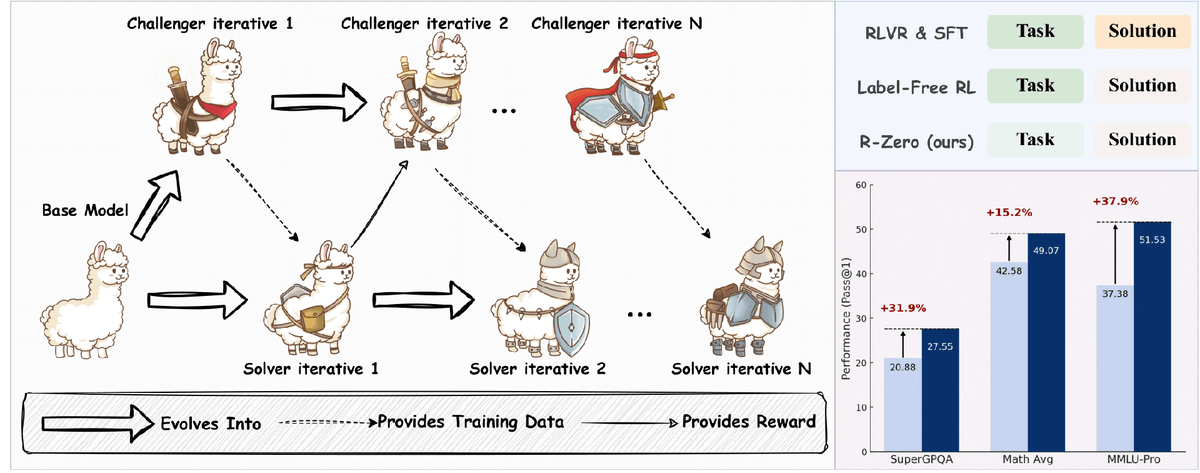

WebEvolver has been accepted to EMNLP 2025: a self-evolving web agent capable of look-ahead simulation.