talia konkle

@talia_konkle

Visual cognitive computational neuroscientist. Professor, Harvard University.

ID: 25995304

http://konklab.fas.harvard.edu

Joined: 23-03-2009 12:57:55

1.1K Tweets

2.2K Followers

588 Following

🎉 We present DetailGen3D: Generative 3D Geometry Enhancement via Data-Dependent Flow, introducing a flow-based 3D generative model for geometry refinement. Project Page: detailgen3d.github.io/DetailGen3D GitHub Code: github.com/VAST-AI-Resear… Huggingface Demo: huggingface.co/spaces/VAST-AI…

**ecstatic** to share our ICLR 2025 paper: sparse components distinguish visual pathways & their alignment to neural networks, with Nancy Kanwisher and Meenakshi Khosla (openreview.net/forum?id=IqHeD…) 1/n

Check out our new paper! Vision models often struggle with learning both transformation-invariant and -equivariant representations at the same time. Hafez Ghaemi @ ICML 2025 shows that self-supervised prediction with proper inductive biases achieves both simultaneously.

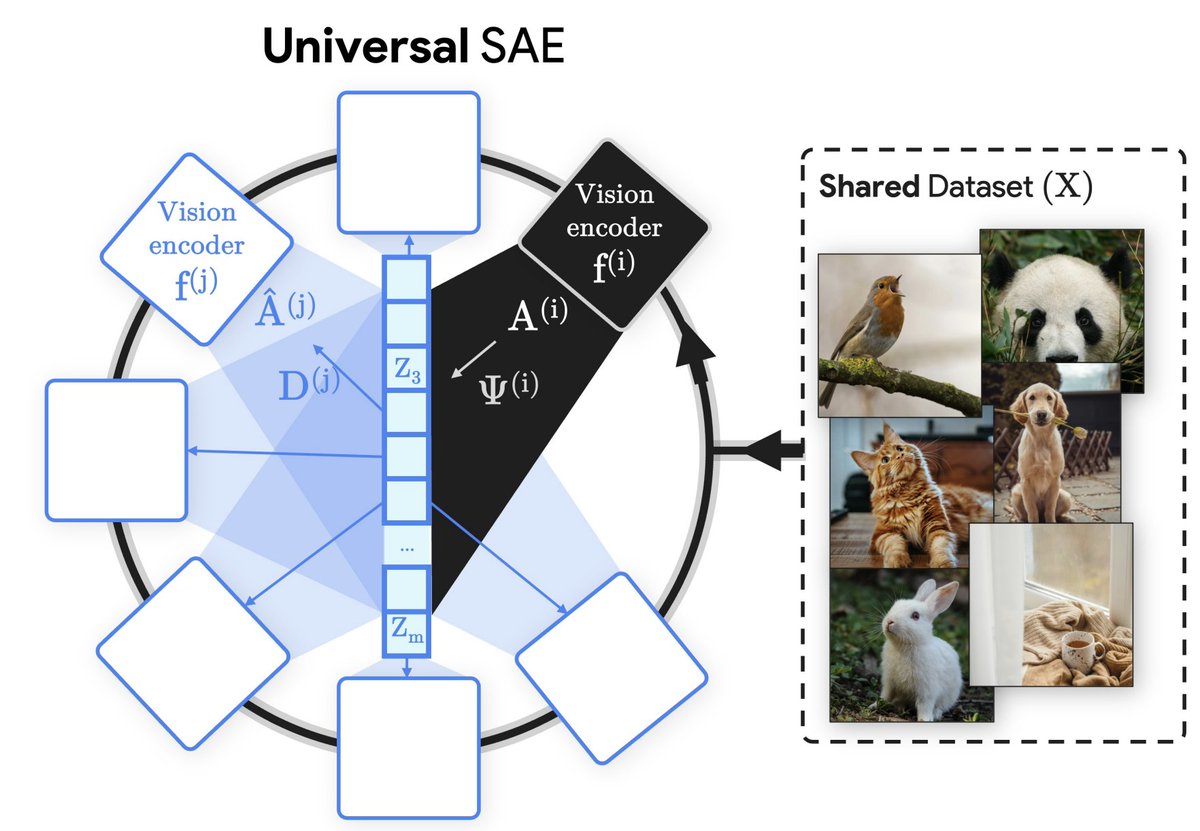

I’m stoked to share our new paper: “Harnessing the Universal Geometry of Embeddings” with jack morris, Collin Zhang, and Vitaly Shmatikov. We present the first method to translate text embeddings across different spaces without any paired data or encoders. Here's why we're excited: 🧵👇🏾

Announcement: Workshop at #CCN2025 🧠 Modeling the Physical Brain: Spatial Organization & Biophysical Constraints 🗓️ Monday, Aug 11 | 🕦 11:30–18:00 CET |📍 Room A2.07 🔗 Register: tinyurl.com/CCN-physical-b… #NeuroAI CogCompNeuro

Around CVPR for the next 2 days: if you're into interpretability, SAEs, complexity, or just wanna know how cool the Kempner Institute at Harvard University is, hit me up 👋

This work was a wonderful collaboration with Thomas Fel, talia konkle, and George Alvarez. 🔗 Check out the paper and project for more: Project Page: fenildoshi.com/configural-sha… Paper: arxiv.org/abs/2507.00493 15/15

Why do video models handle motion so poorly? It might be a lack of motion equivariance. Very excited to introduce: Flow Equivariant RNNs (FERNNs), the first sequence models to respect symmetries over time. Paper: arxiv.org/abs/2507.14793 Blog: kempnerinstitute.harvard.edu/research/deepe… 1/🧵