🚀 New Blog Post: 'Building an Apache Spark Performance Lab: Tools and Techniques for Spark Optimization.' Get tips, tools, and short demos to boost your Spark performance. Ideal for developers! 🛠️ #ApacheSpark #Performance

Read more: db-blog.web.cern.ch/node/195

🔍 Dandi is hiring Senior Data Engineer (Full Time - Hybrid)

🔗 Apply Now: skillexchange.xyz/job/senior-dat…

#Hiring #Jobs #JobSearch #Engineering #ApacheSpark #Scala #K8S #MLlib #TensorFlow #VertexAI

New🔥 Ep#11: Sarah Guo // Conviction & Elad Gil talk to Matei Zaharia, co-founder of Databricks, creator of Apache Spark, and Stanford University CS professor:

- Dolly, betting on small models

- scaling asymptotes

- LLMs in the enterprise

- academic -> founder/CTO of $1B+ revenue co

🎙no-priors.com

Amazon revealed the data arch of their package delivery platform. Since working from home, I witness a steady stream of packages on my porch and I'm starting to wonder how many GBs of data my spouse has contributed to this dataset... 💸

#apachehudi #apachespark

🧵link below👇

Found a low-cap DeSci project backed by PolygonDAO and partnered with TensorFlow, Apache Spark, Cerebras & many more

Sounds interesting?

Scaling AI/ML Infrastructure at Uber.

#apachekafka

#apacheflink

#apachespark

uber.com/en-IE/blog/sca…

Retrieving all rows from a large dataset into memory can cause out-of-memory errors. #ApacheSpark DataFrames are lazily evaluated: computation is deferred until an action such as collect() is called, so you can reduce the rows first by filtering or aggregating.

This results in more efficient memory usage.

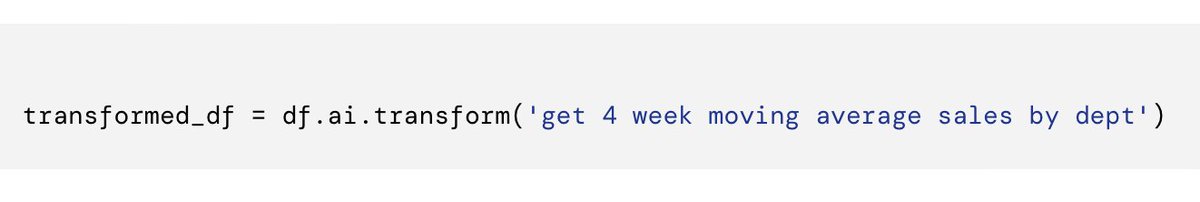

One of my favorite announcements: English SDK for Apache Spark! No more need to remember weird syntax, just chain transformations in natural language with the familiar Spark API. So many fun examples.

databricks.com/blog/introduci…

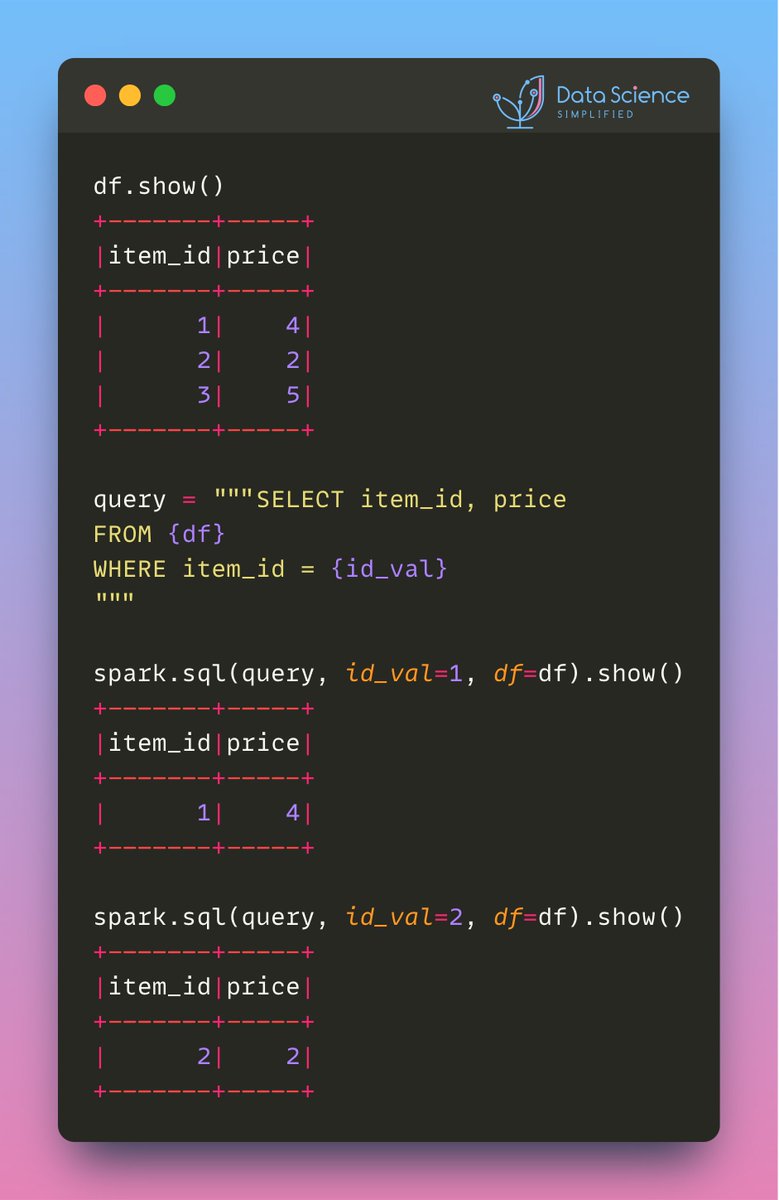

In PySpark, parametrized queries enable the same query structure to be reused with different inputs, without rewriting the SQL.

Additionally, they safeguard against SQL injection attacks by treating input data as parameters rather than as executable code.

#ApacheSpark

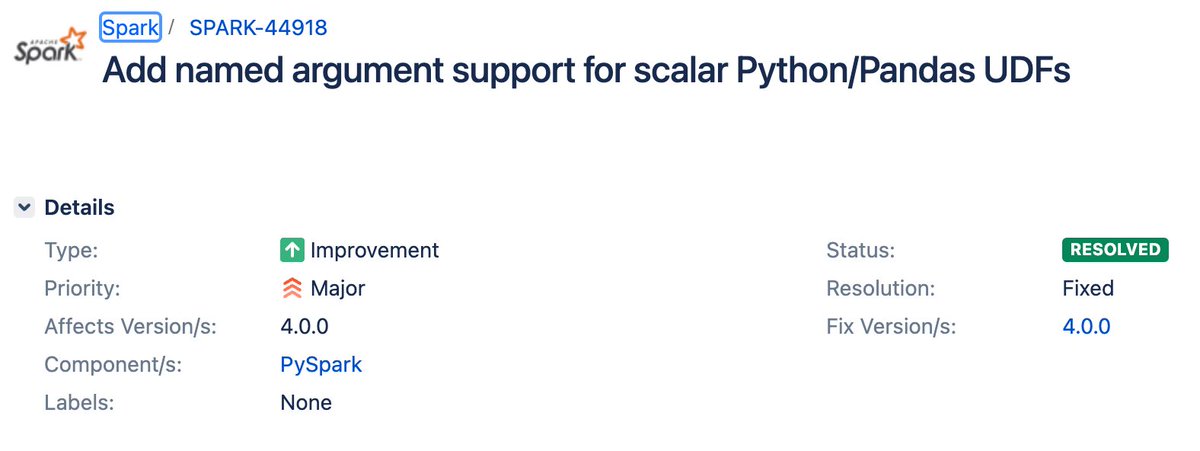

And we know what's coming in #ApacheSpark 4.0.0. This version surely makes us all long-time Spark users soooo OLD! 😆

And I wouldn't be surprised if some of my tricks are already outdated 😉

Named parameters in SQL statements have been available since 3.5.

Trusted Content to build your AI or ML stack from:

R

Python

LangChain

@Ollama_ai

TensorFlow

PyTorch

Intel Federated Learning

Apache Spark

Project Jupyter

Redis

Amazon Web Services Sagemaker

MLflow

& so many more.

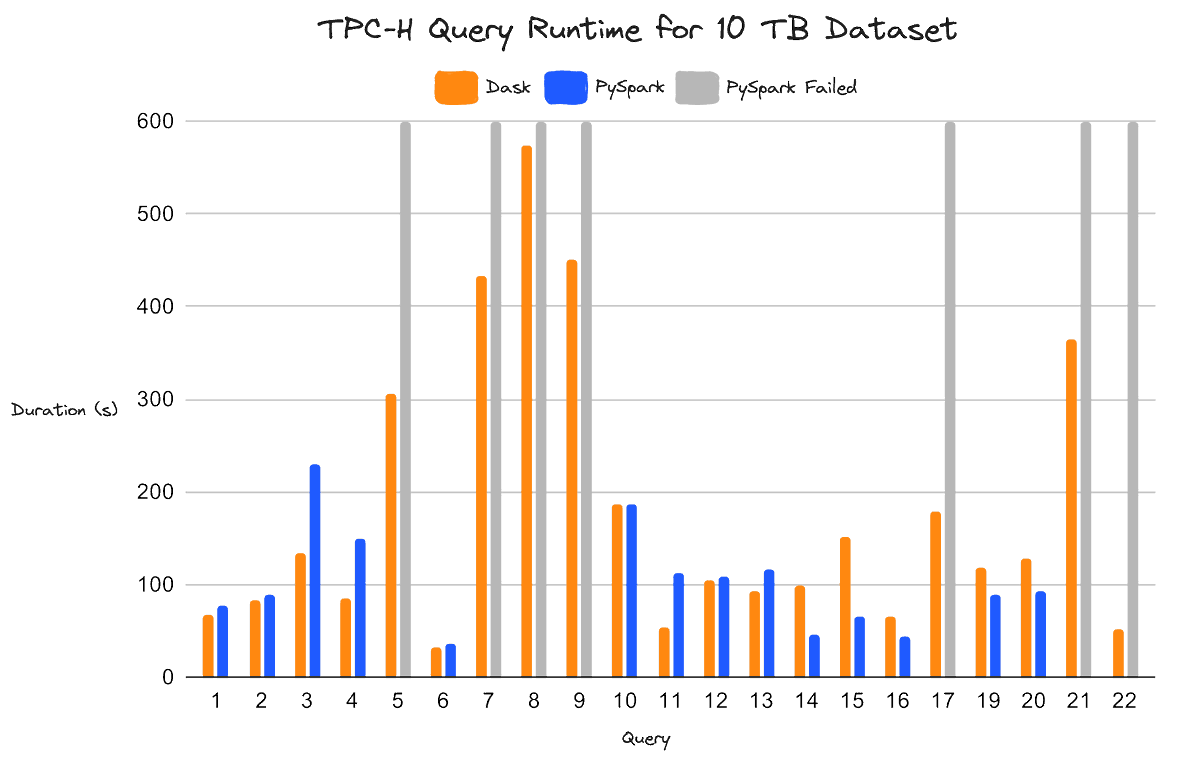

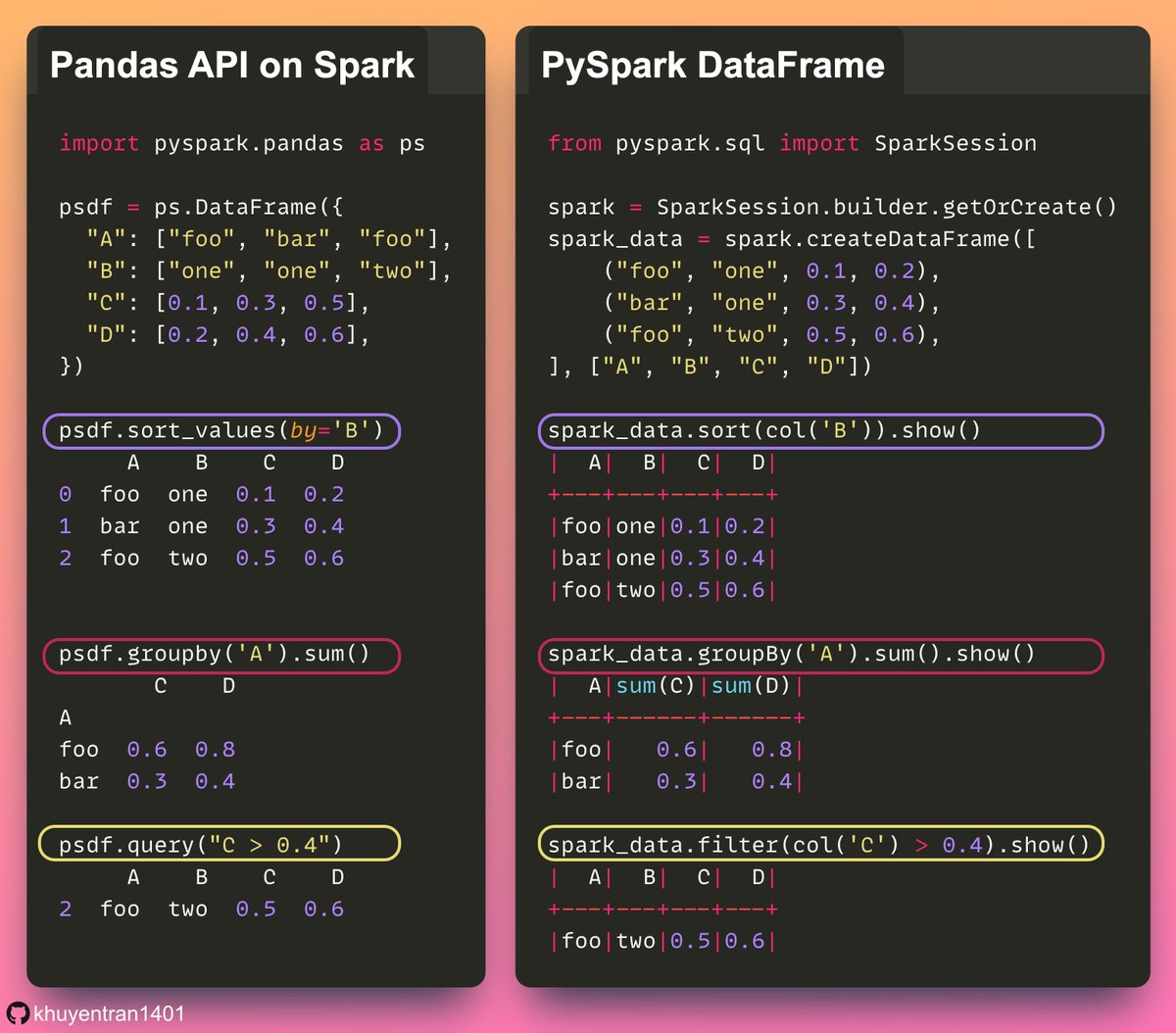

New blog post: a deep dive into dataframes and table abstractions featuring polars data, DuckDB, pandas, dbt, Apache Spark, Dask, Ponder, Fugue Project, ...: when to use which framework and how they compare or integrate with each other

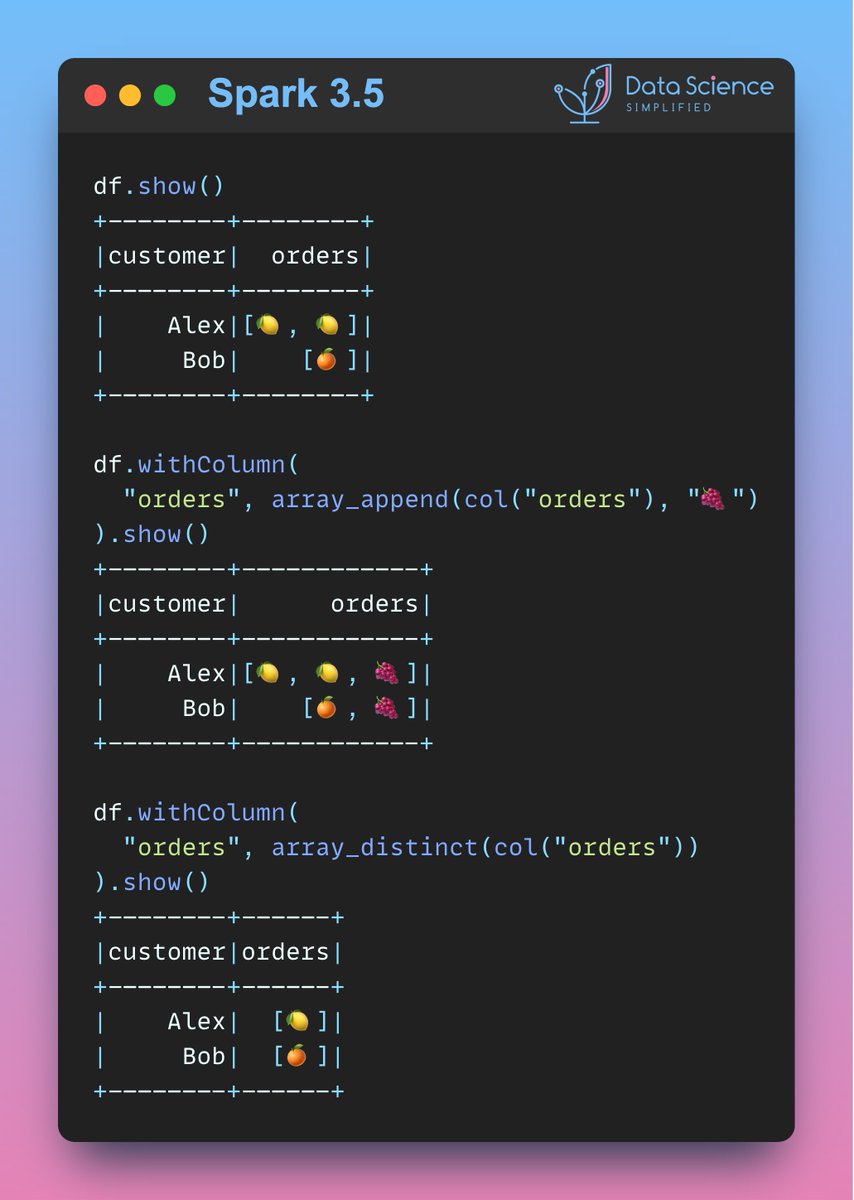

#ApacheSpark 3.5 added new array helper functions that simplify the process of working with array data. Below are a few examples showcasing these new array functions.

🚀 View other array functions: bit.ly/4c0txD1

⭐️ Bookmark this post: bit.ly/3TnNCM3

Full house at #VeryTechTrip for Claire and Adrien's talk on distributed image processing in the service of #AI! A real time-saver for data processing 😉... OVHcloud_Tech

#apachespark #dataprocessing