Sukjun (June) Hwang

@sukjun_hwang

ML PhD student @mldcmu advised by @_albertgu


http://sukjunhwang.github.io

04-04-2023 05:38:46


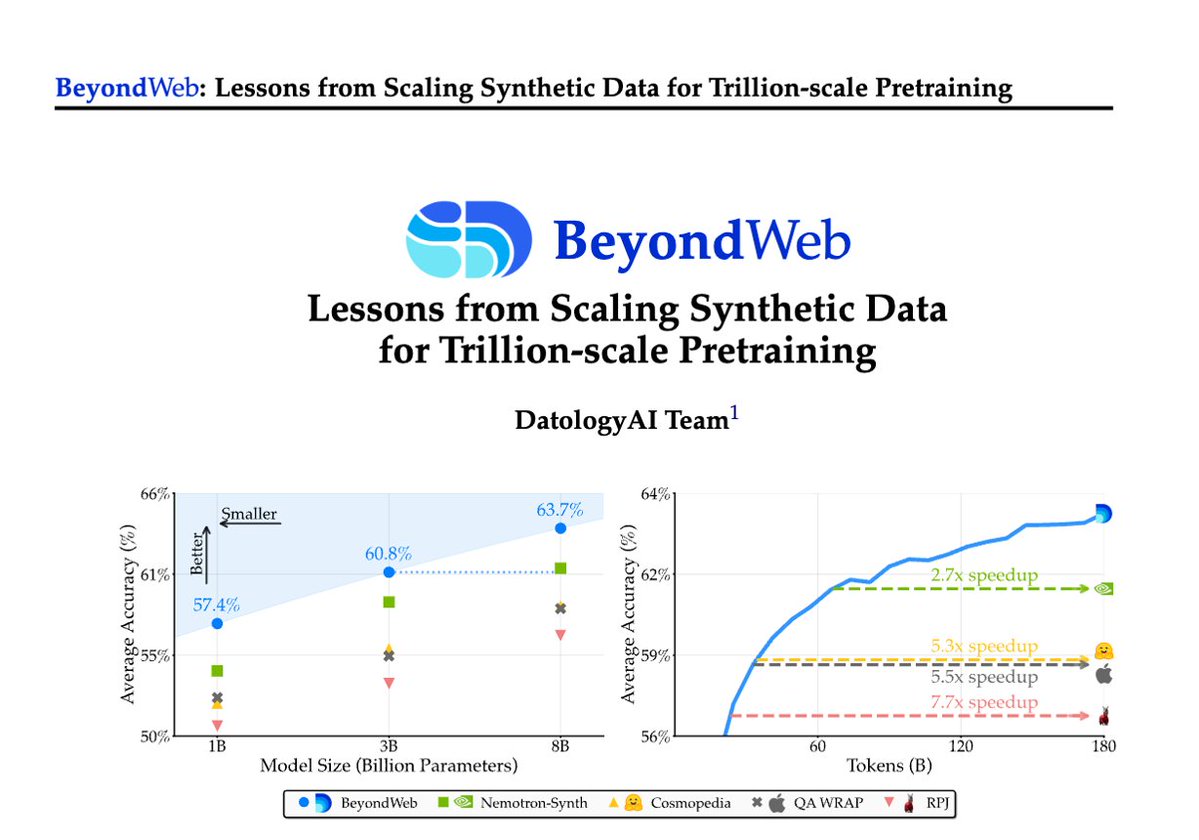

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach, and all the learnings from scaling it to trillions of tokens 🧑🏼‍🍳
- 3B LLMs beat 8B models 🚀
- Pareto frontier for performance