Katrina Drozdov (Evtimova)

@stochasticdoggo

AI researcher | PhD from @NYUDataScience | Bulgarian yogurt, prime numbers, and dogs bring me joy | she/her

ID: 904789658399322112

https://kevtimova.github.io/

04-09-2017 19:33:59

337 Tweets

391 Followers

347 Following

The recording of the GAN test of time talk by David Warde-Farley 🇺🇦 is now publicly available: neurips.cc/virtual/2024/t…

VideoJAM is our new framework for improved motion generation from AI at Meta. We show that video generators struggle with motion because the training objective favors appearance over dynamics. VideoJAM directly addresses this **without any extra data or scaling** 👇🧵

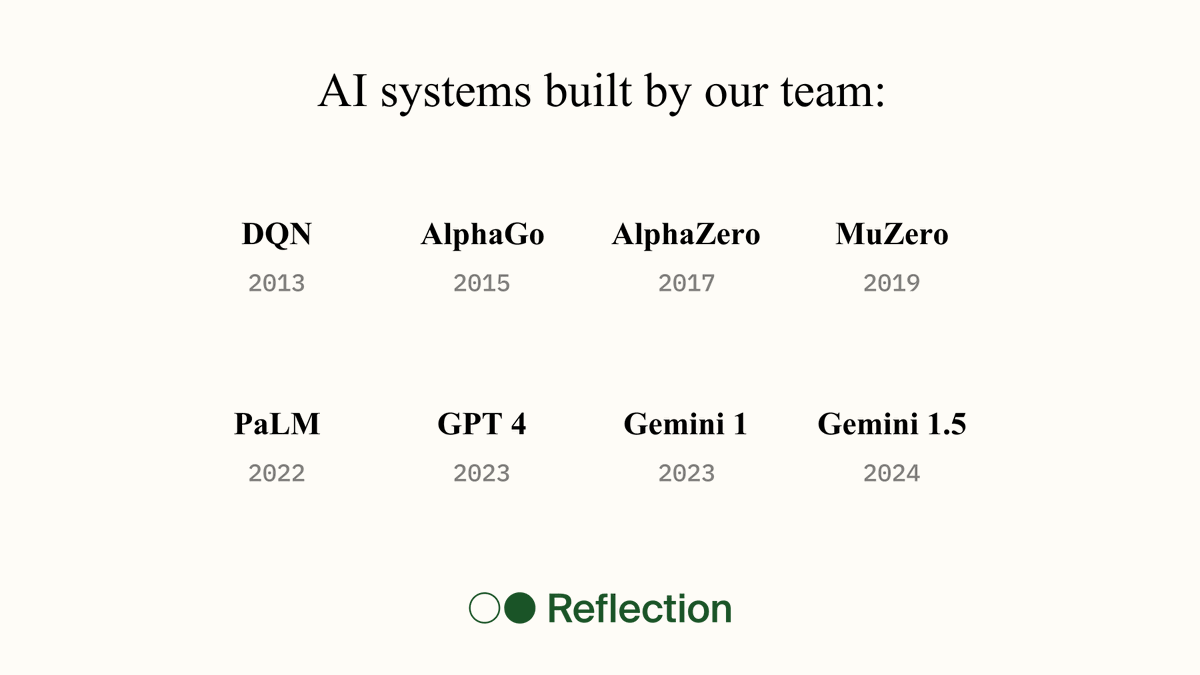

Today I’m launching Reflection AI with my friend and co-founder Ioannis Antonoglou. Our team pioneered major advances in RL and LLMs, including AlphaGo and Gemini. At Reflection, we're building superintelligent autonomous systems. Starting with autonomous coding.

Huge congratulations on the launch! Reflection AI has an incredible team and an ambitious mission—excited to follow your progress!

CDS PhD Vlad Sobal and Courant PhD Wancong (Kevin) Zhang show that when good data is scarce, planning beats traditional reinforcement learning. With Kyunghyun Cho, Tim G. J. Rudner, and Yann LeCun. nyudatascience.medium.com/when-good-data…

it's been more than a decade since KD was proposed, and i've been using it all along .. but why does it work? too many speculations but no simple explanation. Sungmin Cha and i decided to see if we can come up with the simplest working description of KD in this work. we ended

Really glad to see initiatives like Thinking Machines Tinker grants that support hands-on RL and open-weights LLM work in both research and teaching. What an exciting opportunity for the community!