Steffen Röcker

@sroecker

OG local LLaMA shill. Sr. Solution Architect @RedHat, ex particle physicist. Born @ 347 ppm CO₂. Personal account, potentially unaligned.

ID: 22403036

01-03-2009 20:33:59

3.3K Tweets

1.1K Followers

6.6K Following

Join us for a deep dive into Zero-Shot Named Entity Recognition with GLiNER presented by Ihor Stepanov on Tuesday, August 26th. Thanks to our Retrieval and Search program leads Mayank Rakesh and Avinab Neogy for organizing this session ✨ Learn more: cohere.com/events/Cohere-…

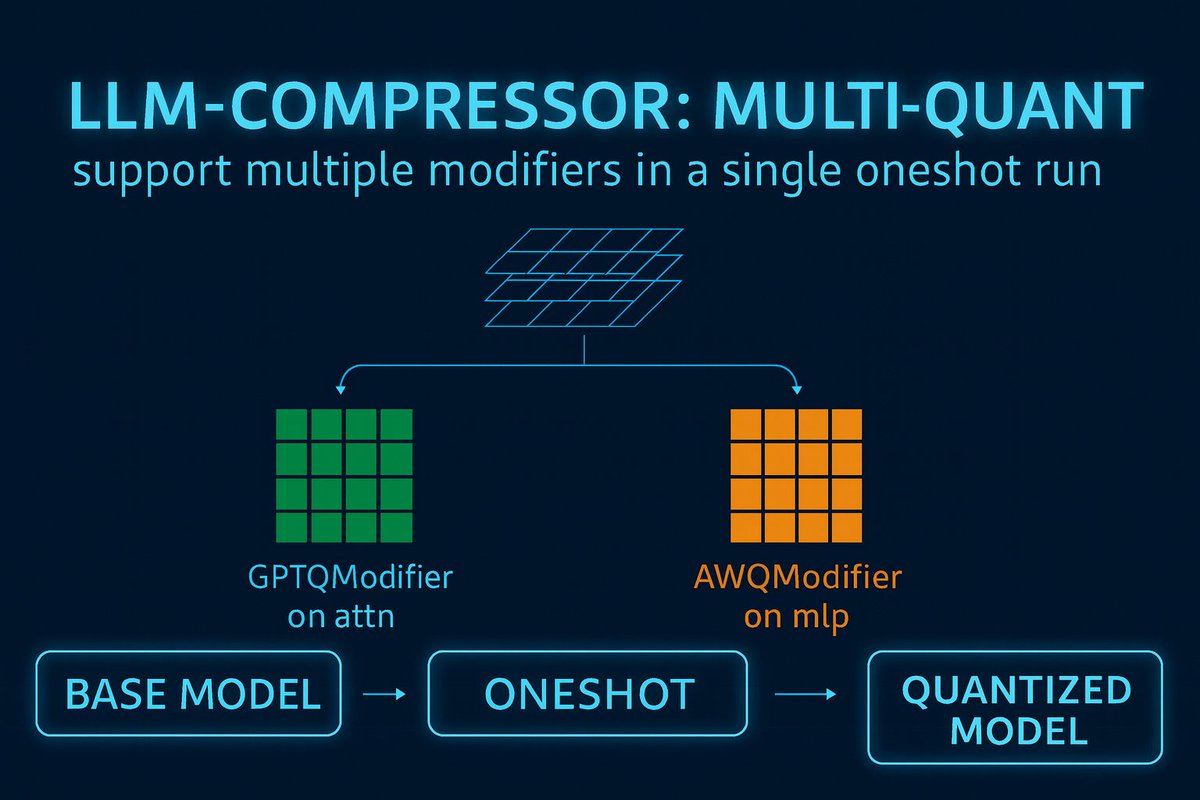

🚀 LLM Compressor v0.7.0 is here! This release brings powerful new features for quantizing large language models, including transform support (QuIP, SpinQuant), mixed precision compression, improved MoE handling with Llama4 support, and more. Full blog: developers.redhat.com/articles/2025/…

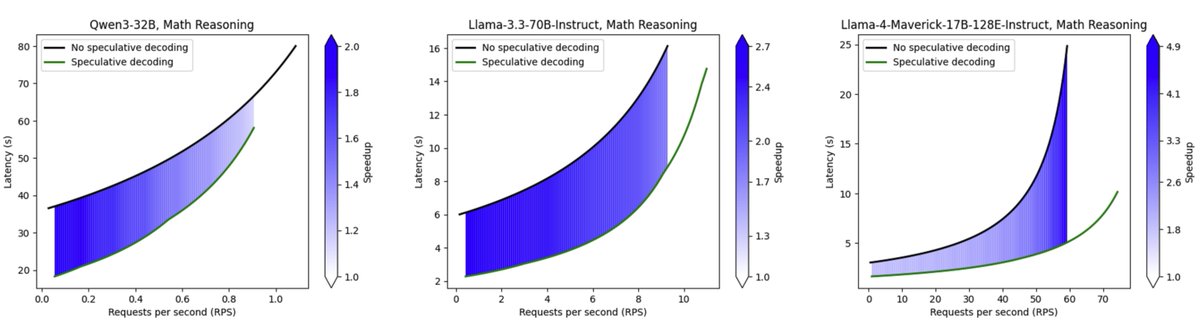

Today, we are officially open-sourcing a set of high-quality speculator models on the Hugging Face Hub. Our first release includes Llamas, Qwens, and gpt-oss. In practice, you can expect 1.5–2.5× speedups on average, with some workloads seeing more than 4× improvements!

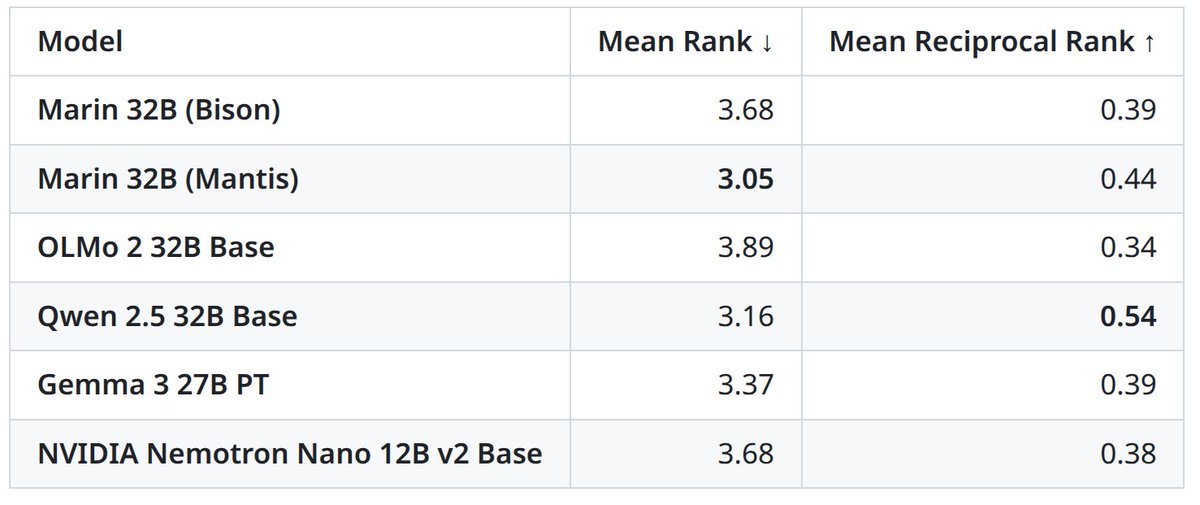

So cool to see Artificial Analysis add openness in their analysis!

Dan Alistarh (@dalistarh):

🚀 Excited to announce QuTLASS v0.1.0 🎉

QuTLASS is a high-performance library for low-precision deep learning kernels, following NVIDIA CUTLASS.

The new release brings 4-bit NVFP4 microscaling and fast transforms to NVIDIA Blackwell GPUs (including the B200!)

[1/N]

![Dan Alistarh (@dalistarh) on Twitter photo](https://pbs.twimg.com/media/G0Wh4Q8WwAAAEIr.png)