Sparsity in LLMs Workshop at ICLR 2025

@sparsellms

Workshop on Sparsity in LLMs: Deep Dive into Mixture of Experts, Quantization, Hardware, and Inference @iclr_conf 2025.

ID: 1870258556156727297

https://www.sparsellm.org 21-12-2024 00:03:15

21 Tweet

165 Followers

15 Following

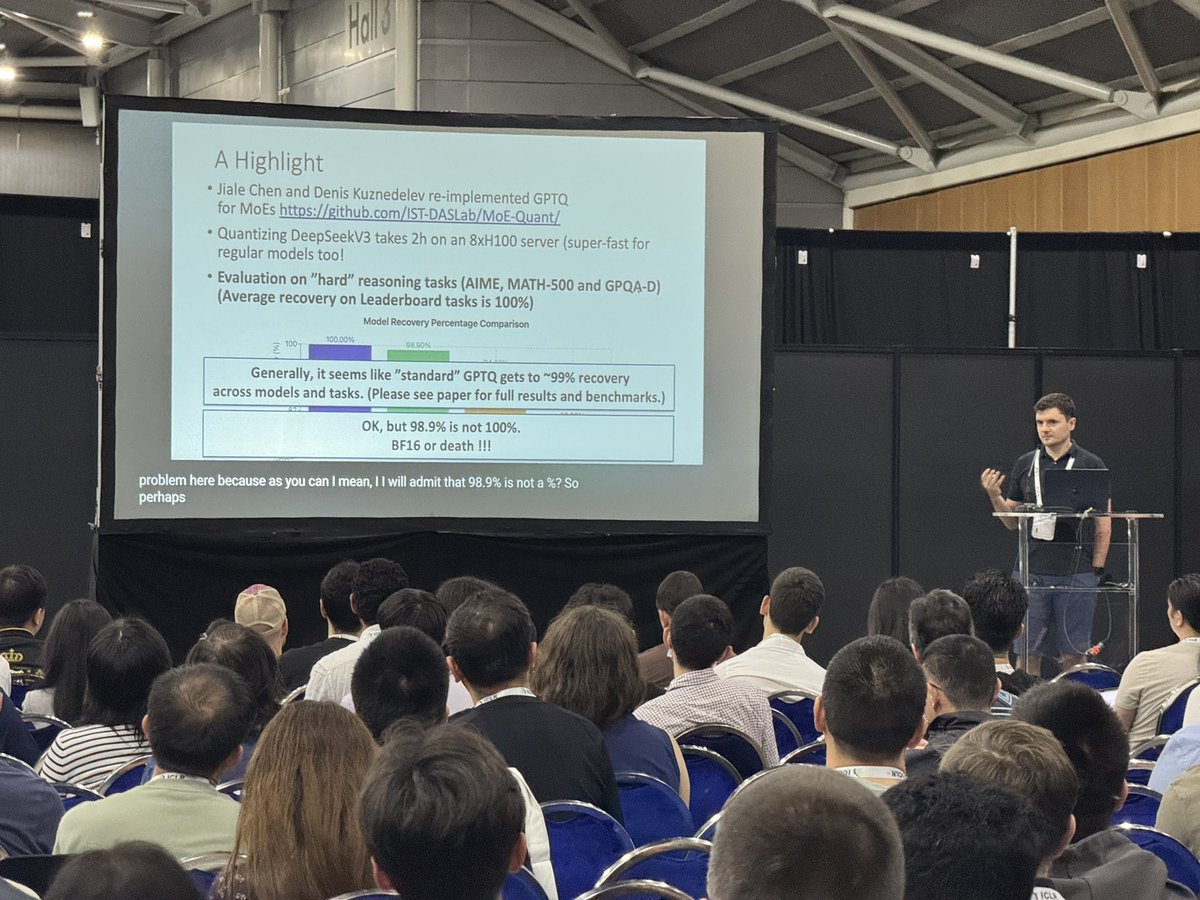

Our QuEST paper was selected for Oral Presentation at the Sparsity in LLMs Workshop at ICLR 2025! QuEST is the first algorithm with Pareto-optimal LLM training for 4-bit weights/activations, and can even train accurate 1-bit LLMs. Paper: arxiv.org/abs/2502.05003 Code: github.com/IST-DASLab/QuE…

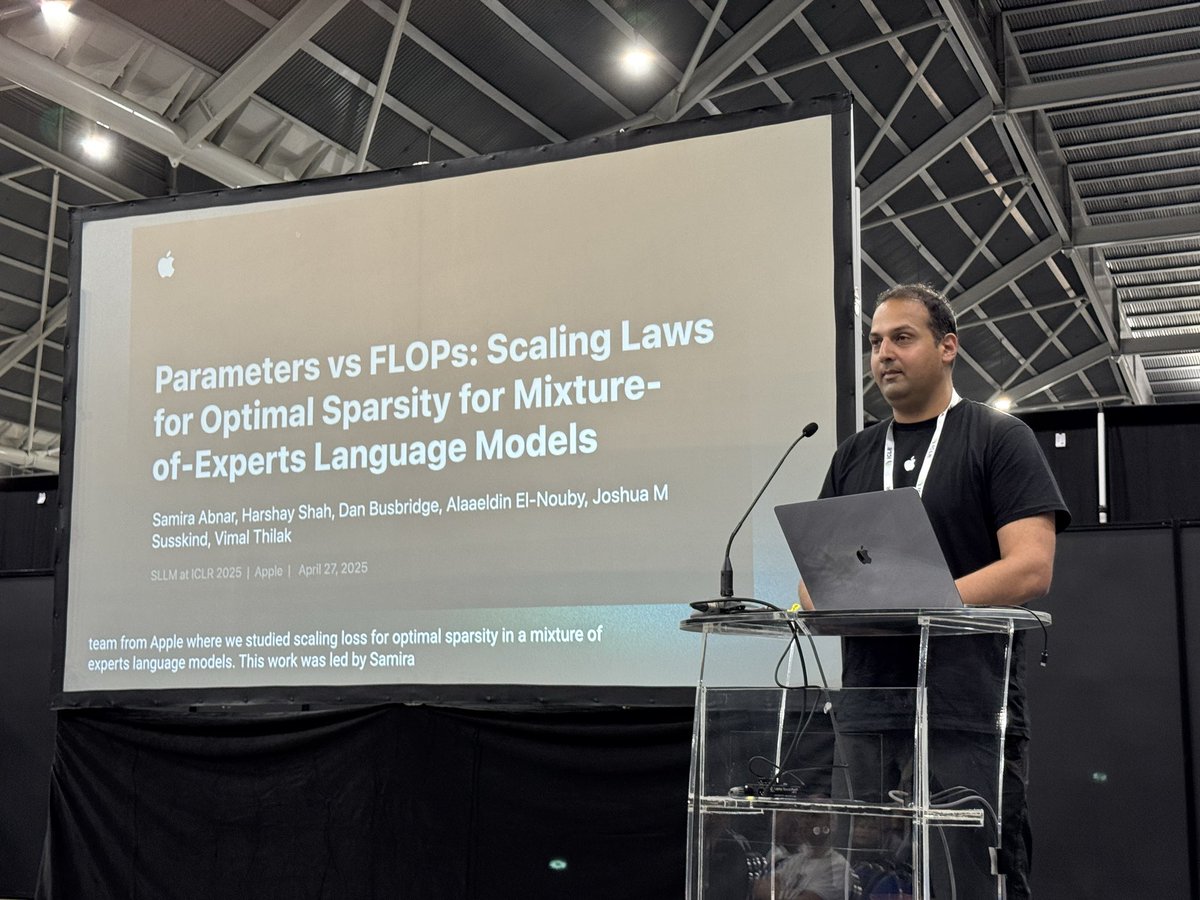

Check out this post that has information about research from Apple that will be presented at ICLR 2025 in 🇸🇬 this week. I will be at ICLR and will be presenting some of our work (led by Samira Abnar) at the SLLM (Sparsity in LLMs) Workshop at ICLR 2025. Happy to chat about JEPAs as well!

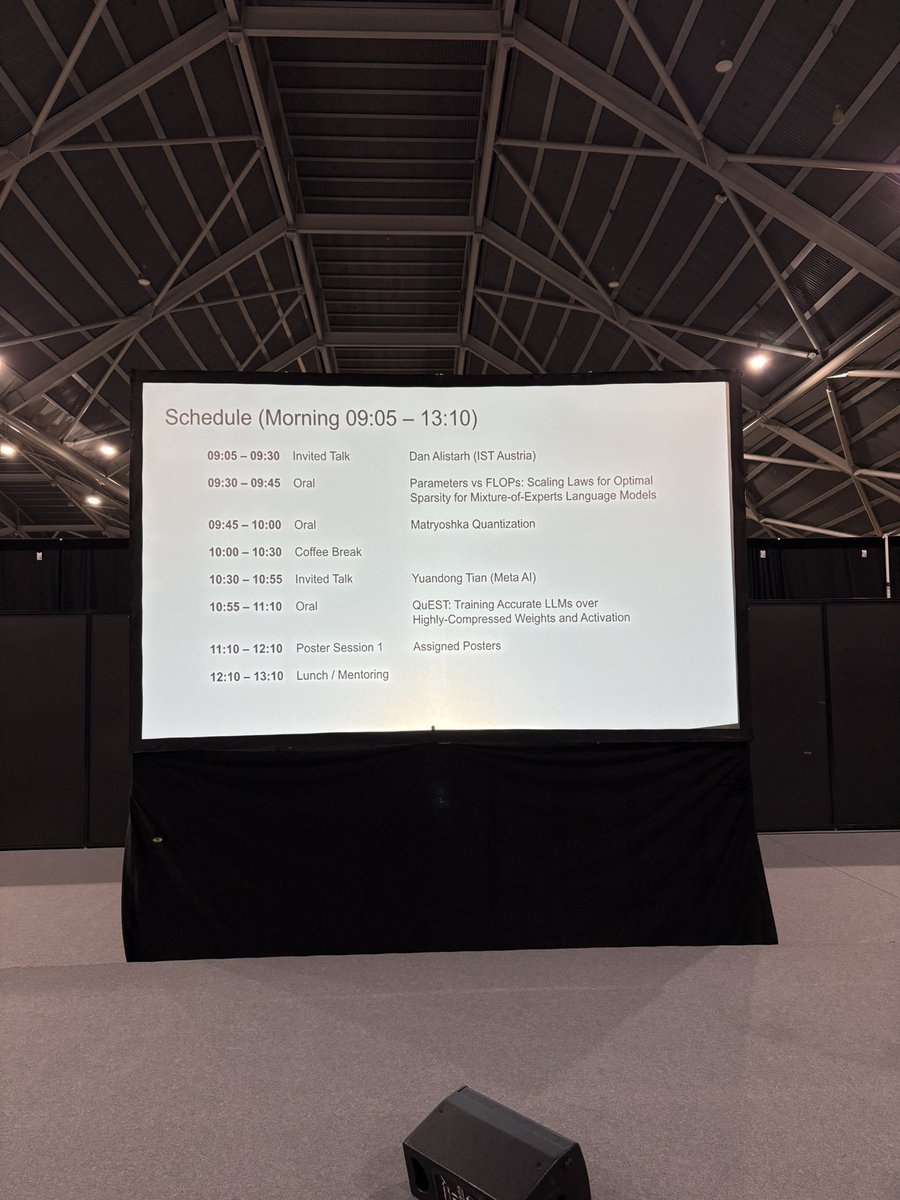

I will be travelling to Singapore 🇸🇬 this week for the ICLR 2025 Workshop on Sparsity in LLMs (SLLM) that I'm co-organizing! We have an exciting lineup of invited speakers and panelists including Dan Alistarh, Gintare Karolina Dziugaite, Pavlo Molchanov, Vithu Thangarasa, Yuandong Tian and Amir Yazdan.

The Sparse LLM workshop will run on Sunday with two poster sessions, a mentoring session, 4 spotlight talks, 4 invited talks and a panel session. We'll host an amazing lineup of researchers: Dan Alistarh, Vithu Thangarasa, Yuandong Tian, Amir Yazdan, Gintare Karolina Dziugaite, Olivia Hsu, Pavlo Molchanov, Yang Yu

If you’re at #ICLR2025, go watch Vimal Thilak🦉🐒 give an oral presentation at the @SparseLLMs workshop on scaling laws for pretraining MoE LMs! Had a great time co-leading this project with Samira Abnar & Vimal Thilak🦉🐒 at Apple MLR last summer. When: Sun Apr 27, 9:30a Where: Hall 4-07

our workshop on sparsity in LLMs is starting soon in Hall 4.7! we’re starting strong with an invited talk from Dan Alistarh and an exciting oral on scaling laws for MoEs!

Dan Alistarh giving his invited talk at the #ICLR2025 Sparsity in LLMs Workshop now!

Vimal Thilak🦉🐒 giving the first Oral talk at the #ICLR2025 Sparsity in LLMs Workshop

Our ICLR 2025 Workshop on Sparsity in LLMs kicks off with a talk by Dan Alistarh on lossless (~1% perf drop) LLM compression using quantization across various benchmarks.

Presenting shortly! 👉🏼 Mol-MoE: leveraging model merging and RLHF for test-time steering of molecular properties. 📆 today, 11:15am to 12:15pm 📍 Poster session #1, GEM Bio Workshop, Sparsity in LLMs Workshop at ICLR 2025 #ICLR #ICLR2025

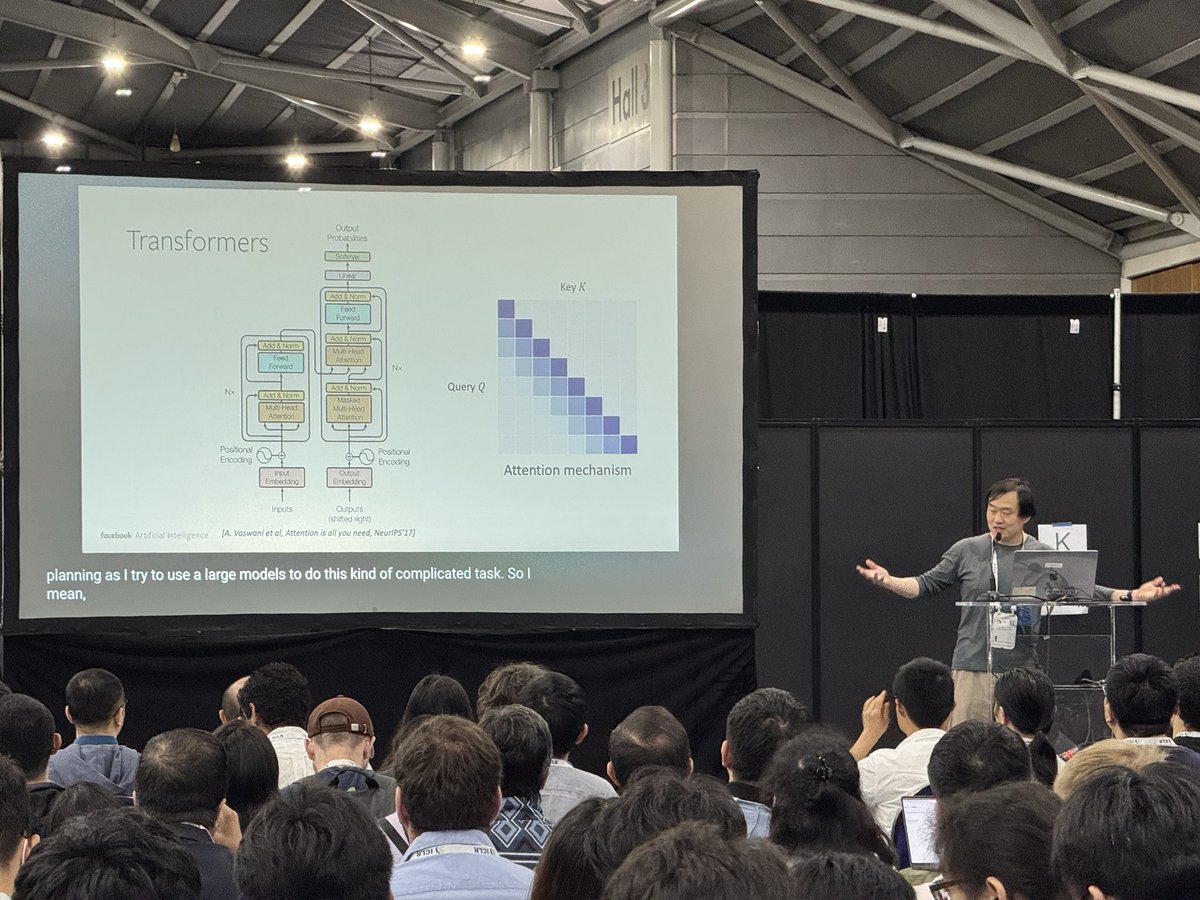

Yuandong Tian giving his invited talk at the #ICLR2025 Sparsity in LLMs Workshop!

black_samorez giving his oral talk at the #ICLR2025 Sparsity in LLMs Workshop

#ICLR2025 Sparsity in LLMs Workshop panel with Pavlo Molchanov, Amir Yazdan, Dan Alistarh, Dan Fu, Yang You, and Olivia Hsu, moderated by Ashwinee Panda

We are presenting “Prefix and output length-aware scheduling for efficient online LLM inference” at the ICLR 2025 Sparsity in LLMs workshop. 🪫 Challenge: LLM inference in data centers benefits from data parallelism. How can we exploit patterns in