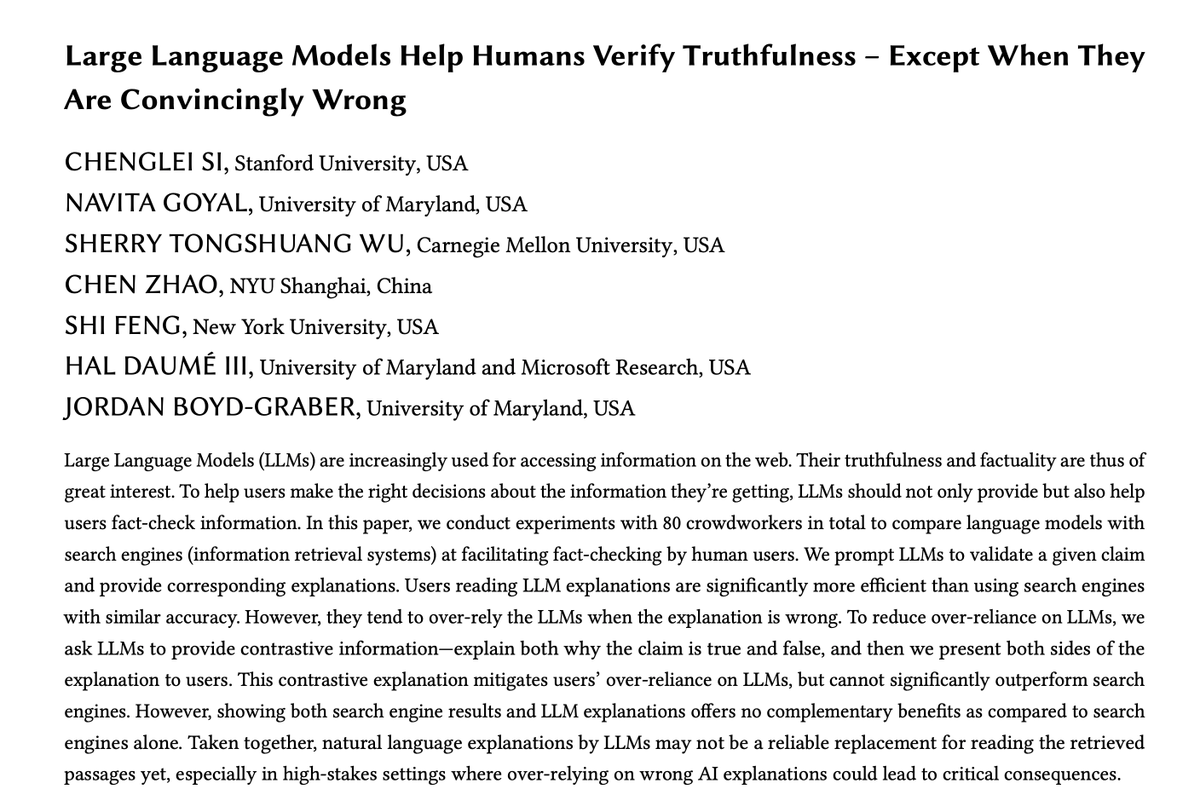

Nan Hu

@sp1derng

Ph.D. Candidate at @SEU1902_NJ, currently a visiting student at @EdinburghNLP. Knowledge Graphs, Question Answering, Natural Language Processing.

ID: 1565571870753075201

02-09-2022 05:27:01

8 Tweets

11 Followers

86 Following

Don't miss our #ACL2023 tutorial on Retrieval-based LMs and Applications this Sunday!

acl2023-retrieval-lm.github.io

with Sewon Min, Zexuan Zhong, Danqi Chen

We'll cover everything from architecture design and training to exploring applications and tackling open challenges! [1/2]

![Akari Asai (@akariasai) on Twitter photo](https://pbs.twimg.com/media/F0eE8smaMAIqcQb.jpg)