So Yeon (Tiffany) Min on Industry Job Market

@soyeontiffmin

5th-year PhD student at CMU MLD @mldcmu & Apple AI/ML fellowship. Prev: Apple, Meta. B.S. and M.Eng. from @MITEECS. Advised by @rsalakhu and @ybisk.

ID: 1449394298294837255

https://soyeonm.github.io/ 16-10-2021 15:18:34

453 Tweets

755 Followers

240 Following

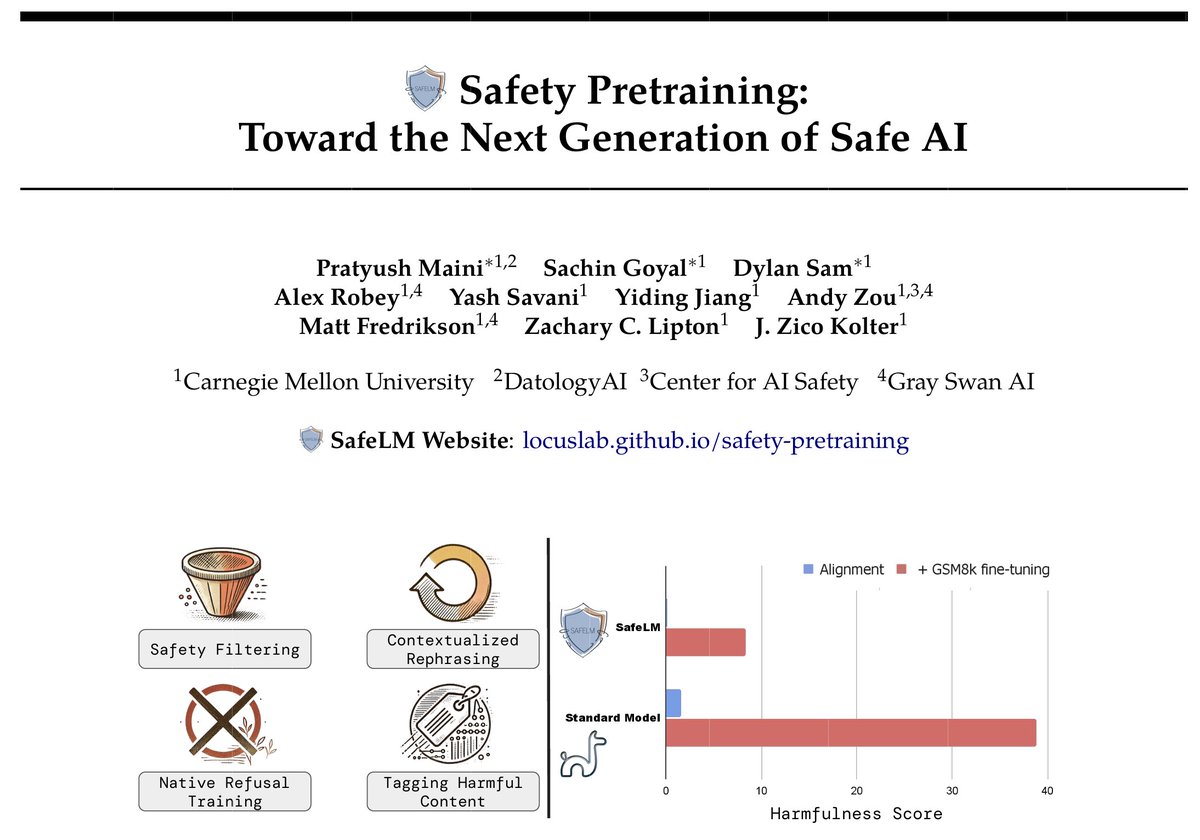

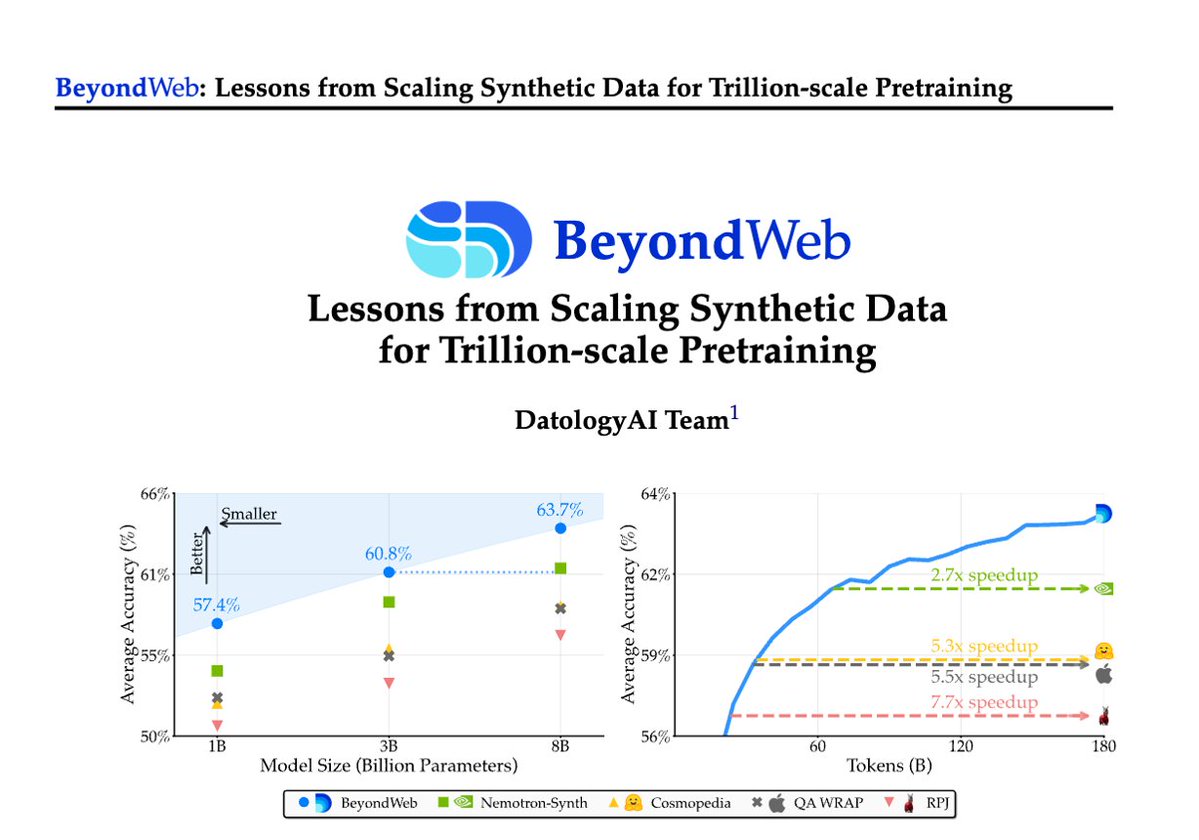

1/Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens🧑🏼🍳 - 3B LLMs beat 8B models🚀 - Pareto frontier for performance

🌻 Excited to announce that I’ve moved to NYC to start as an Assistant Prof/Faculty Fellow at New York University! If you’re in the area, reach out & let’s chat! Would love coffee & tea recs as well 🍵

I have been selected for Forbes JAPAN's "30 Under 30 Who Will Change the World"! I will keep working hard on my research. Let's make Japan the center of robotics again!!!!! 30 UNDER 30【ForbesJAPAN】 #u30fj

Training a Whole-Body Control Foundation Model: new work from my team at Agility Robotics. A neural network for controlling our humanoid robots that is robust to disturbances, can handle heavy objects, and is a powerful platform for learning new whole-body skills.

Mind-blowing view of #AuroraBorealis on my flight from JFK → SFO. The wildest part? I edited this shot on my phone at 35,000 ft using freakin' Nano Banana 🍌 via the Google Gemini App ♊️, and I'm posting this from the same altitude right now thanks to Delta WiFi. The future is officially here ✨

Thank you, Rohan Paul, for highlighting our work!💫 Front-Loading Reasoning shows that including reasoning data in pretraining is beneficial, does not lead to overfitting after SFT, & has a latent effect that SFT unlocks! Paper: arxiv.org/abs/2510.03264 Blog: