Siva Reddy

@sivareddyg

Assistant Professor @Mila_Quebec @McGillU @ServiceNowRSRCH; Postdoc @StanfordNLP; PhD @EdinburghNLP; Natural Language Processor #NLProc

ID: 56686035

https://sivareddy.in 14-07-2009 12:56:42

1.1K Tweets

5.5K Followers

1.1K Following

My lab at Johns Hopkins University is recruiting research and communications professionals, as well as AI postdocs, to advance our work ensuring that AI is safe and aligned with human well-being worldwide. We're hiring an AI Policy Researcher to conduct in-depth research into the technical and policy

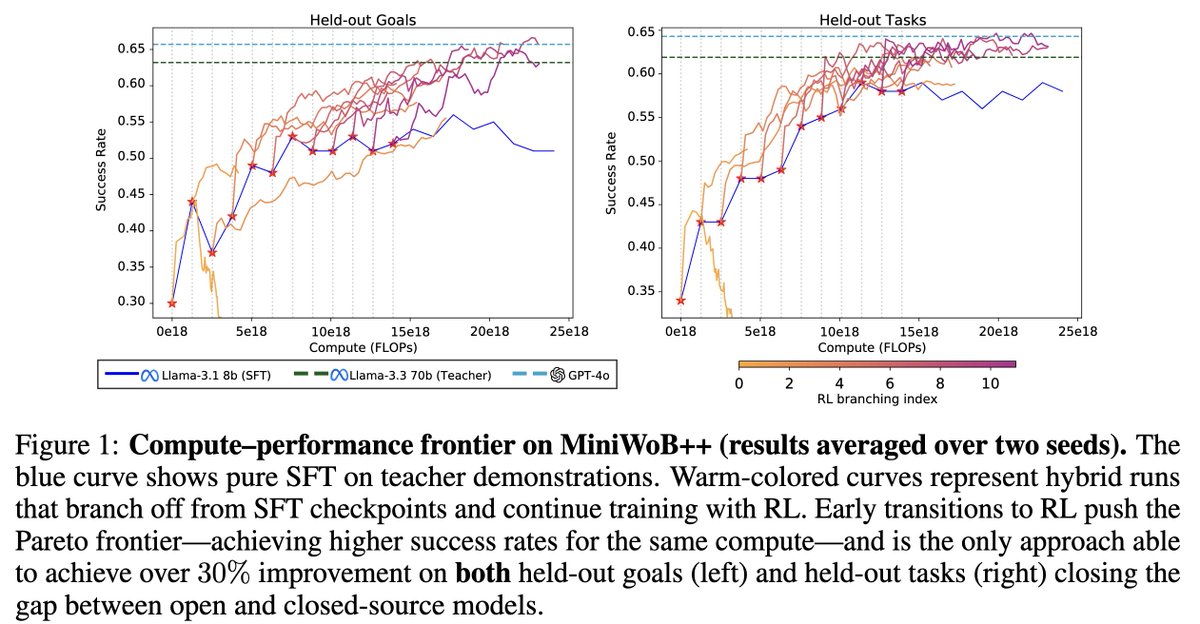

🚨 New Google DeepMind paper "Robust Reward Modeling via Causal Rubrics" 📑 👉 arxiv.org/abs/2506.16507 We tackle reward hacking, where reward models (RMs) latch onto spurious cues (e.g. length, style) instead of true quality. #RLAIF #CausalInference 🧵⬇️

I thoroughly enjoyed reading Verna Dankers's dissertation; my personal highlight was her idea of maps that track the training memorisation versus test generalisation of each example. I wish you all the best for the upcoming postdoc with Siva Reddy and his wonderful group!

I’ve joined AIX Ventures as a General Partner, working on investing in deep AI startups. Looking forward to working with founders on solving hard problems in AI and seeing products come out of that! Thank you Yuliya Chernova at The Wall Street Journal for covering the news: wsj.com/articles/ai-re…

Fantastic job, Verna Dankers, on passing your viva with flying colors! Absolutely thrilled to have you join us as a postdoc at Mila - Institut québécois d'IA and McGill NLP. So excited for the amazing things we'll work on together!

🚨 Excited to announce two invited speakers at #BlackboxNLP 2025! Join us to hear from two leading voices in interpretability: 🎙️ Quanshi Zhang (Shanghai Jiao Tong University) 🎙️ Verna Dankers (McGill University)

AgentRewardBench will be presented at the Conference on Language Modeling (COLM) 2025 in Montreal! See you soon, and ping me if you want to meet up!

🎉 Personal update: I'm thrilled to announce that I'm joining Imperial College London as an Assistant Professor of Computing (Imperial Computing) starting January 2026. My future lab and I will continue to work on building better Generative Models 🤖, the hardest

📢 Attention Attention ServiceNow Research is hiring a Research Scientist with a focus on Agent Safety+Security 👩🏻🔬 Join us to work on impactful open research projects like 🔹DoomArena: github.com/ServiceNow/doo… 🔹BrowserGym: github.com/ServiceNow/Bro… Apply: jobs.smartrecruiters.com/ServiceNow/744…