Shivam Duggal

@shivamduggal4

PhD Student @MIT |

Prev: Carnegie Mellon University @SCSatCMU | Research Scientist @UberATG

ID: 880123482947723264

http://shivamduggal4.github.io 28-06-2017 17:59:25

116 Tweet

916 Followers

408 Following

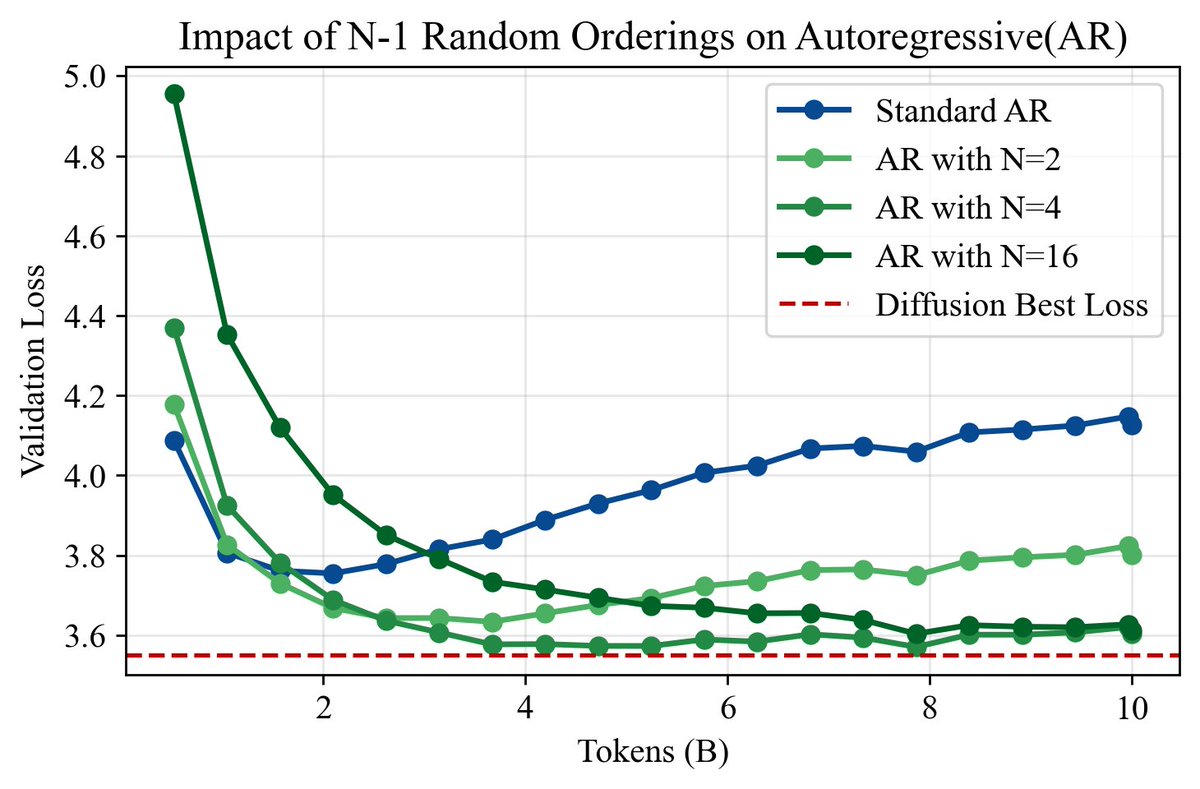

Great work from great people, Mihir Prabhudesai and Deepak Pathak! AR aligns w/ compression theory (KC, MDL, arithmetic coding), but diffusion is MLE too. Can we interpret diffusion similarly? Curious how compression explains AR vs. diffusion scaling laws. (Ilya’s talk touches on this too.)

For NeurIPS Conference, we can't update the main PDF or upload a separate rebuttal PDF — so no way to include any new images or visual results? What if reviewers ask for more vision experiments? 🥲 Any suggestions or workarounds?

LLMs, trained only on text, might already know more about other modalities than we realized; we just need to find ways to elicit it. Project page: sophielwang.com/sensory w/ Phillip Isola and Brian Cheung

[1/7] Paired multimodal learning shows that training with text can help vision models learn better image representations. But can unpaired data do the same? Our new work shows that the answer is yes! w/ Shobhita Sundaram, Chenyu (Monica) Wang, Stefanie Jegelka, and Phillip Isola

![Sharut Gupta (@sharut_gupta) on Twitter photo](https://pbs.twimg.com/media/G25xuHaXcAAsrgZ.jpg)

Check out this thorough study on multimodal action-conditioned video generation from Anthea Li @ ICCV2025, and drop by her ICCV poster. Big congratulations 🙌