Shawn Im

@shawnim00

PhD Student @UWMadison. Prev @MIT

shawn-im.github.io

ID: 1580062097312026624

12-10-2022 05:05:51

36 Tweets

106 Followers

145 Following

🚨 We’re hiring! The Radio Lab @ NTU Singapore is looking for PhD, master's, and undergraduate students, as well as RAs and interns, to build responsible AI and LLMs. Remote or onsite, starting in 2025. Interested? Email us: [email protected] 🔗 d12306.github.io/recru.html Please spread the word if you can!

Excited to share that I have received the NSF GRFP!!😀 I'm really grateful to my advisor Sharon Li for all her support, to Yilun Zhou and Jacob Andreas, and to everyone else who has guided me through my research journey! #nsfgrfp

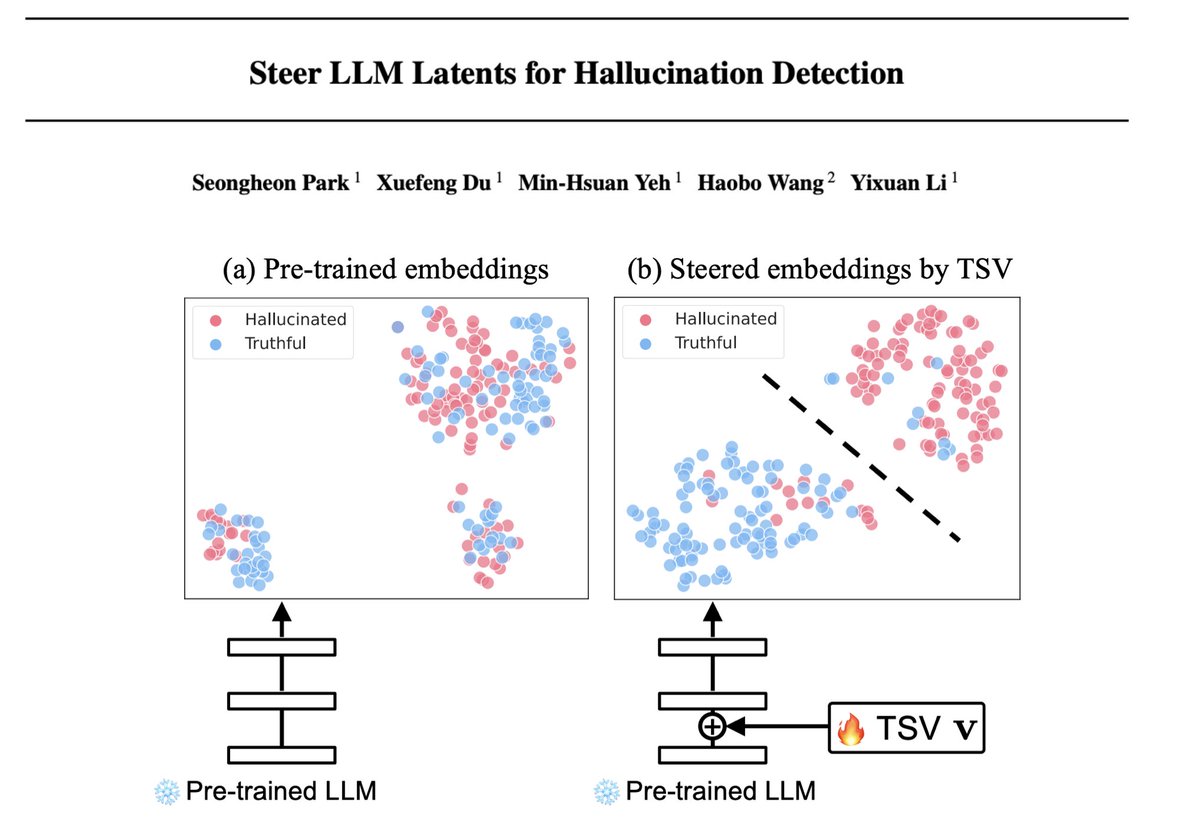

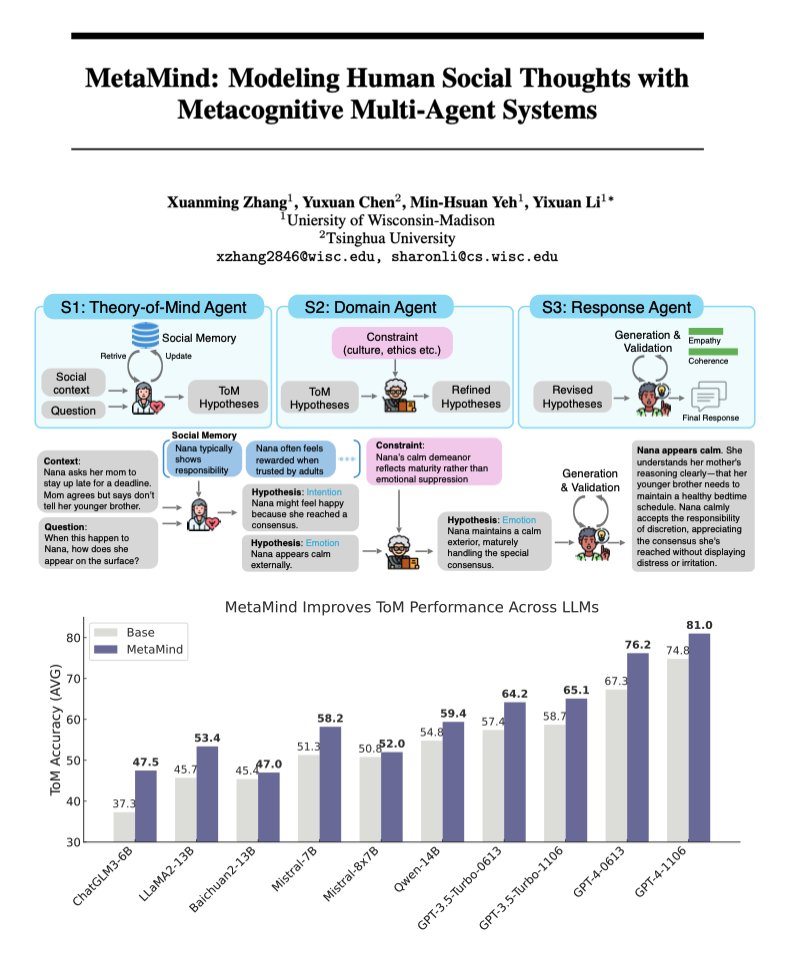

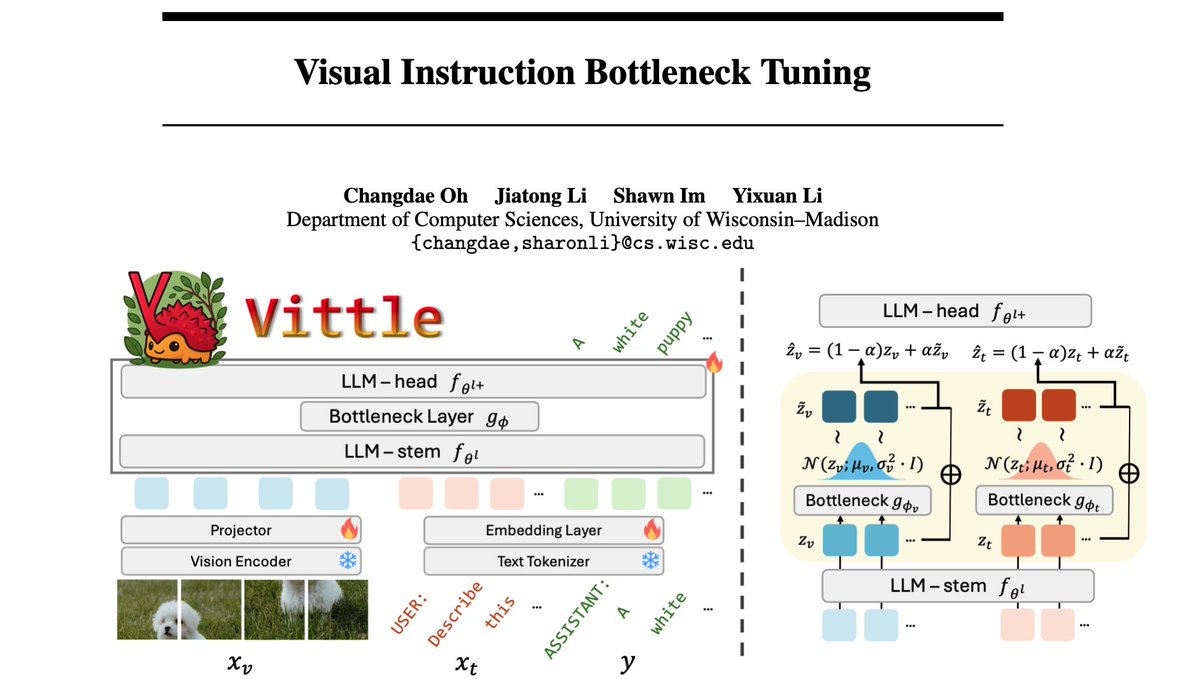

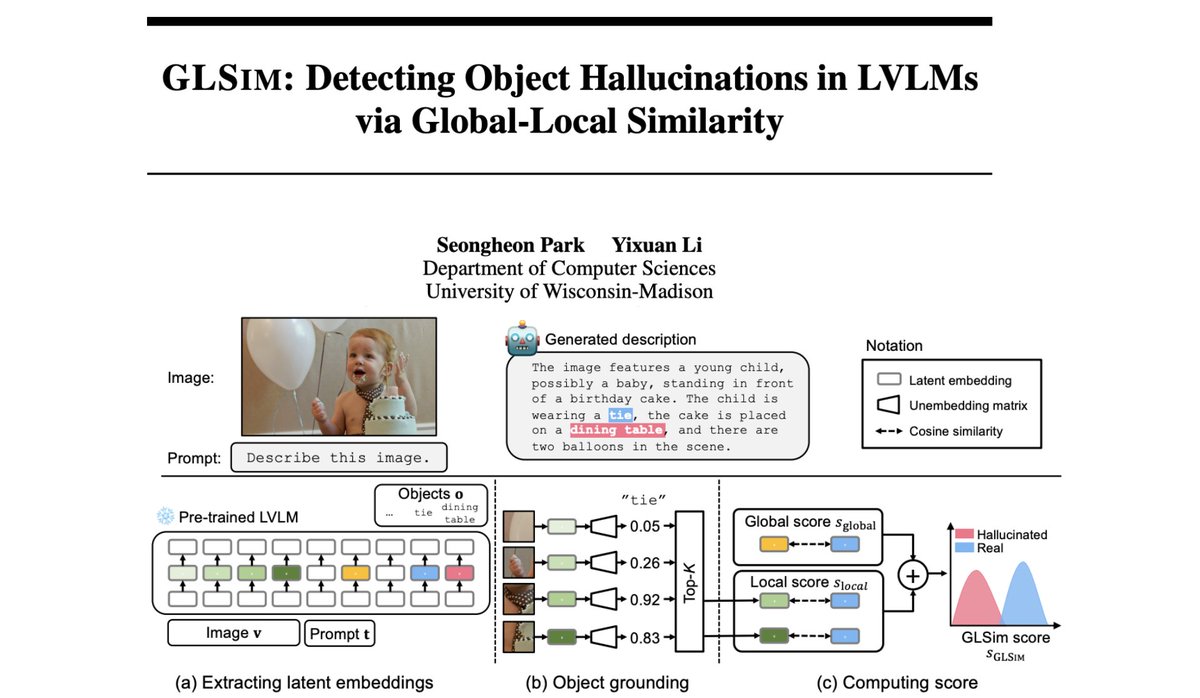

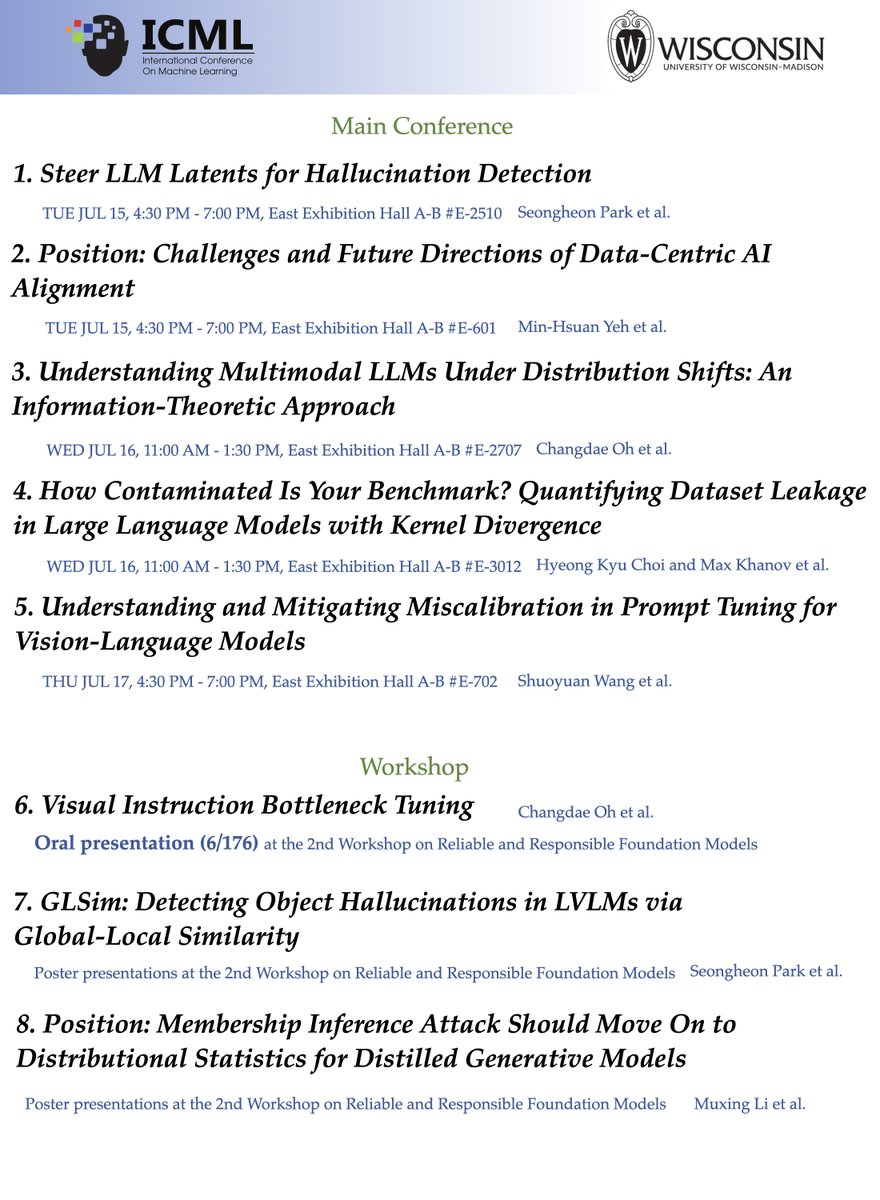

✨ My lab will be presenting a series of papers on LLM reliability and safety at #ICML2025, covering topics like hallucination detection, distribution shifts, alignment, and dataset contamination. If you’re attending ICML, please check them out! My students Hyeong-Kyu Froilan Choi, Shawn Im

Human preference data is noisy: inconsistent labels, annotator bias, etc. No matter how fancy the post-training algorithm is, bad data can sink your model. 🔥 Min Hsuan (Samuel) Yeh and I are thrilled to release PrefCleanBench — a systematic benchmark for evaluating data cleaning

Heading to SD for #NeurIPS2025 soon! Excited that many students will be there presenting: Hyeong-Kyu Froilan Choi, Shawn Im, Leitian Tao, Seongheon Park, Changdae Oh, Samuel (Min Hsuan) Yeh, Galen Jiatong Li, Wendi Li, and xuanming zhang. Let’s enjoy AI conferences while they last. You can find me at

James Oldfield (@jamesaoldfield): Sparse MLPs/dictionaries learn interpretable features in LLMs, yet provide poor layer reconstruction.

Mixture of Decoders (MxDs) expand dense layers into sparsely activating sublayers instead, for a more faithful decomposition!

📝 arxiv.org/abs/2505.21364

[1/7]

![James Oldfield tweet photo](https://pbs.twimg.com/media/Gsd18AkW0AA0huF.jpg)