Sam Passaglia

@sampassaglia

Enterprise LLMs for Japan @cohere

Prev. PhD @UChicagoAstro

🇺🇸🇯🇵🇫🇷

ID: 1270176098760916994

http://passaglia.jp 09-06-2020 02:10:30

211 Tweets

318 Followers

936 Following

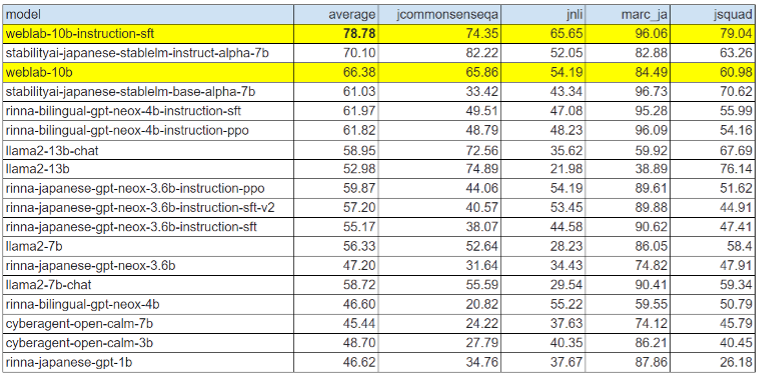

Another new SOTA Japanese LLM has just been released: weblab-10b, from Kojima Takeshi of the University of Tokyo's Matsuo Lab 🥳🥳! My GPU cluster is down for upgrades this weekend, so adding it to Rakuda will have to wait, but on the JGLUE benchmark weblab-10b leads the pack!

Sam Passaglia: We use your Rakuda benchmark in our blog (written in Japanese). Thank you!

A new article for Nature by Tokyotronic discusses the race to develop home-grown LLMs in Japan! 🐪 There are even a couple of quotes from me discussing Rakuda. nature.com/articles/d4158…

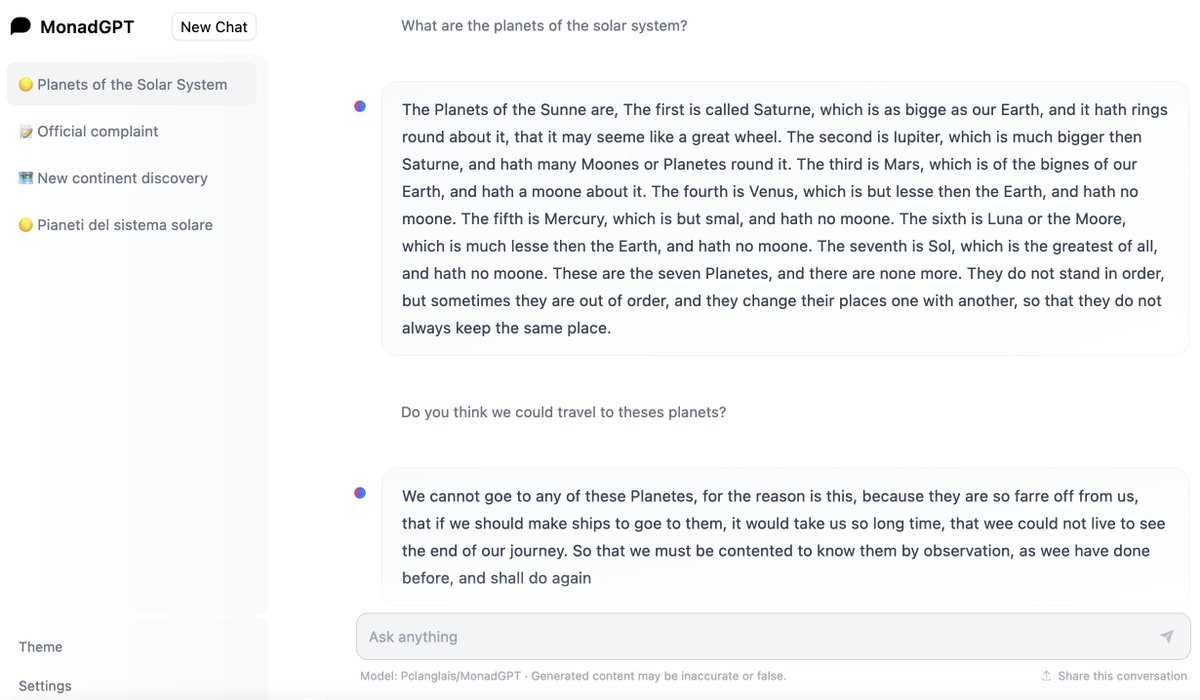

Big announcement: thanks to generous support from Hugging Face, I am releasing the early modern ChatGPT, MonadGPT: huggingface.co/spaces/Pclangl… Any question in English or French will be answered from the perspective of someone living between 1500 and 1750.

On 7 August we're holding our 3rd TAI AAI (Tokyo AI Advanced AI) session, focused on NLP. Come listen to researchers and engineers from the top Japanese labs present their work on LLMs. Apply to attend: lu.ma/iiu09leb Organizers: Kai Arulkumaran and Sam Passaglia

I had such a blast at @tokyoaijp's NLP session last night, hearing from our 4 amazing speakers, @[email protected], Kakeru Hattori, Ayana Niwa, and Mengsay Loem, and speaking with the ~100 attendees! Now I'm pumped to organize more events with Kai Arulkumaran and Ilya Kulyatin in the future :)

The new Differential Transformer paper (Tianzhu Ye ++) is really cool: they make attention heads differential, computing two softmax attention maps per head and subtracting one (scaled by a factor λ) from the other. This improves performance by cancelling out attention noise common to both maps, like a humbucking guitar pickup. arxiv.org/abs/2410.05258
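A minimal single-head sketch of the differential attention idea, written in plain Python so it needs no libraries. It computes two softmax attention maps from separate query/key pairs, subtracts the second map scaled by a scalar `lam` (λ), and applies the difference to the values. The function names and the fixed `lam` value are illustrative assumptions; as I understand the paper, λ is learned and each head's output is also normalized, and both of those details are omitted here.

```python
import math


def softmax(row):
    # Numerically stable softmax over one row of scores.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Plain nested-list matrix product: (n x k) @ (k x m) -> (n x m).
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    return [list(col) for col in zip(*m)]


def attention_map(q, k):
    # Standard scaled dot-product attention weights: softmax(Q K^T / sqrt(d)).
    d = len(q[0])
    scores = matmul(q, transpose(k))
    return [softmax([s / math.sqrt(d) for s in row]) for row in scores]


def diff_attention(q1, k1, q2, k2, v, lam):
    # Hypothetical helper: differential map = A1 - lam * A2, output = map @ V.
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    diff = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    return diff, matmul(diff, v)


if __name__ == "__main__":
    q1 = [[1.0, 0.0], [0.0, 1.0]]
    q2 = [[0.0, 1.0], [1.0, 0.0]]
    k = [[1.0, 0.5], [0.2, 1.0], [0.3, 0.3]]
    v = [[1.0], [2.0], [3.0]]
    diff_map, out = diff_attention(q1, k, q2, k, v, lam=0.8)
    print(diff_map)
    print(out)
```

Because every softmax row sums to 1, each row of the differential map sums to 1 − λ: attention mass that both maps assign in common is cancelled, which is the "humbucking" effect described above.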

Had so much fun hosting this panel, “Is Scale Enough?”, on Algorithms Day at the Open Problems for AI Summit in Tokyo 🇯🇵 with Kai Arulkumaran, Emtiyaz Khan, Jad Tarifi, Sam Passaglia, and Masanori Koyama 🤖 We discussed definitions of AGI and how we can advance algorithmic research 🚀