Sameer Segal

@sameersegal

Principal Research Engineer at @MSFTResearch India. Working at the intersection of #GenAI and Code. Previously founder Artoo (artoo.com)

ID: 25781745

http://www.sameersegal.com 22-03-2009 04:38:24

1.1K Tweets

773 Followers

145 Following

Really refreshing to read a post like this from Kyle Corbitt. Incredibly well written throughout, and high-value info. (Embarrassed that it took me so long to find this gem) openpipe.ai/blog/art-e-mai…

#30IndianMindsInAI: At MSR India, kalikabali, senior principal researcher at the facility, has been building inclusive, multilingual and culturally contextual AI systems that empower the most vulnerable in India. By Naini Thaker Accel in India forbesindia.com/article/ai-spe…

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing. Its features are slowly crystallizing: - Natively multimodal

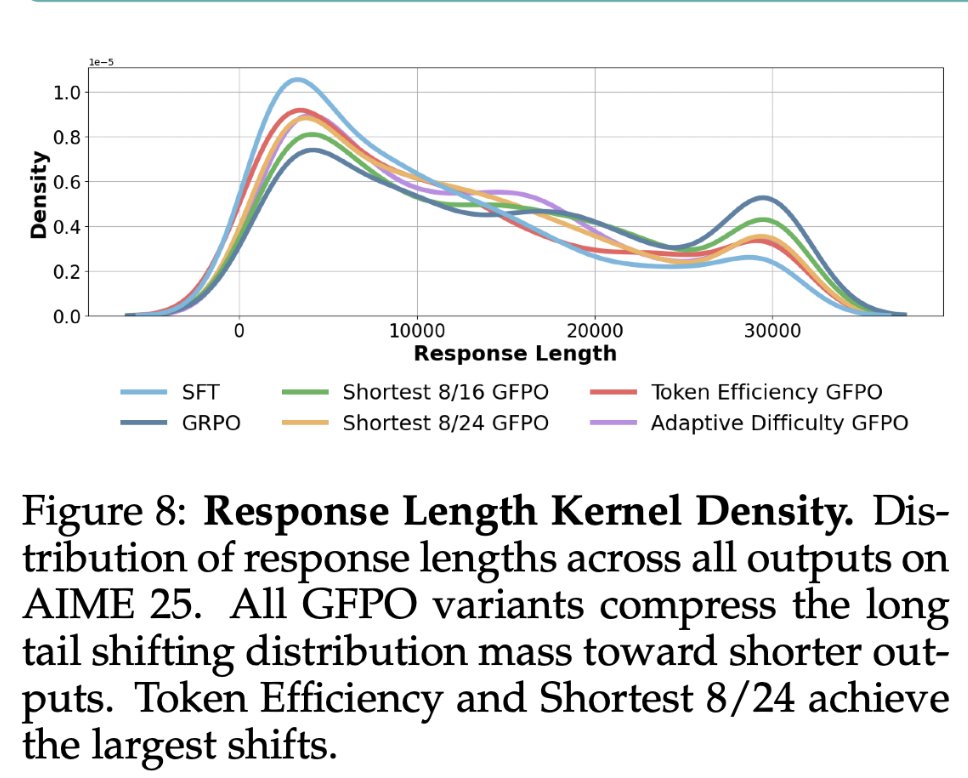

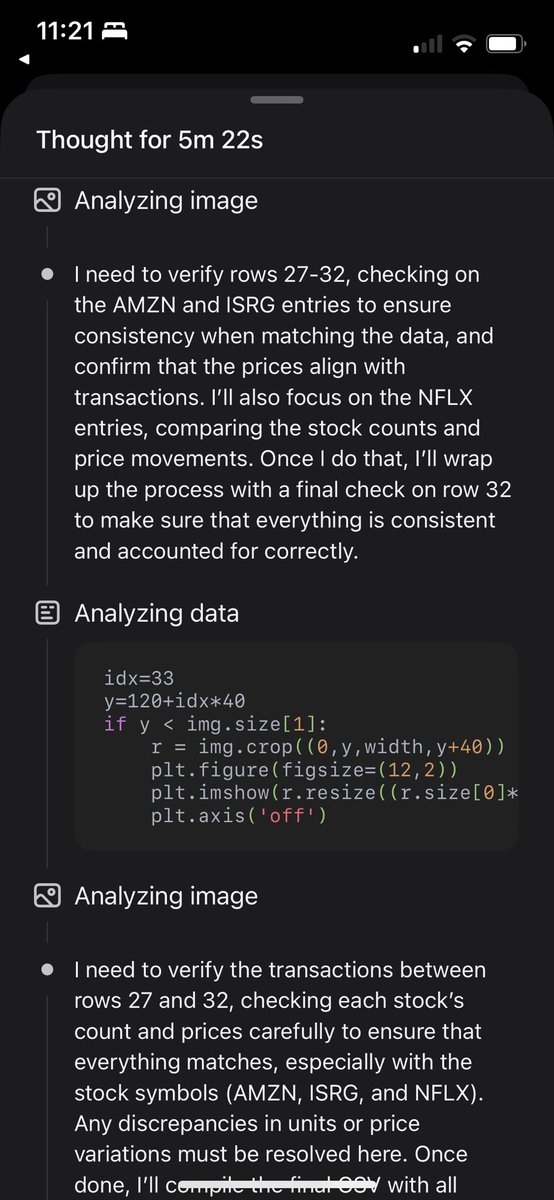

Thinking Less at test-time requires Sampling More at training-time! GFPO, a new, cool, and simple policy optimization algorithm, is coming to your RL Gym tonight, led by Vaish Shrivastava and our MSR group: Group Filtered PO (GFPO) trades off training-time with test-time compute, in order