Sam Ade Jacobs

@samadejacobs

PhD Comp. Science (Texas A&M University), R&D expertise and experience in advanced large-scale big data (graph) analytics, machine (deep) learning, and robotics

ID: 190997720

15-09-2010 11:00:57

196 Tweets

97 Followers

118 Following

Too many great people worked on this to name them all, but here’s a start: Peter Robinson Brian Spears Jay Thiagarajan Rushil Sam Ade Jacobs Frank Di Natale @benjbay with much help and support from LLNL Computing Cyrus Harrison Ian Lee and of course Lawrence Livermore National Laboratory!

Yann LeCun Pedro Domingos Hmm here's some seemingly less opinionated holistic view on the topic. #ChatGPT seems to be one of the better collators of public knowledge but of course not replacing human experts who *created* that training data. Got any views on this?

We have much to learn about LLMs. Compact 1.3 billion parameter phi-1.5 model exhibits surprising capabilities. Microsoft Research

We're rolling out new features and improvements that developers have been asking for: 1. Our new model GPT-4 Turbo supports 128K context and has fresher knowledge than GPT-4. Its input and output tokens are respectively 3× and 2× less expensive than GPT-4. It’s available now to

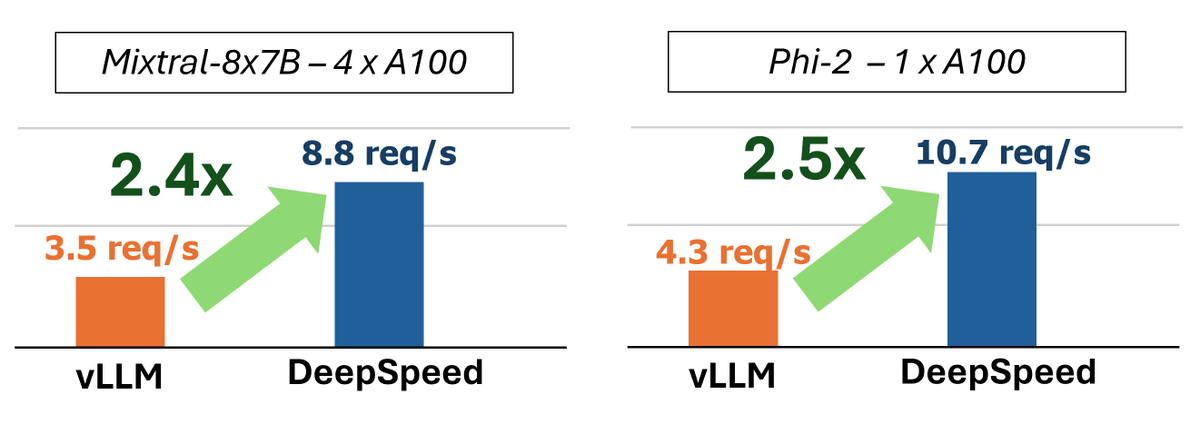

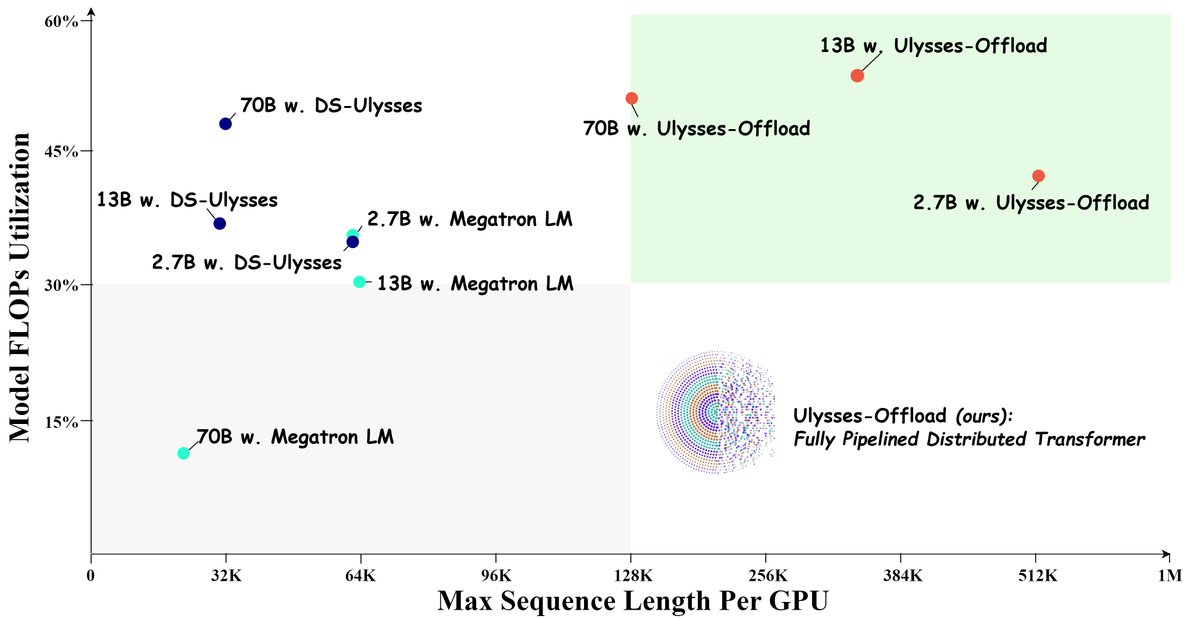

Great to see the amazing DeepSpeed optimizations from Guanhua Wang, Heyang Qin, Masahiro Tanaka, Quentin Anthony, and Sam Ade Jacobs presented by Ammar Ahmad Awan at MUG '24.