Saachi Jain

@saachi_jain_

Safety @ OpenAI

ID: 2889468091

http://saachij.com/

04-11-2014 06:33:50

34 Tweets

738 Followers

395 Following

What's the right way to remove part of an image? We show that typical strategies distort model predictions and introduce bias when debugging models. Good news: leveraging ViTs lets us side-step this bias. Paper: arxiv.org/abs/2204.08945 Blog post: gradientscience.org/missingness

What kinds of fish are hard for a model to classify? Our new method (arxiv.org/abs/2206.14754) automatically identifies and captions model error patterns. The key? Distill failure modes as directions in latent space. W/ Saachi Jain, Hannah Lawrence, A. Moitra. Blog: gradientscience.org/failure-direct…

Does transfer learning = free accuracy? W/ Hadi Salman, Saachi Jain, Andrew Ilyas, Logan Engstrom, and Eric Wong, we identify one potential drawback: *bias transfer*, where biases in pre-trained models can persist after fine-tuning. Paper: arxiv.org/abs/2207.02842 Blog: gradientscience.org/bias-transfer

Does your pretrained model think planes are flying lawnmowers? W/ Saachi Jain, Hadi Salman, Alaa Khaddaj, Eric Wong, and Sam Park, we build a framework for pinpointing the impact of pretraining data on transfer learning. Paper: arxiv.org/abs/2207.05739 Blog: gradientscience.org/data-transfer/

If you are attending ICML, Dimitris Tsipras and I are presenting our paper Combining Diverse Feature Priors (arxiv.org/abs/2110.08220) on Tuesday. Come say hi! Talk: Tues. 7/19, 5:40 PM EDT, Room 327-329 (DL Sequential Models). Poster: Tues. 7/19, 6:30-8:30 PM EDT, Hall E, Poster #508.

Come hear about work on datamodels (arxiv.org/abs/2202.00622) at ICML *tomorrow* in the Deep Learning/Optimization track (Rm 309)! The presentation is at 4:50, with a poster session at 6:30. Joint work with Sam Park, Logan Engstrom, Guillaume Leclerc, and Aleksander Madry.

Group CNNs are used for their explicit inductive bias towards symmetry, but what about the implicit bias from training? With Bobak Kiani, Kristian Georgiev, and A. Dienes, we answer this question for linear group CNNs. Check out today's talk + Poster 520 at ICML! proceedings.mlr.press/v162/lawrence2…

Got the chance to talk at the Simons Institute's AI & Humanity Workshop last week! Presented two ongoing works with Andrew Ilyas, Aleksander Madry, and Manish Raghavan on building trust in AI and the governance of data-driven algorithms. Check out the video here: youtube.com/watch?v=OpFY9D…

Stable Diffusion can visualize and improve model failure modes! Leveraging our method, we can generate examples of hard subpopulations, which can then be used for targeted data augmentation to improve reliability. Blog: gradientscience.org/failure-direct… W/ Saachi Jain, Hannah Lawrence, A. Moitra

Will your model identify a polar bear on the moon? How would you know? Dataset Interfaces let you generate images from your dataset under whatever distribution shift you desire! Paper: arxiv.org/abs/2302.07865 Blog: gradientscience.org/dataset-interf… W/ Josh Vendrow, Saachi Jain, Logan Engstrom

If you are at #ICLR2023, Hannah Lawrence and I are presenting our work on distilling model failures as directions in latent space on *Wednesday*. Come say hi! Talk: 10:30, AD12. Poster: 11:30-1:30, #59. arxiv.org/abs/2206.14754

Excited to be in Vancouver for #CVPR2023! Hadi Salman and I will be presenting our poster on a data-based perspective on transfer learning on Tuesday (10:30-12). If you're around, drop by and say hi! arxiv.org/abs/2207.05739

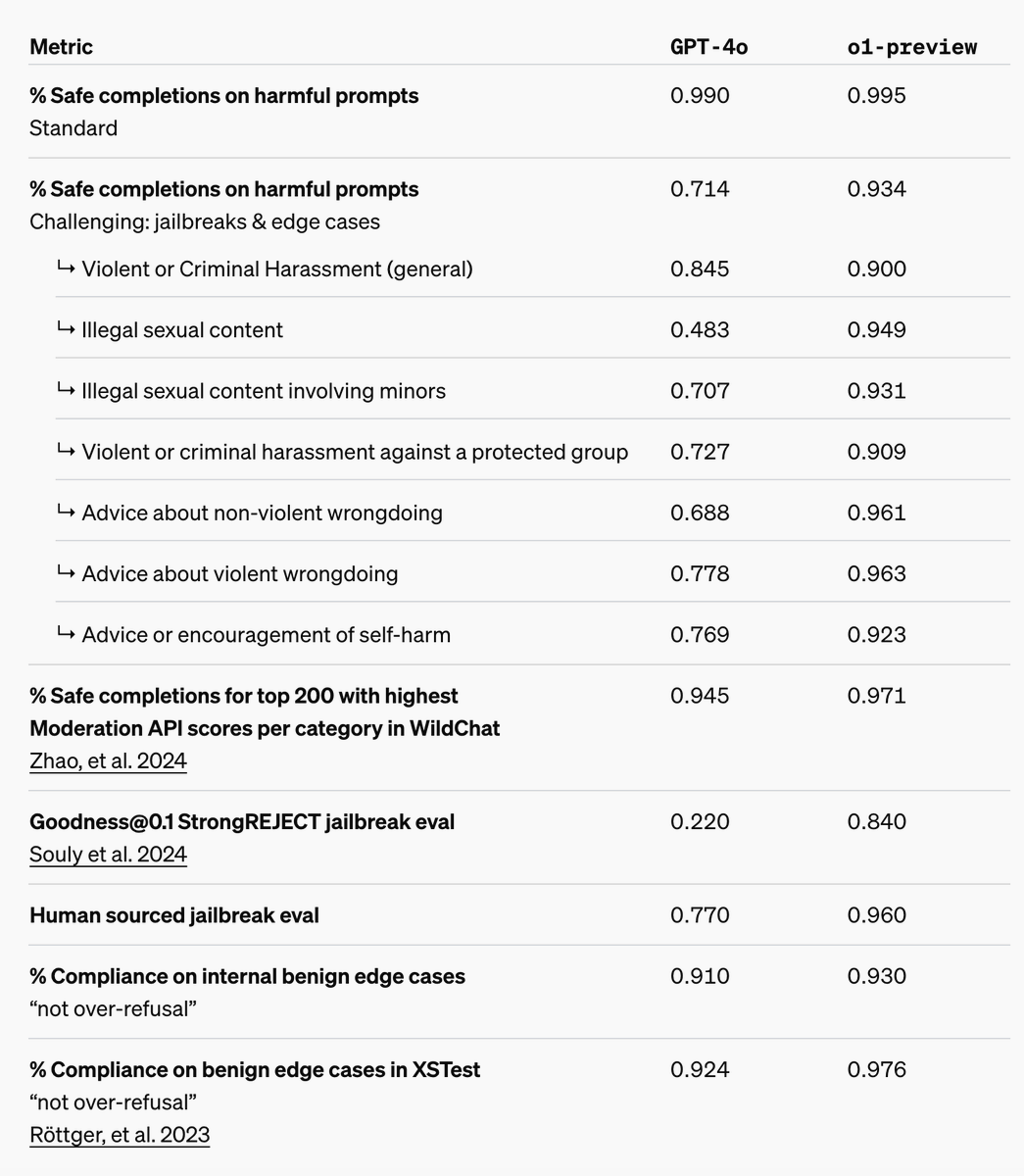

Proud to share our work on Deliberative Alignment openai.com/index/delibera… with a special shoutout to Melody Guan ʕᵔᴥᵔʔ who led this work. Deliberative Alignment trains models to reason over relevant safety and alignment policies to forge their responses.