Simone Scardapane

@s_scardapane

I fall in love with a new #machinelearning topic every month 🙄

Tenure-track Asst. Prof. @SapienzaRoma | Previously @iaml_it @SmarterPodcast | @GoogleDevExpert

https://www.sscardapane.it/ 04-03-2020 14:09:51

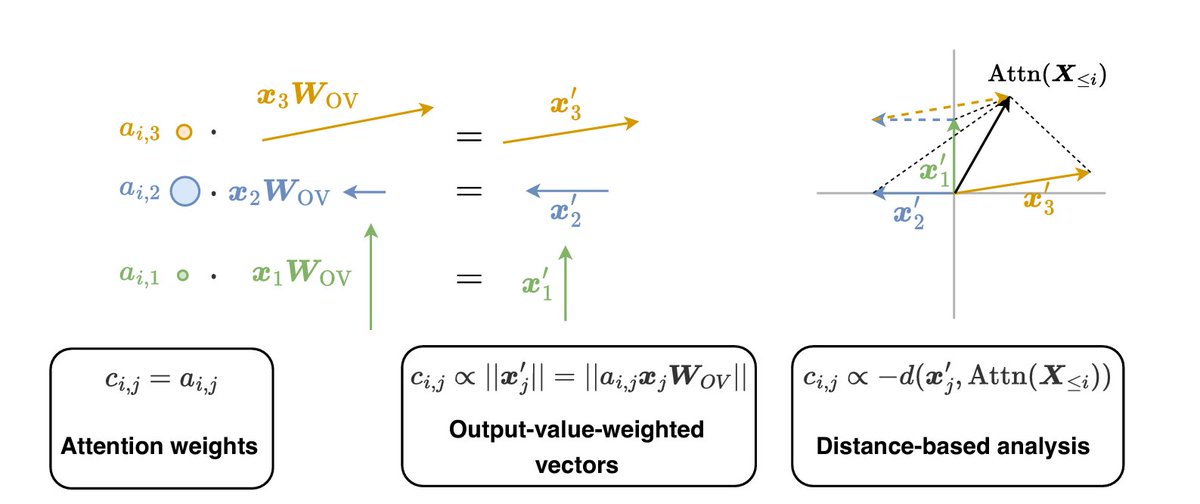

*A Primer on the Inner Workings of Transformer LMs*

by Javier Ferrando, Gabriele Sarti, Arianna Bisazza, Marta R. Costa-jussà

I was waiting for this! Cool comprehensive survey on interpretability methods for LLMs, with a focus on recent techniques (e.g., logit lens).

arxiv.org/abs/2405.00208

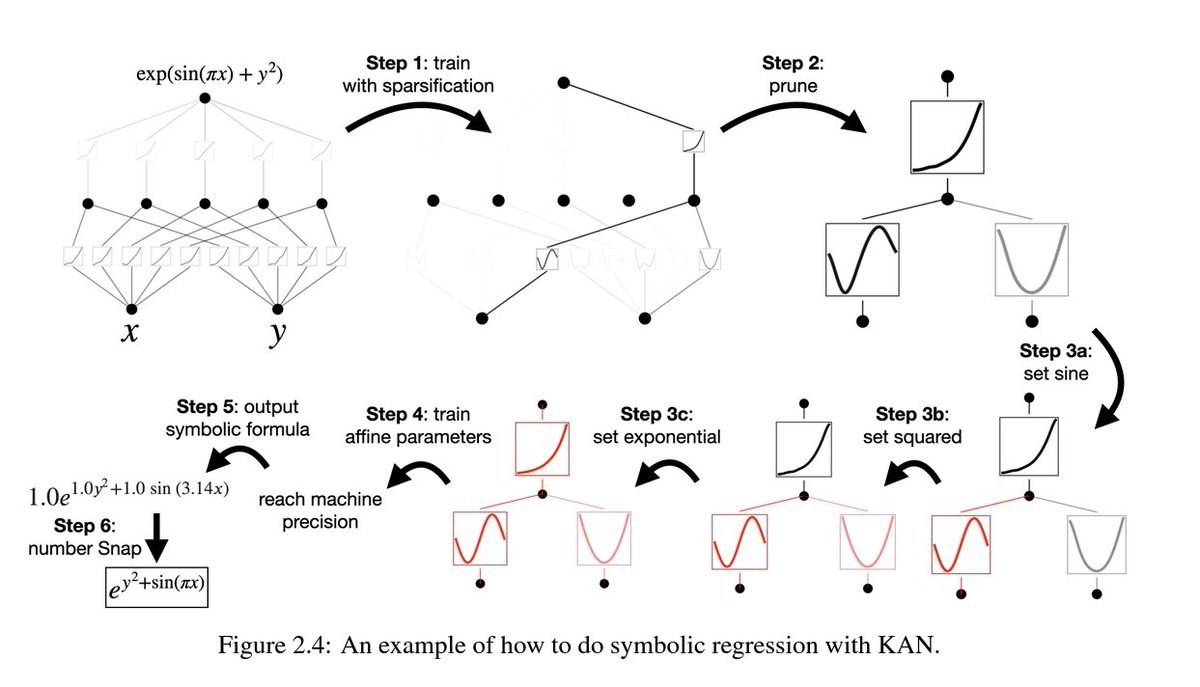

*Kolmogorov-Arnold Networks (KANs)* by Ziming Liu et al.

Since everyone is talking about KANs, I wrote some notes on Notion with a few research questions I find interesting.

It's the first time I've done something like this, so please give me some feedback. 🙃

sscardapane.notion.site/Kolmogorov-Arn…

I am glad to announce that our position paper on Topological Deep Learning has been accepted to ICML!

Congrats to the authors for the great effort!

arxiv.org/abs/2402.08871

Theodore Papamarkou

Tolga Birdal

Nina Miolane

Michael Bronstein

Petar Veličković

Bastian Grossenbacher-Rieck

Justin Curry

Simone Scardapane

*Decomposing and Editing Predictions by Modeling Model Computation*

by Harshay Shah, Aleksander Madry, Andrew Ilyas

Learning to predict the effect of ablating a model component (e.g., an attention head) helps both in understanding the model's behavior and in editing it.

arxiv.org/abs/2404.11534

*REPAIR: REnormalizing Permuted Activations for Interpolation Repair*

by Keller Jordan, Hanie Sedghi, Olga Saukh, Rahim Entezari, Behnam Neyshabur

Correcting the statistics of a layer significantly improves model fusion based on permutations of units.

arxiv.org/abs/2211.08403
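
A rough sketch of the "correcting the statistics" idea for a single unit, under my own assumptions: the activations of the interpolated network are shifted and rescaled so that their mean and standard deviation match the interpolation of the two endpoint networks' statistics. The name `repair_unit` and its arguments are hypothetical, and the actual method works on whole layers of a real network rather than on plain lists.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def repair_unit(
    interp_acts: Sequence[float],
    acts_a: Sequence[float],
    acts_b: Sequence[float],
    alpha: float = 0.5,
    eps: float = 1e-8,
) -> List[float]:
    """Renormalize one unit's activations in the interpolated model.

    interp_acts: activations of the unit in the interpolated network
    acts_a, acts_b: activations of the matching unit in the two endpoints
    alpha: interpolation coefficient (0 -> model A, 1 -> model B)
    """
    # Target statistics: interpolate the endpoint mean and standard deviation.
    target_mean = (1 - alpha) * fmean(acts_a) + alpha * fmean(acts_b)
    target_std = (1 - alpha) * pstdev(acts_a) + alpha * pstdev(acts_b)

    # Current statistics of the interpolated network on the same batch.
    mean = fmean(interp_acts)
    std = pstdev(interp_acts)

    # Standardize, then rescale and shift to the target statistics.
    scale = target_std / (std + eps)
    return [(x - mean) * scale + target_mean for x in interp_acts]
```

Here the interpolated unit has a collapsed variance, and after the correction its statistics sit halfway between those of the two endpoints.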

*A Multimodal Automated Interpretability Agent*

by Tamar Rott Shaham, Sarah Schwettmann, Franklin Wang, Achyuta Rajaram, Evan Hernandez, Jacob Andreas

An experiment in using a multimodal VLM to generate hypotheses that explain a given neuron's behavior.

arxiv.org/abs/2404.14394

*Patchscopes: Inspecting Hidden Representations of LLMs*

by Asma Ghandeharioun, Avi Caciularu, Adam Pearce, Lucas Dixon, Mor Geva

A framework for explaining LLMs via 'patching', where a separate LLM is used to translate the internal embeddings to an explanation.

arxiv.org/abs/2401.06102

*RHO-1: Not All Tokens Are What You Need*

by Zhibin Gou, Weizhu Chen

A small training phase on curated data helps separate useful tokens from harmful ones for language modeling.

arxiv.org/abs/2404.07965
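
A minimal sketch of how such token filtering could work, assuming per-token losses are already available from the model being trained and from a reference model trained on the curated data. Tokens are ranked by excess loss (training loss minus reference loss) and only the top fraction contributes to the objective. The names `select_tokens`, `selective_loss`, and `keep_ratio` are hypothetical, not taken from the paper's code.

```python
from typing import List, Sequence


def select_tokens(
    train_losses: Sequence[float],
    ref_losses: Sequence[float],
    keep_ratio: float,
) -> List[bool]:
    """Return a mask marking the tokens with the highest excess loss."""
    if len(train_losses) != len(ref_losses):
        raise ValueError("loss sequences must have the same length")
    n = len(train_losses)
    # Excess loss: how much worse the current model is than the reference.
    excess = [t - r for t, r in zip(train_losses, ref_losses)]
    # Keep at least one token, at most all of them.
    k = min(n, max(1, int(n * keep_ratio)))
    ranked = sorted(range(n), key=lambda i: excess[i], reverse=True)
    keep = set(ranked[:k])
    return [i in keep for i in range(n)]


def selective_loss(train_losses: Sequence[float], mask: Sequence[bool]) -> float:
    """Average the training loss over the selected tokens only."""
    kept = [loss for loss, m in zip(train_losses, mask) if m]
    return sum(kept) / len(kept) if kept else 0.0
```

Tokens that the reference model also finds hard (low excess loss) are dropped from the objective, so they do not drive the gradient.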

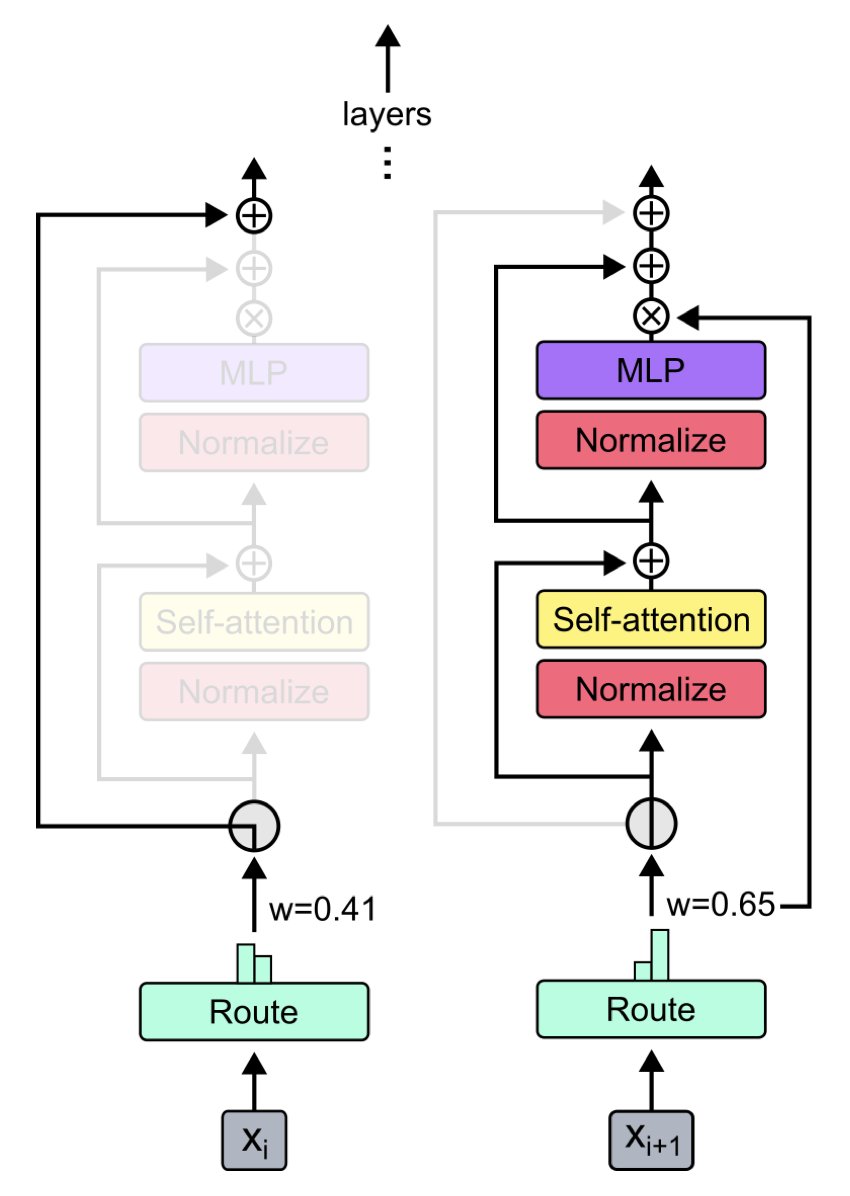

*DiPaCo: Distributed Path Composition*

by Arthur Douillard, Qixuan Feng, Andrei A. Rusu, Ionel Gog, Marc'Aurelio Ranzato

MoE-like models may be fundamental for transcontinental training of large models, by sharding data *and the model's paths* across locations.

arxiv.org/abs/2403.10616

Need some Arctic in your life?

We have open PhD and postdoc positions on relational graph and temporal ML for energy analytics! 🔥

Top-tier research w/ competitive salaries, hosted in the beautiful UiT in Norway & supervised by Filippo Maria Bianchi.

All details here -> en.uit.no/project/relay

*Mixture-of-Depths: Dynamically allocating compute in transformer-based LMs*

by Sam Ritter, Blake Richards, Adam Santoro

A variant of MoEs having only a single expert per block which can be either skipped or applied per-token up to some given capacity.

arxiv.org/abs/2404.02258
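
The skip-or-apply rule can be sketched as follows, under simplifying assumptions: each token gets a scalar router score, only the `capacity` highest-scoring tokens go through the block, and the rest pass along the residual path unchanged. `route_tokens`, `block`, and `capacity` are hypothetical names, and scaling the block output by the router score is my simplification so that the router would receive a gradient in a real model.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def route_tokens(
    tokens: Sequence[Vector],
    scores: Sequence[float],
    block: Callable[[Vector], Vector],
    capacity: int,
) -> List[Vector]:
    """Apply `block` to the top-`capacity` tokens; skip all the others."""
    if len(tokens) != len(scores):
        raise ValueError("need exactly one router score per token")
    if capacity < 0:
        raise ValueError("capacity must be non-negative")

    # Rank token positions by router score and keep the best `capacity`.
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    selected = set(ranked[:capacity])

    outputs: List[Vector] = []
    for i, x in enumerate(tokens):
        if i in selected:
            # Residual update, weighted by the router score.
            update = block(x)
            outputs.append([xi + scores[i] * ui for xi, ui in zip(x, update)])
        else:
            # Skipped token: identity through the residual connection.
            outputs.append(list(x))
    return outputs
```

With `capacity` equal to the sequence length this reduces to a standard residual block (up to the score weighting), and with `capacity=0` the block is skipped entirely.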

*Equivariant Adaptation of Large Pretrained Models*

by Arnab, Siba Smarak Panigrahi, Oumar Kaba, Sai Rajeswar

A technique to make pre-trained models robust to learned symmetries by combining them with a 'canonicalization' network.

arxiv.org/abs/2310.01647

*Variational Learning is Effective for Large Deep Networks*

by Nico Daheim, Emtiyaz Khan, Gian Maria Marconi, Peter Nickl, Rio Yokota, Thomas Möllenhoff

A variant of Adam provides a scalable algorithm to train networks via variational inference.

arxiv.org/abs/2402.17641

*Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference*

by Piotr Nawrot, Adrian Lancucki, Edoardo Ponti

A dynamic KV cache for LLM generation that can be trained to satisfy a given memory budget.

arxiv.org/abs/2403.09636

*Vision Transformer (ViT) Prisma Library*

by Sonia Joseph, Praneet Suresh, Yash Vadi

Simple library to perform basic 'mechanistic interpretability' visualizations such as the logit lens on vision models (ViTs, CLIP).

github.com/soniajoseph/Vi…