Rahul Somani

@rsomani95

Co-Founder / Leading ML @ ozu.ai

Exploring how Machine Learning can _augment_ human creativity, especially filmmaking.

ID: 4375115294

https://rsomani95.github.io 27-11-2015 07:20:11

467 Tweets

438 Followers

1.1K Following

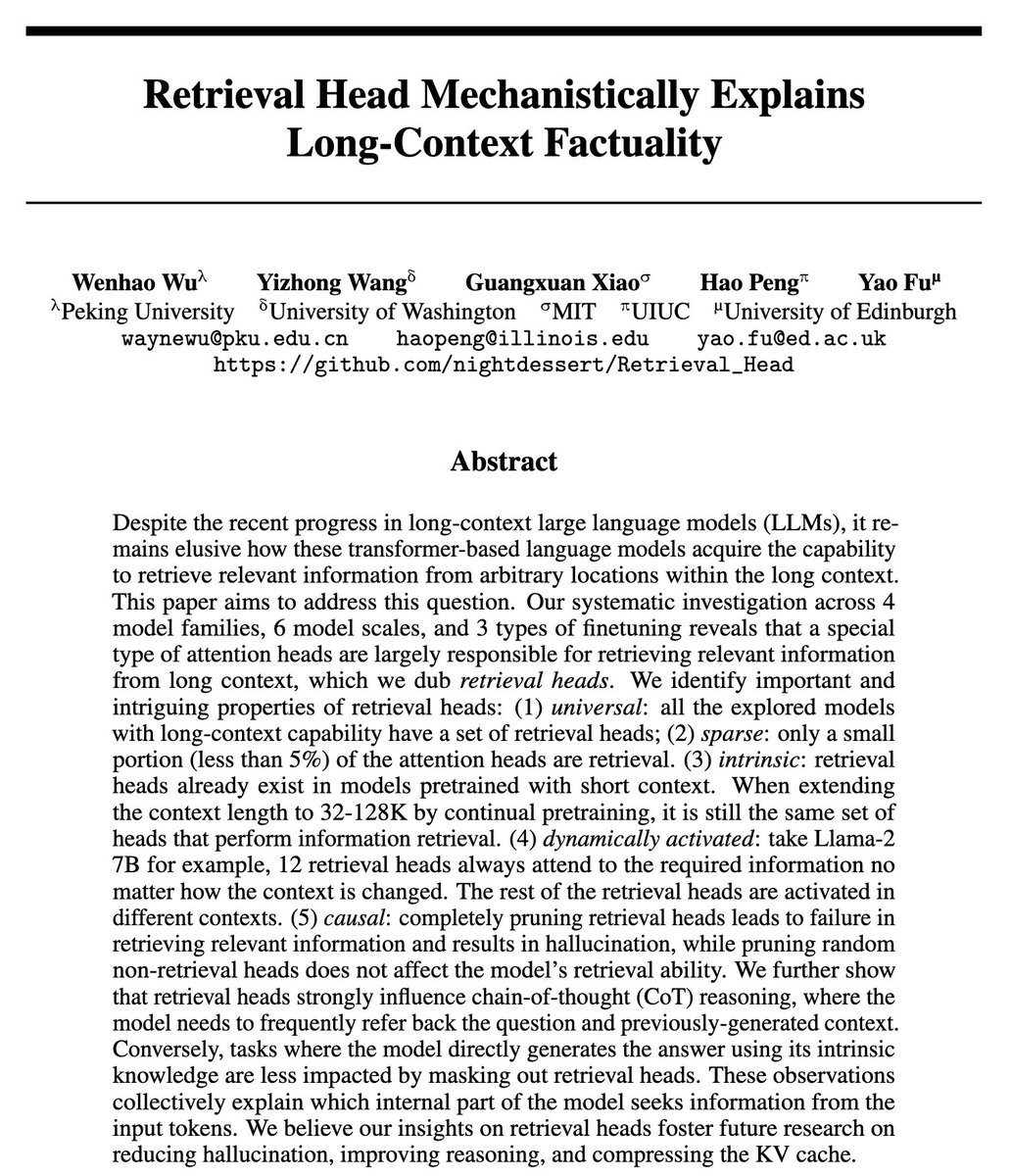

Two new AI releases by Apple today:

🧚‍♀️ OpenELM, a set of small (270M-3B) efficient language models. Weights on the Hub:

Pretrained: huggingface.co/collections/ap…

Instruct: huggingface.co/collections/ap…

👷‍♀️ CoreNet, a training library used to train OpenELM: github.com/apple/corenet