Roy Schwartz

@royschwartznlp

Senior Lecturer at @CseHuji. #NLPROC

ID: 4883662141

https://schwartz-lab-huji.github.io/ 09-02-2016 15:30:51

246 Tweets

2.2K Followers

379 Following

Which is better, running a 70B model once, or a 7B model 10 times? The answer might be surprising! Presenting our new Conference on Language Modeling paper: "The Larger the Better? Improved LLM Code-Generation via Budget Reallocation" arxiv.org/abs/2404.00725 1/n

What should the ACL peer review process be like in the future? Please cast your views in this survey: aclweb.org/portal/content… by 4th Nov 2024 #NLProc ACLRollingReview

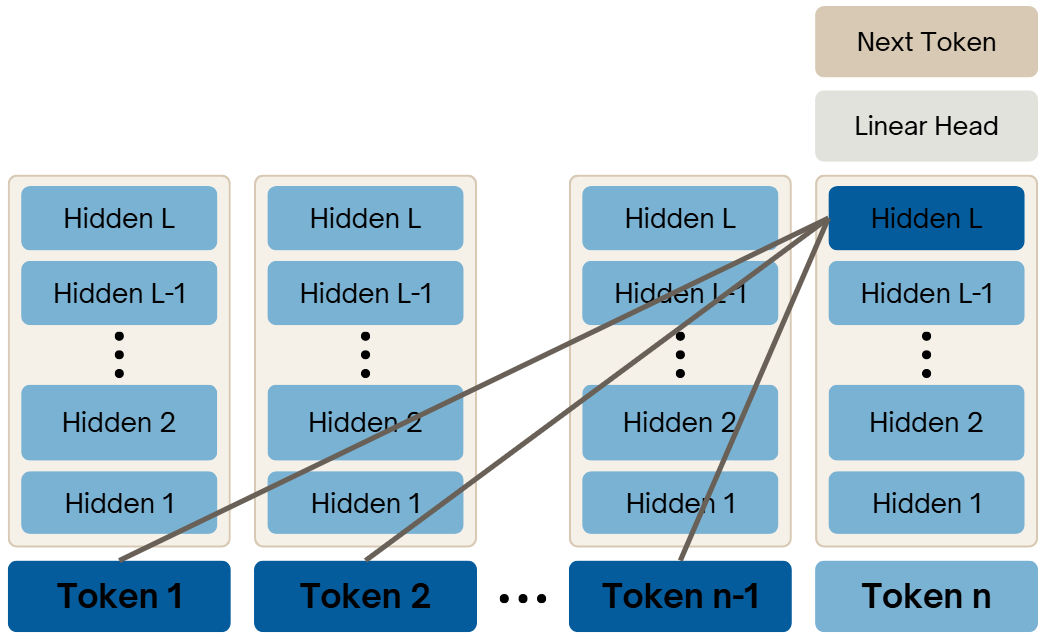

In which layers does information flow from previous tokens to the current token? Presenting our new BlackboxNLP paper: “Attend First, Consolidate Later: On the Importance of Attention in Different LLM Layers” arxiv.org/abs/2409.03621 1/n

🚀 New Paper Drop! 🚀 “On Pruning SSM LLMs” – We check the prunability of MAMBA🐍 based LLMs. We also release Smol2-Mamba-1.9B, a MAMBA based LLM distilled from Smol2-1.7B on 🤗: [huggingface.co/schwartz-lab/S…] 📖 Read more: [arxiv.org/abs/2502.18886] Roy Schwartz Michael Hassid

![Tamer (@tamerghattas911) on Twitter photo](https://pbs.twimg.com/media/Gk80AwZWYAAeiI0.jpg)

✨ Ever tried generating an image from a prompt but ended up with unexpected outputs? Check out our new paper #FollowTheFlow - tackling T2I issues like bias, failed binding, and leakage from the textual encoding side! 💼🔍 arxiv.org/pdf/2504.01137 guykap12.github.io/guykap12.githu… 🧵[1/7]

![Guy Kaplan ✈️🇸🇬 ICLR2025 (@gkaplan38844) on Twitter photo 📢Paper release📢: 🔍 Ever wondered how LLMs understand words when all they see are tokens? 🧠 Our latest study uncovers how LLMs reconstruct full words from sub-word tokens, even when misspelled or previously unseen. arxiv.org/pdf/2410.05864 (preprint) 👀 👇 [1/7]](https://pbs.twimg.com/media/GZ1Pl5tW8BI-ZoM.jpg)