Robert Shaw

@robertshaw21

@redhat | @neuralmagic | @vllm_project

ID: 1524186223102676992

11-05-2022 00:35:04

745 Tweets

455 Followers

357 Following

Our first official vLLM Meetup is coming to Europe on Nov 6! 🇨🇭 Meet vLLM committers Michael Goin, Tyler Michael Smith, Thomas Parnell, + speakers from Red Hat AI, IBM, Mistral AI. Topics: vLLM updates, quantization, Mistral+vLLM, hybrid models, distributed inference luma.com/0gls27kb

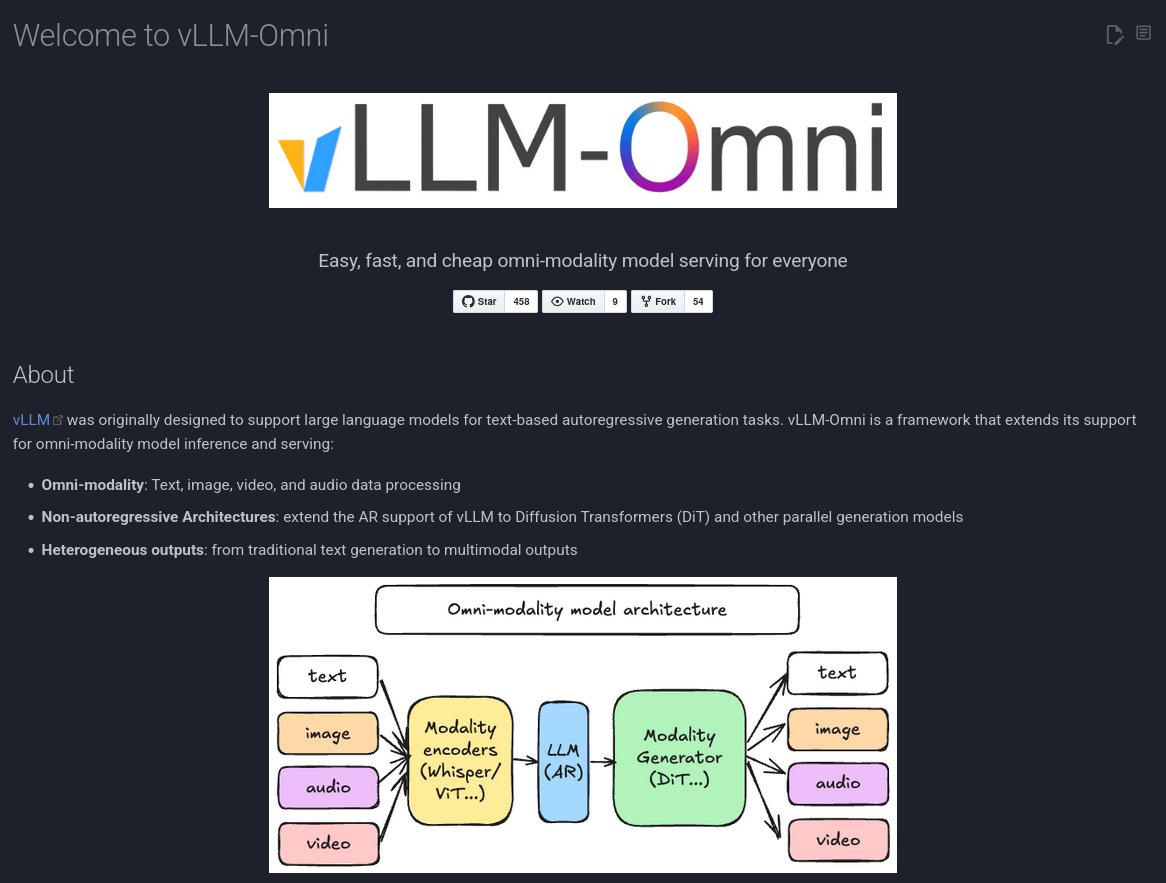

🚀 Excited to team up with NVIDIA AI Developer to bring Nemotron Nano 2 VL to vLLM: a multimodal model powered by a hybrid Transformer–Mamba language backbone, built for video understanding and document intelligence ✨ Full post here 👇 blog.vllm.ai/2025/10/31/run…

Love the retrospective on disaggregated inference. If you're wondering where the technique called "PD" (prefill/decode disaggregation) in vLLM comes from, read on! Thank you Hao AI Lab for pushing the idea forward.

vLLM is proud to support Prime Intellect's post-training of the INTELLECT-3 model 🥰

🎉 Congratulations to the Mistral team on launching the Mistral 3 family! We’re proud to share that Mistral AI, NVIDIA AI Developer, Red Hat AI, and vLLM worked closely together to deliver full Day-0 support for the entire Mistral 3 lineup. This collaboration enabled: • NVFP4