Yingru Li

@richardyrli

AI, RL, LLMs, Data Science | PhD@CUHK | ex-intern @MSFTResearch @TencentGlobal | On Job Market

ID: 2152232932

https://richardli.xyz

25-10-2013 07:28:08

549 Tweets

414 Followers

1.1K Following

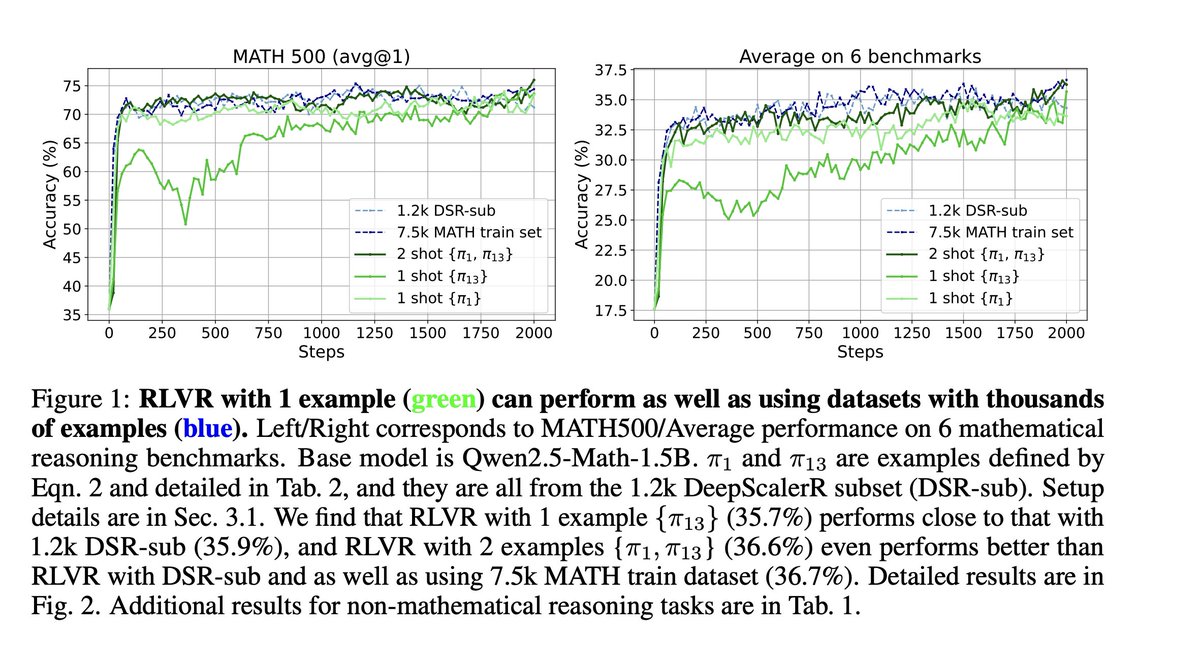

Excited to share our work led by Yiping Wang: RLVR with only ONE training example can boost accuracy on MATH500 by 37%.

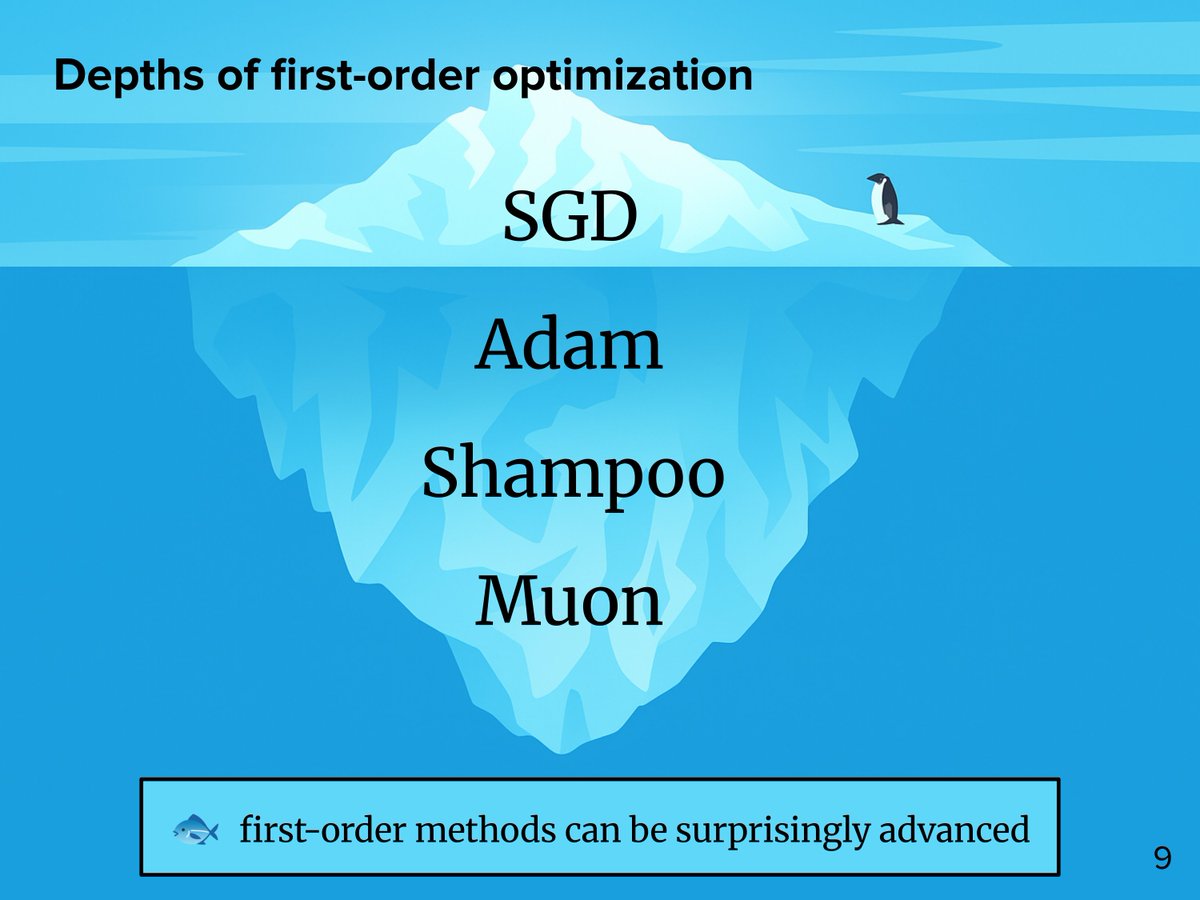

I was really grateful to have the chance to speak at Cohere Labs and ML Collective last week. My goal was to give the talk that would have been most helpful to me as a first-year grad student interested in neural network optimization. Sharing some info about the talk here... (1/6)

Padding a transformer’s input with blank tokens (...) is a simple form of test-time compute. Can it increase the computational power of LLMs? 👀 New work with Ashish Sabharwal addresses this with *exact characterizations* of the expressive power of transformers with padding 🧵

Xinyu Zhu (@tianhongzxy):

🔥The debate’s been wild: How does the reward in RLVR actually improve LLM reasoning?🤔

🚀Introducing our new paper👇

💡TL;DR: Just penalizing incorrect rollouts❌ (no positive reward needed) can boost LLM reasoning, sometimes even better than PPO/GRPO!

🧵[1/n]

![Xinyu Zhu (@tianhongzxy) on Twitter photo](https://pbs.twimg.com/media/GsdM6V9b0AE7zx8.png)
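To make "just penalizing incorrect rollouts" concrete, here is a minimal sketch. It assumes a plain REINFORCE-style surrogate loss over sampled rollouts with binary verifier outcomes, and it leaves out the baselines, advantage normalization, and clipping that PPO/GRPO use. The helper names `rollout_rewards` and `reinforce_loss` are hypothetical, and this is not the paper's implementation. It compares a standard +1/-1 reward with a negative-only reward: correct rollouts get 0, and incorrect ones get -1.

```python
from typing import List

# Hypothetical helpers for illustration only; not the paper's code.

def rollout_rewards(correct: List[bool], mode: str = "negative_only") -> List[float]:
    """Assign a scalar reward to each sampled rollout from a binary verifier outcome.

    "binary":        +1 for correct, -1 for incorrect (reward and penalty).
    "negative_only":  0 for correct, -1 for incorrect (penalize mistakes only).
    """
    if mode == "binary":
        return [1.0 if c else -1.0 for c in correct]
    if mode == "negative_only":
        return [0.0 if c else -1.0 for c in correct]
    raise ValueError(f"unknown mode: {mode}")


def reinforce_loss(logprobs: List[float], rewards: List[float]) -> float:
    """Plain REINFORCE surrogate: -mean(reward * log p(rollout)).

    With negative-only rewards, correct rollouts contribute nothing and each
    incorrect rollout contributes +log p / N, so minimizing this loss lowers
    the probability of incorrect rollouts without directly raising correct ones.
    """
    if len(logprobs) != len(rewards):
        raise ValueError("logprobs and rewards must have the same length")
    return -sum(r * lp for r, lp in zip(rewards, logprobs)) / len(rewards)


if __name__ == "__main__":
    correct = [True, False, False, True]   # verifier outcomes for 4 rollouts
    logprobs = [-1.2, -0.5, -2.0, -0.8]    # made-up sequence log-probabilities
    print(reinforce_loss(logprobs, rollout_rewards(correct)))  # → -0.625
```

In this toy case, only the two incorrect rollouts contribute to the negative-only loss. Its gradient therefore only pushes their log-probabilities down, and the probability mass they lose is implicitly redistributed to other outputs.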