Hariharan Ramasubramanian

@rf_hari

PhD student at @CarnegieMellon | Prev. Research Intern at @Applied4Tech, @argonne, @genentech

ID: 1432864325677498373

01-09-2021 00:34:23

14 Tweets

70 Followers

392 Following

Super excited to finally release our "Hitchhiker's Guide to Geometric GNNs for 3D Atomic Systems"!🤗 Link: arxiv.org/pdf/2312.07511… Written with Chaitanya K. Joshi, Simon Mathis, Victor Schmidt 💀🐔, Santiago Miret, Fragkiskos Malliaros, Taco Cohen, Pietro Lio, Yoshua Bengio, and Michael Bronstein 😍 See thread below 👇 (1/8)

📢Mingda Li and colleagues propose a virtual node graph neural network to enable the prediction of materials properties with variable output dimensions. MIT School of Engineering, MIT Science, MIT Chemistry, MIT EECS, MIT Nuclear Science and Engineering, Oak Ridge Lab nature.com/articles/s4358… ➡️rdcu.be/dNzJh

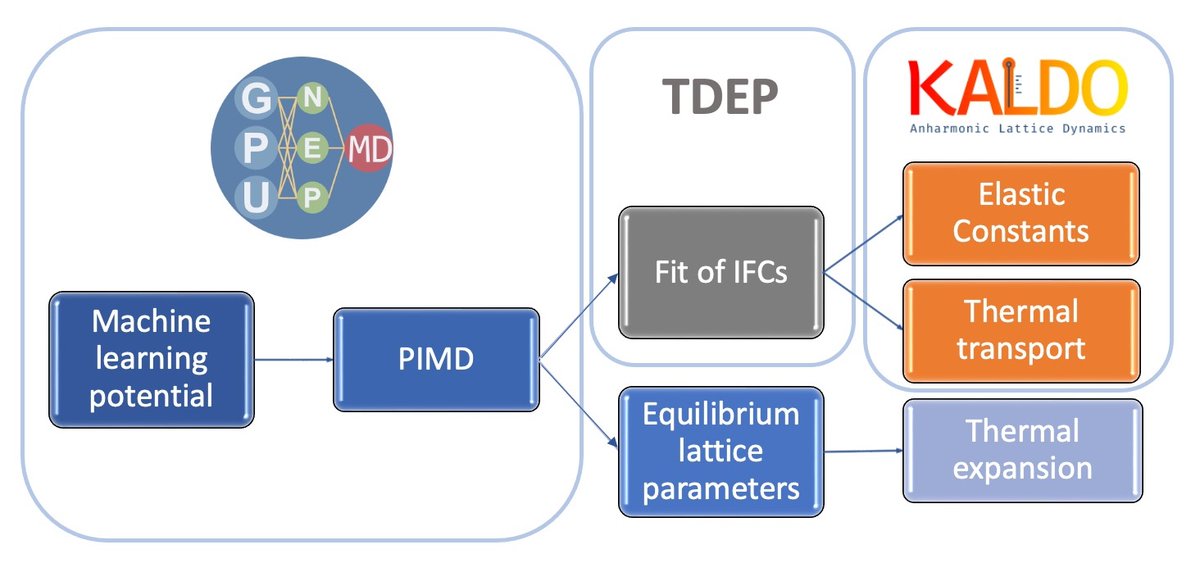

We have written a tutorial manuscript about using GPUMD, TDEP, and kALDo together to get accurate temperature-dependent thermal conductivity and elastic properties. Great work by Dylan Folkner (first paper!), Zekun Chen, Florian Knoop, and Giuseppe Barbalinardo. Nanotheory @UCD

Our new paper in Nature Machine Intelligence tells a story about how, and why, ML methods for solving PDEs do not work as well as advertised. We find that two reproducibility issues are widespread. As a result, we conclude that ML-for-PDE solving has reached overly optimistic conclusions.