Reyna Abhyankar

@reyna_abhyankar

I like computers

ID: 1164413313800785920

22-08-2019 05:45:50

12 Tweets

19 Followers

16 Following

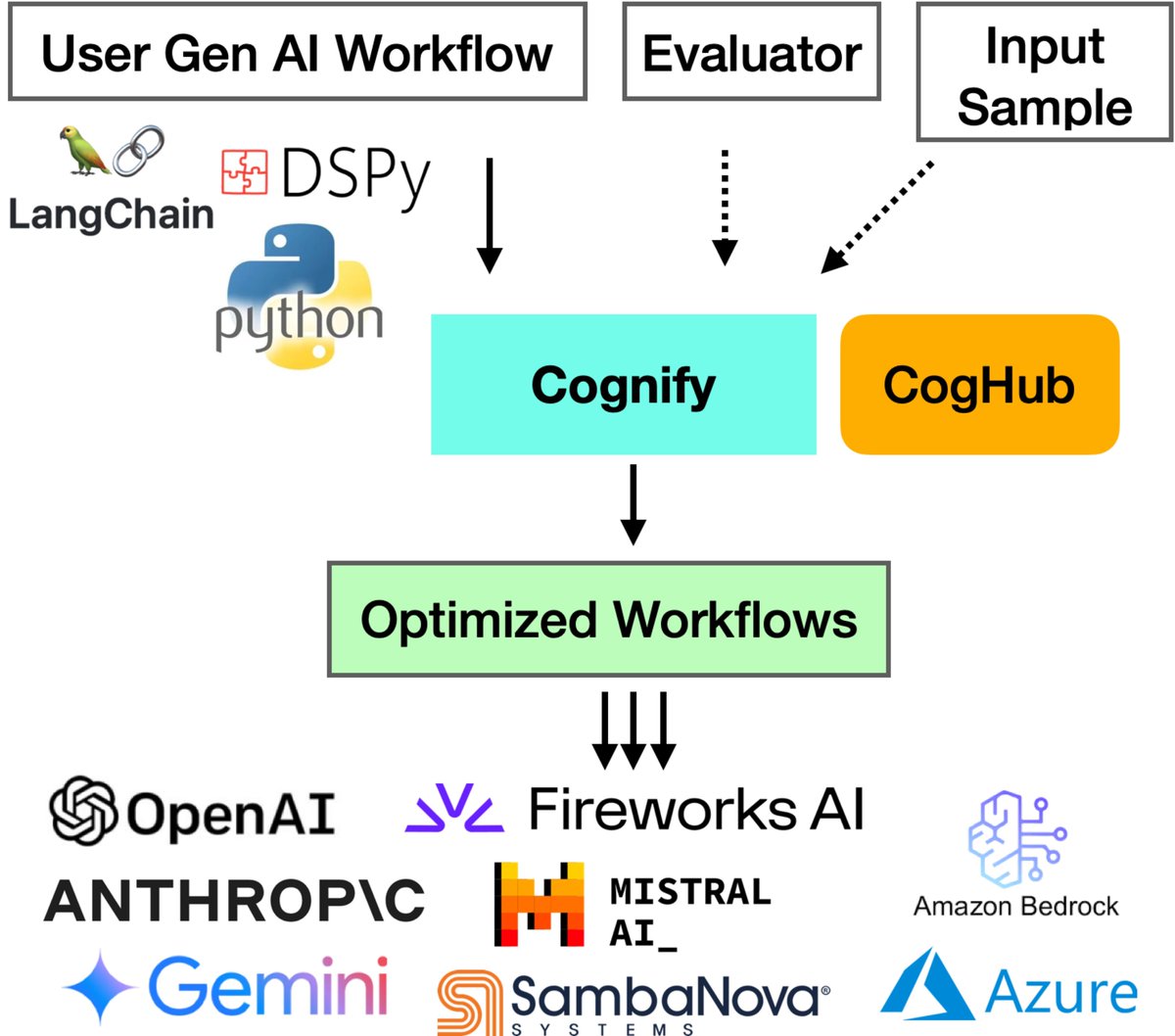

Today, LLMs are constantly being augmented with tools, agents, models, RAG, etc. We built InferCept [ICML'24], the first serving framework designed for augmented LLMs. InferCept sustains a 1.6x-2x higher serving load than SOTA LLM serving systems. #AugLLM mlsys.wuklab.io/posts/infercep…