PromptLayer

@promptlayer

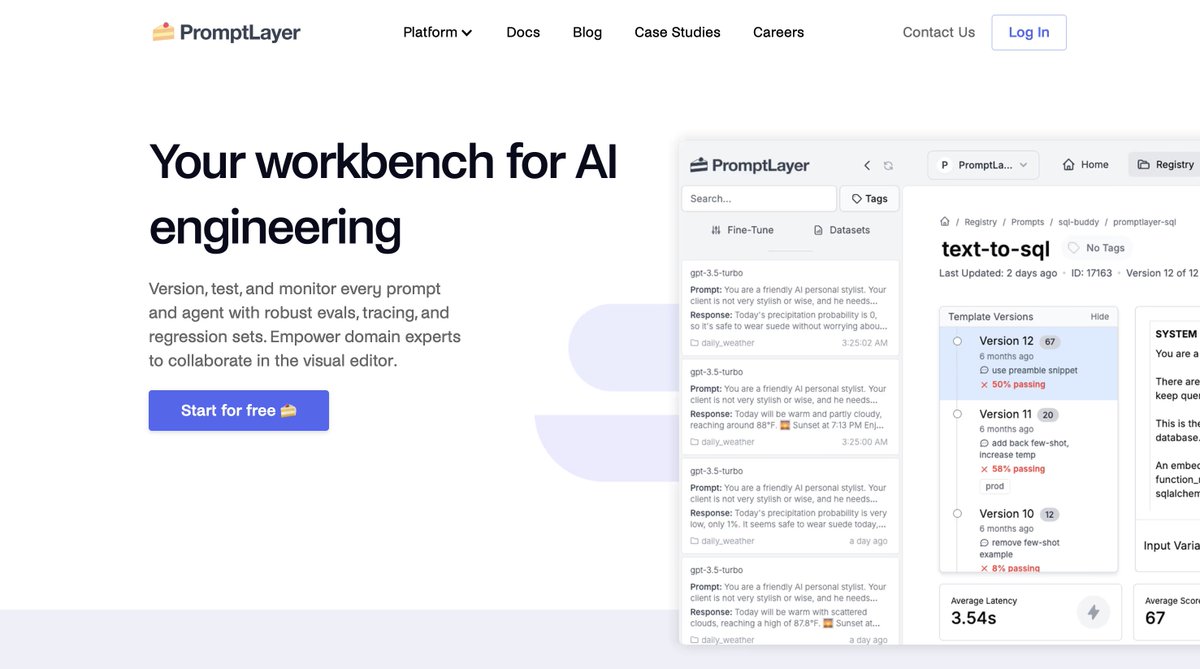

The first platform for prompt engineering. Collaborate, manage, and evaluate prompts 🍰

ID: 1507178263294095370

https://promptlayer.com/ 25-03-2022 02:11:28

532 Tweets

4.4K Followers

267 Following

🛠️ Tool of the Day: PromptLayer

What it does:
→ Tracks every GPT request + response
→ Lets you debug / compare / improve
→ Works with OpenAI + Claude + Groq etc.

Why it matters: You can’t improve what you don’t track

Bonus:
→ Auto-tags outputs that underperform
→