Praneet

@praneet_suresh_

ML PhD @Mila_Quebec

ID: 3780161412

https://praneetneuro.github.io

04-10-2015 10:47:53

72 Tweets

82 Followers

230 Following

Neel Nanda FWIW I disagree that sparse probing experiments test the "representing concepts crisply" and "identify a complete decomposition" claims about SAEs. In other words, I expect that—even if SAEs perfectly decomposed LLM activations into human-understandable latents with nothing

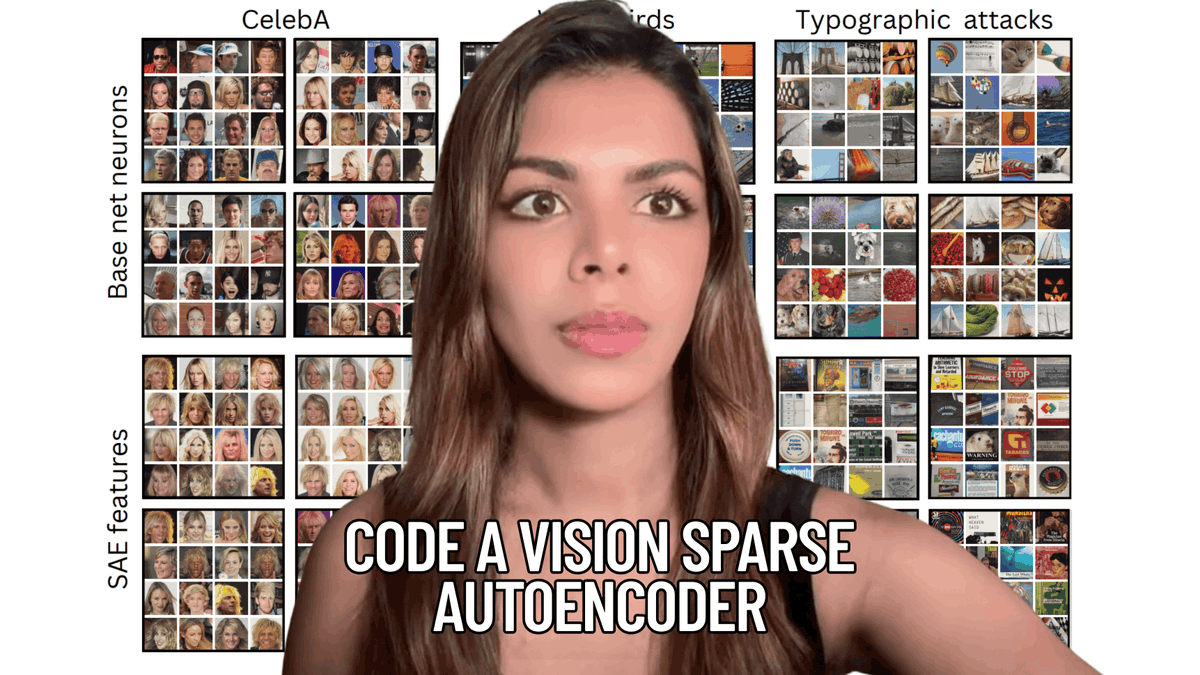

We visualized the features of 16 SAEs trained on CLIP in collaboration between Fraunhofer HHI and Mila - Institut québécois d'IA! Search thousands of interpretable CLIP features in our vision atlas, with autointerp labels, & scores like clarity and polysemanticity. Some fun features in thread:

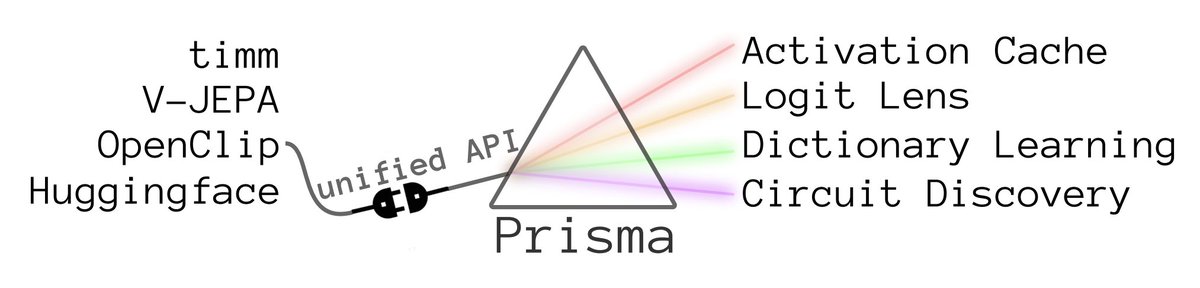

Our paper Prisma: An Open Source Toolkit for Mechanistic Interpretability in Vision and Video received an Oral at the Mechanistic Interpretability for Vision Workshop at CVPR 2025! 🎉 We’ll be in Nashville next week. Come say hi 👋 #CVPR2025

We are releasing:
- 80+ SAEs covering every layer of CLIP and DINO, plus CLIP transcoders
- Transformer-Lens-style circuit code for 100+ models, including CLIP, DINO, and video transformers from Hugging Face & OpenCLIP
- Interactive notebooks for training and evaluating sparse

Some of the Prisma SAE features get odd in the best way: "twin objects," "outlier/chosen object," and Oktoberfest-related concepts. We visualize the features here, in collaboration with Fraunhofer HHI: semanticlens.hhi-research-insights.de/umap-view