Nicholas Krämer

@pnkraemer

Probabilistic numerics, state-space models, differentiable linear algebra, and of course a healthy dose of figure-making.

ID: 1235551063782158336

https://pnkraemer.github.io 05-03-2020 13:01:48

115 Tweets

543 Followers

337 Following

Did you know Vector’s new Postdoc Fellow Agustinus Kristiadi moved all the way from Tübingen, Germany, to Toronto this past month? Learn more about why he decided to join Vector in his full YouTube interview here🧵👉 youtu.be/ql68hhFZ6vs #VectorResearcherFridays

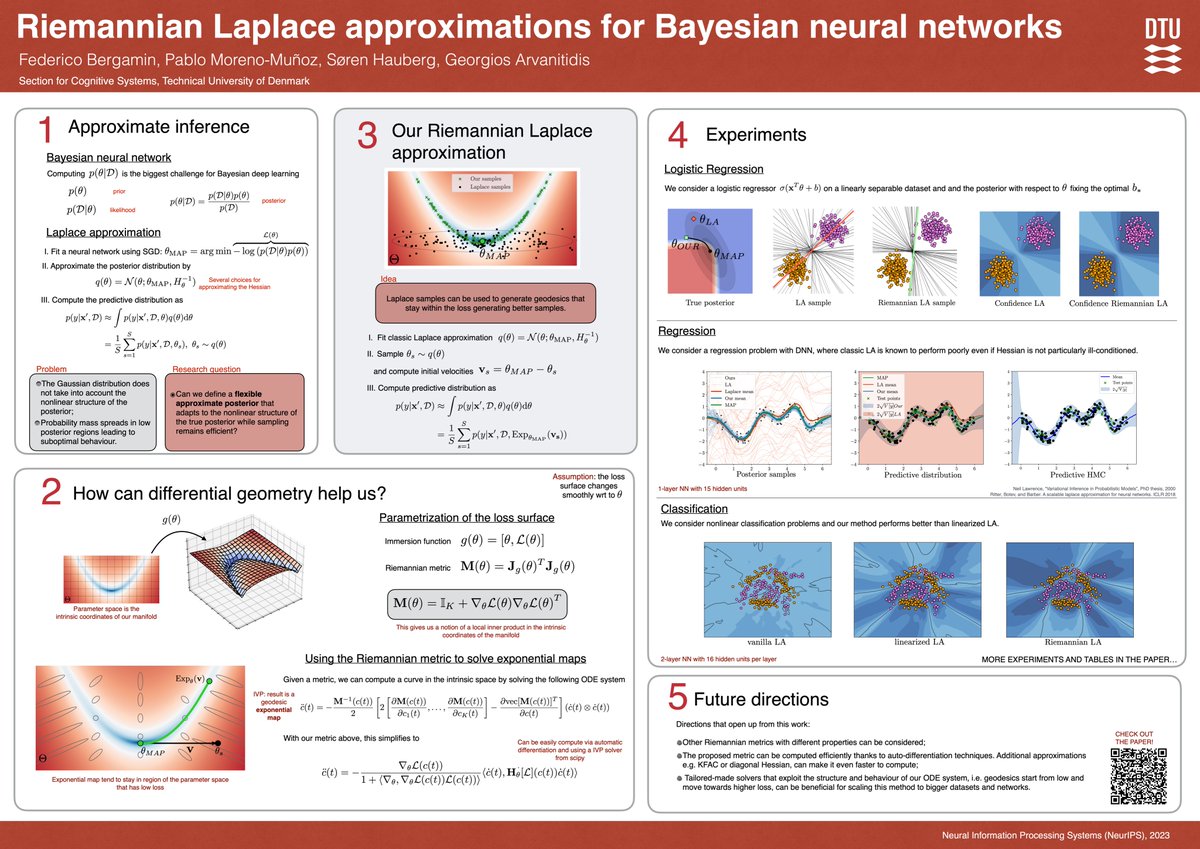

On my way to #NeurIPS23 for the first time. I’ll be there presenting our work "Riemannian Laplace approximations for Bayesian Neural Networks", done together with Pablo Moreno-Muñoz, Søren Hauberg, and Georgios Arvanitidis. I’ll be at poster #1223 on Wednesday from 5 pm (Session 4).

Excited to share that our paper on reducing variance in diffusion models with control variates has been published at the SPIGM workshop at ICML. Come check it out! Thanks a lot to Will Grathwohl, Jes Frellsen, @carlhenrikek, and Michael Riis for the collaboration! openreview.net/pdf?id=YqFIzHA…

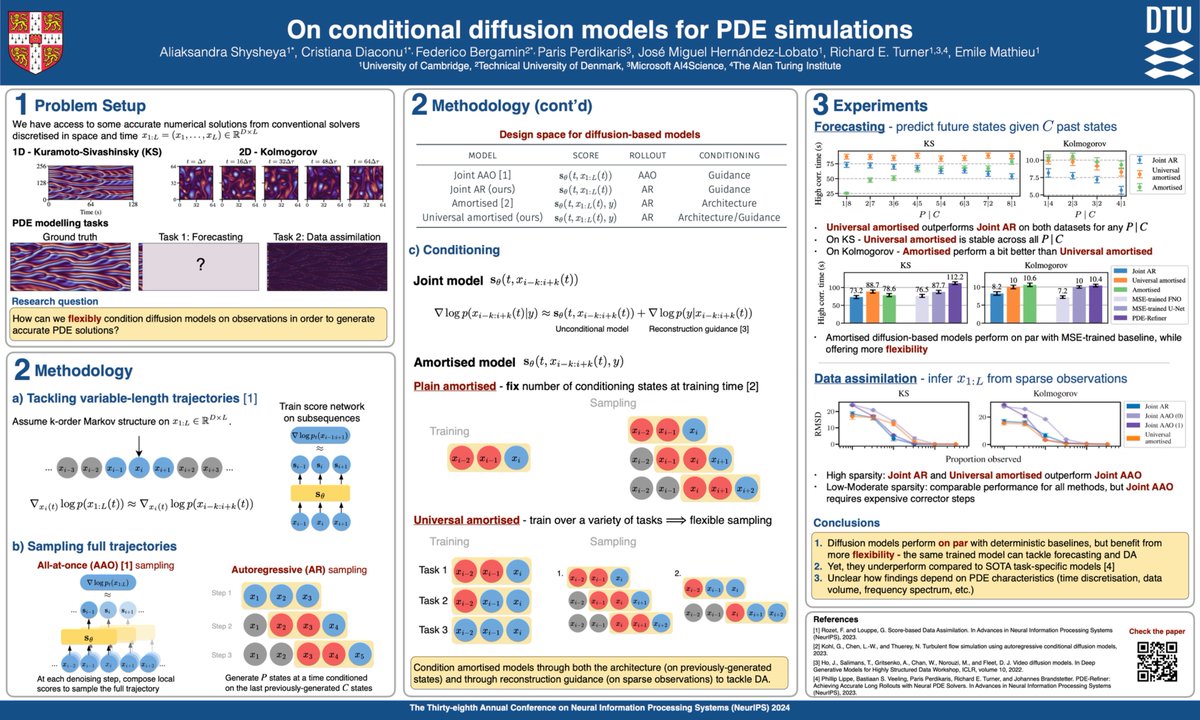

Heading to Vancouver for NeurIPS to present our paper “On Conditional Diffusion Models for PDE Simulation”. I'll be with Sasha and Cristiana Diaconu at poster #2500 during Thursday’s late-afternoon session. Looking forward to exciting discussions and meeting new people!