Piotr Piękos

@piotrpiekosai

PhD student with @SchmidhuberAI at @KAUST. Interested in systematic generalization and reasoning.

ID: 561139601

https://piotrpiekos.github.io 23-04-2012 13:39:19

25 Tweets

49 Followers

144 Following

Proud to announce our AdaSubS algorithm, which is "notable-top-5%" at #ICLR2023! Kudos to Michał Zawalski, Michal Tyrolski, and Konrad Czechowski, who led the project, and to all the other wonderful co-authors: Tomek Odrzygóźdź, @Dstauchur, Piotr Piękos, Yuhuai (Tony) Wu, and Łukasz Kuciński. youtu.be/7GZbPB1Gu0E

1/x Proud to announce our AdaSubS RL algorithm, which is "notable-top-5%" at #ICLR2023! Congratulations to the team of wonderful co-authors: Michał Zawalski, Konrad Czechowski, Tomek Odrzygóźdź, @Dstauchur, Piotr Piękos, Yuhuai (Tony) Wu, Łukasz Kuciński, and Piotr Miłoś.

The project I was involved in has been selected as "notable-top-5%" at #iclr2023. Check it out! Congratulations to all authors and thanks for the fantastic collaboration: Michał Zawalski, Konrad Czechowski, Michal Tyrolski, @Dstauchur, Tomek Odrzygóźdź, Yuhuai (Tony) Wu, Łukasz Kuciński, and Piotr Miłoś.

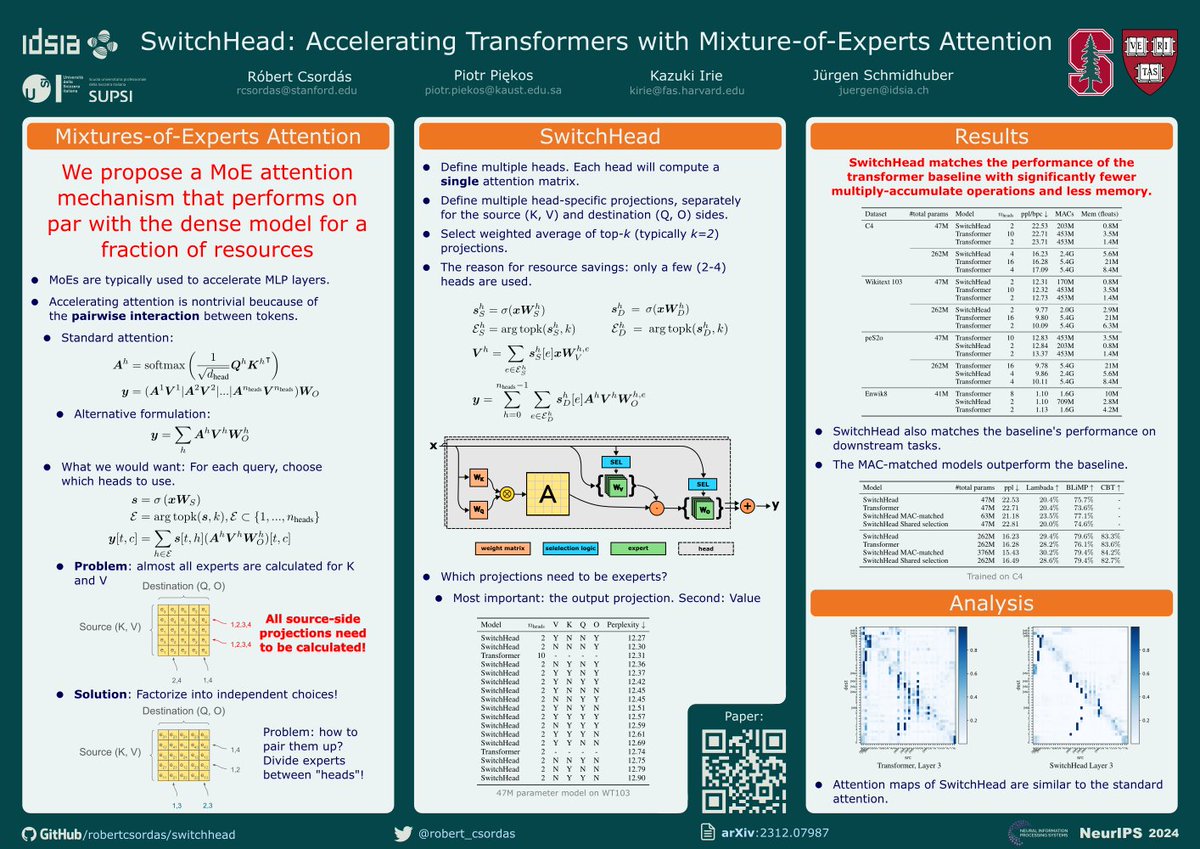

Come visit our poster "SwitchHead: Accelerating Transformers with Mixture-of-Experts Attention" on Thursday at 11 am in East Exhibit Hall A-C at #NeurIPS2024. With Piotr Piękos, Kazuki Irie, and Jürgen Schmidhuber.

One of my rejected ICML 2025 (ICML Conference) papers. Can anyone spot any criticism in the metareview? What a joke 🙃

fly51fly (@fly51fly): [LG] Mixture of Sparse Attention: Content-Based Learnable Sparse Attention via Expert-Choice Routing

P Piękos, R Csordás, J Schmidhuber [KAUST & Stanford University] (2025)

arxiv.org/abs/2505.00315

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/Gp-dDZObkAAuwWb.jpg)