philip

@philiptorr

Professor Oxford

ID: 27057939

27-03-2009 17:59:14

71 Tweets

367 Followers

89 Following

very proud that my work on multi-agent debate for misinformation detection won the best paper award at the ICML Conference CFAgentic workshop! check it out on arXiv: arxiv.org/abs/2410.20140 very grateful to all my co-authors and for the support from BBC Research & Development 🥳

Finally, a good modern book on causality for ML: causalai-book.net by Elias Bareinboim. This looks like a worthy successor to the groundbreaking book by Judea Pearl, which I read in grad school. (h/t Joshua Safyan for the ref.)

A big thank you to the fantastic coauthors: philip, Ioannis Patras, Adel Bibi and Fazl Barez!

This project would not have been possible without amazing collaborators! Very grateful to my co-leads on the project, Alesia Ivanova (who is an MSc student at Oxford!) and Charlie London, along with Jack Cai, and my advisors at Microsoft, Shital Shah and

Thanks to everyone who worked on this, particularly the leads Sumeet Motwani and Alesia, along with Jack Cai, Shital Shah, Riashat Islam, Christian Schroeder de Witt and philip!

This is joint work by amazing folks from Oxford, including Alesia Ivanova, Sumeet Motwani, Charlie London, Jack Cai, Christian Schroeder de Witt and philip, and Riashat Islam from Microsoft Research. arXiv: arxiv.org/abs/2510.07312 Code: github.com/AlesyaIvanova/…

Work done with my awesome collaborators Iván Arcuschin, Arthur Conmy, Neel Nanda and philip as part of the MATS Research

![Guohao Li (Hiring!) 🐫 (@guohao_li) on Twitter photo 🚨 [Call for Papers] SEA Workshop @ NeurIPS 2025 🚨

📅 December 6, 2025 | 📍 San Diego, USA

🌐: sea-workshop.github.io

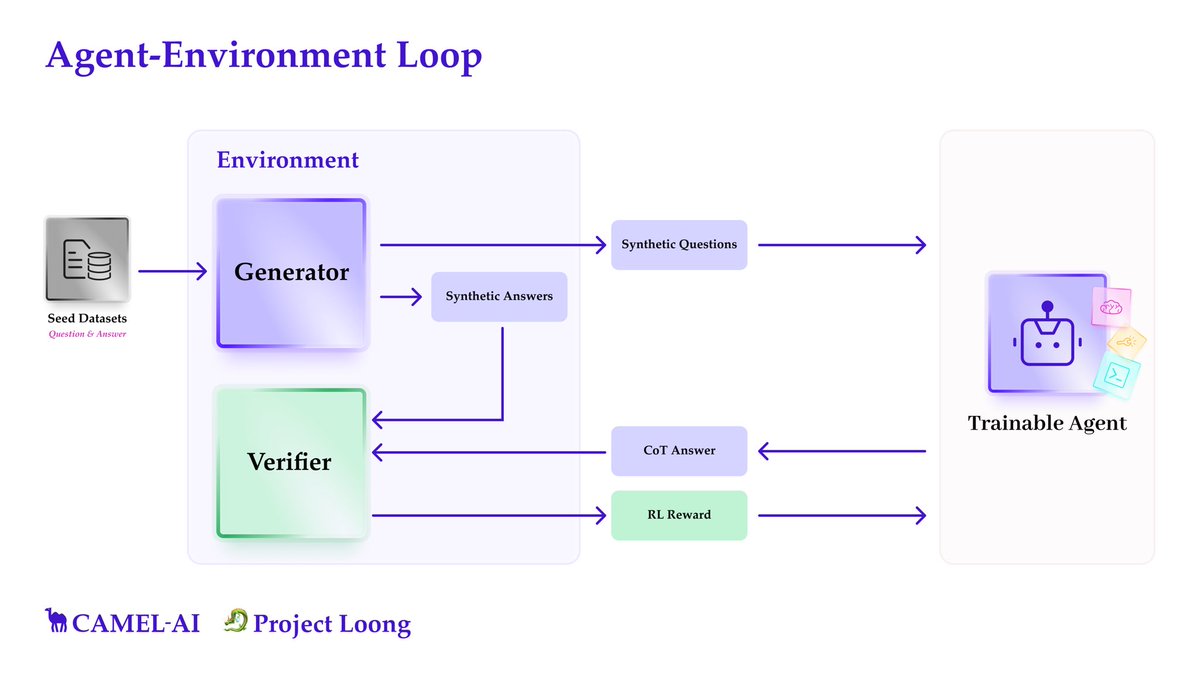

Environments are the "data" for training agents, which is largely missing in the open source ecosystem.

We are hosting Scaling Environments for Agents (SEA) 🚨 [Call for Papers] SEA Workshop @ NeurIPS 2025 🚨

📅 December 6, 2025 | 📍 San Diego, USA

🌐: sea-workshop.github.io

Environments are the "data" for training agents, which is largely missing in the open source ecosystem.

We are hosting Scaling Environments for Agents (SEA)](https://pbs.twimg.com/media/GwucQALWAAIcJ5D.jpg)