Pradeep Dasigi

@pdasigi

Senior Research Scientist @allen_ai; #NLProc, Post-training for OLMo

ID: 20038834

https://pdasigi.github.io/ 04-02-2009 09:00:52

442 Tweets

1.1K Followers

505 Following

Just arrived in 🇨🇦 to attend NeurIPS 2024! Excited to connect and chat about AI reliability and safety, resource-efficient approaches to AI alignment, inference-time scaling and anything in between! You can drop me a message/email ([email protected]) or find me at the

Here's a significant update to Tülu 3: we scaled up the post-training recipe to Llama 3.1 405B. Tülu 3 405B beats Llama's 405B instruct model and also DeepSeek V3. You can now access the model and the entire post-training pipeline. Huge shoutout to Hamish Ivison and Costa Huang who

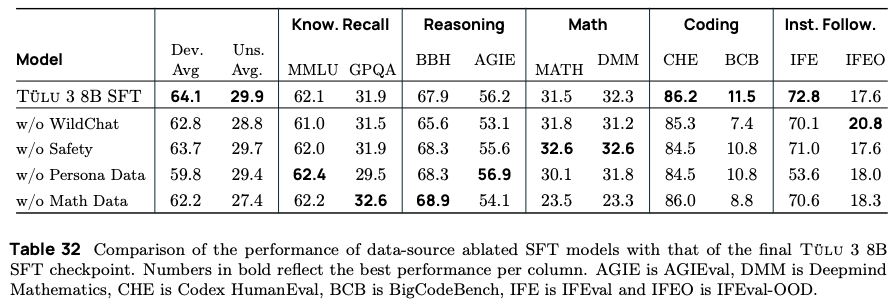

One additional thing in the updated Tulu 3 paper that I'd like to highlight is that Pradeep Dasigi went back and re-evaluated our mid-stage checkpoints on our held-out evals (Section 7.4). This lets us see what decisions generalized beyond the exact test sets we used! I think this is

Excited to drive innovation and push the boundaries of open, scientific AI research & development! 🚀 Join us at Ai2 to shape the future of OLMo, Molmo, Tulu, and more. We’re hiring at all levels—apply now! 👇 #AI #Hiring Research Engineer job-boards.greenhouse.io/thealleninstit… Research

How do you curate instruction-tuning datasets while targeting specific skills? This is a common question developers face while post-training LMs. In this work led by Hamish Ivison, we found that simple embedding-based methods scale much better than fancier, computationally intensive
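To make "embedding-based selection" concrete, here is a minimal, generic sketch, not the method from the work described above. It assumes each candidate training example and each example of the target skill has already been embedded by some encoder (not specified here), scores every candidate by its maximum cosine similarity to any target example (an assumed aggregation choice), and keeps the top `k`. The names `cosine` and `select_by_embedding` are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def select_by_embedding(pool, targets, k):
    """Hypothetical helper: return indices of the k pool embeddings most similar
    to the target-skill embeddings, scoring each candidate by its max cosine
    similarity over all targets (an assumed aggregation)."""
    scored = []
    for i, emb in enumerate(pool):
        score = max(cosine(emb, t) for t in targets)
        scored.append((score, i))
    # Highest score first; ties broken by original index for determinism.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [i for _, i in scored[:k]]

# Toy 2-D "embeddings" standing in for real encoder outputs.
pool = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
targets = [[1.0, 0.0]]
print(select_by_embedding(pool, targets, 2))  # prints [0, 2]
```

The appeal of this family of methods is that scoring costs one embedding per example plus cheap similarity lookups, so it grows roughly linearly with the size of the candidate pool.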

Percy Liang EleutherAI Nice! We also recently trained a set of models on 25 different pretraining corpora, with 14 model sizes (4M to 1B parameters) per corpus, each trained to 5x Chinchilla. We released 30,000+ checkpoints! x.com/allen_ai/statu… arxiv.org/pdf/2504.11393
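The scale of that suite follows from simple arithmetic. The sketch below assumes the common Chinchilla rule of thumb of roughly 20 training tokens per parameter (an approximation, not a figure from the tweet) and counts one training run per (corpus, size) pair; the helper name `token_budget` is hypothetical.

```python
# Assumption: "Chinchilla-optimal" is approximated as ~20 tokens per parameter.
CHINCHILLA_TOKENS_PER_PARAM = 20
MULTIPLIER = 5  # "5x Chinchilla"

def token_budget(n_params, multiplier=MULTIPLIER):
    """Hypothetical helper: approximate training tokens for a model with n_params."""
    return n_params * CHINCHILLA_TOKENS_PER_PARAM * multiplier

n_corpora = 25
n_sizes = 14
n_runs = n_corpora * n_sizes  # one run per (corpus, model size) pair

smallest = token_budget(4_000_000)      # 4M-parameter model
largest = token_budget(1_000_000_000)   # 1B-parameter model

print(n_runs)    # prints 350
print(smallest)  # prints 400000000
print(largest)   # prints 100000000000
```

Under these assumptions, the largest models see about 100B tokens each, while the smallest see about 0.4B.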