Pavankumar Vasu

@pavankumarvasu

ID: 1586729245

11-07-2013 20:12:38

24 Tweets

136 Followers

117 Following

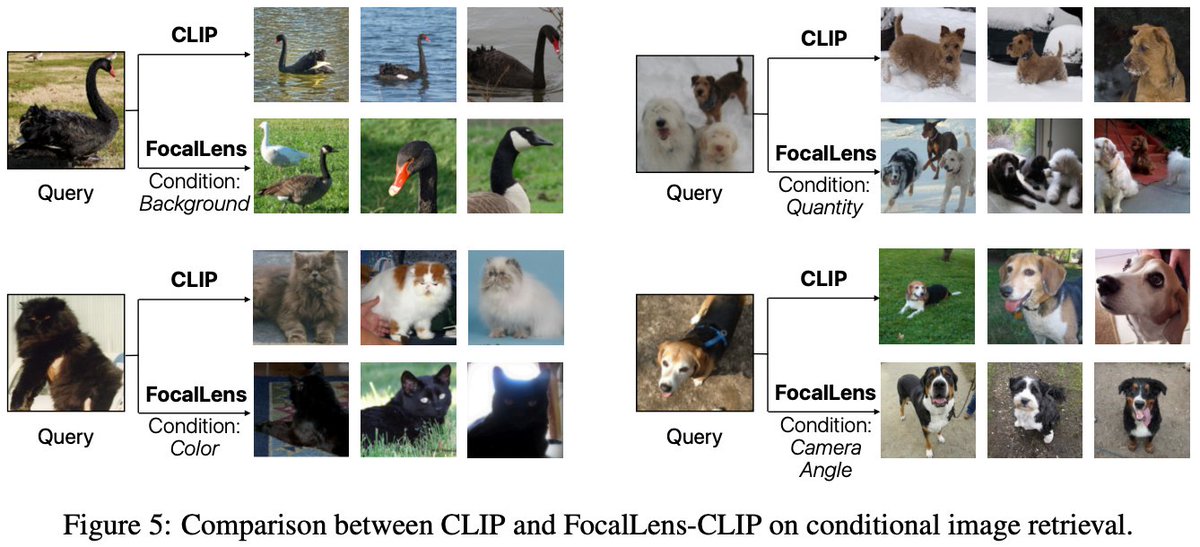

Excited to introduce FocalLens: an instruction tuning framework that turns existing VLMs/MLLMs into text-conditioned vision encoders that produce visual embeddings focusing on relevant visual information given natural language instructions! 📢: Hadi Pouransari will be presenting