Parishad BehnamGhader

@parishadbehnam

NLP PhD student at @Mila_Quebec and @mcgillu

ID: 828506588902256640

http://parishadbehnam.github.io 06-02-2017 07:32:18

56 Tweets

145 Followers

99 Following

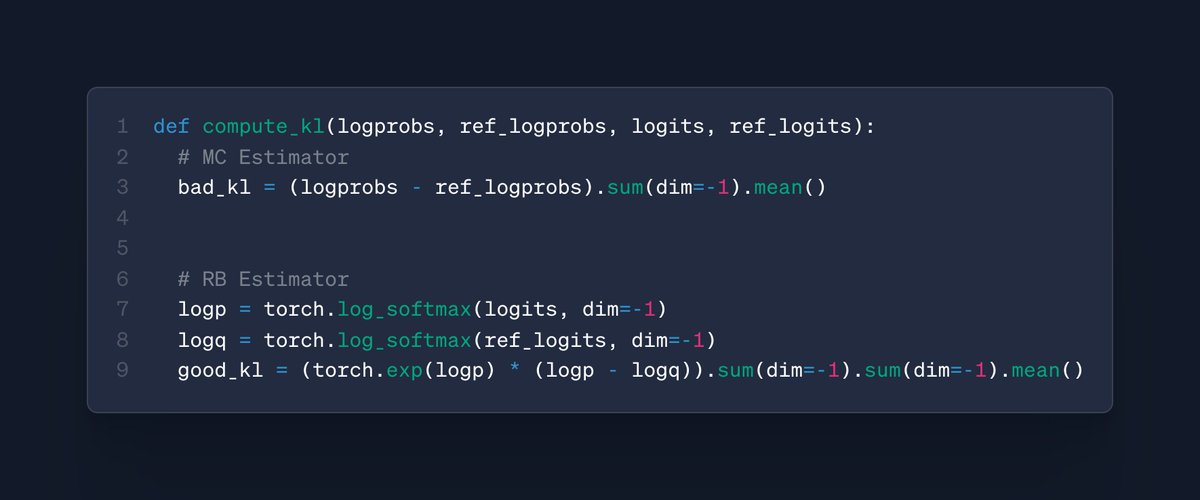

Current KL estimation practices in RLHF can produce high-variance and even negative estimates! We propose a provably better estimator that takes only a few lines of code to implement.🧵👇 w/ Tim Vieira and Ryan Cotterell

paper: arxiv.org/pdf/2504.10637
code: github.com/rycolab/kl-rb
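The post does not spell out the estimator, so the following is only a hedged illustration of the problem it describes, not the method from the linked paper. The usual Monte Carlo KL estimate sums the sampled per-token log-ratios log p(y_t | y_<t) - log q(y_t | y_<t); a single sample of that sum can be negative even though KL(p || q) >= 0. One standard variance-reduction idea (Rao-Blackwellization, swapped in here as our own example) replaces each sampled log-ratio with the exact per-step KL over the whole vocabulary, which keeps the estimate unbiased, never negative, and no higher in variance. The two "language models" below are hypothetical toy Markov chains over a 3-token vocabulary; all names and numbers are assumptions made for the sketch.

```python
import itertools
import math
import random

# Hypothetical toy "language models": Markov chains over a 3-token vocabulary.
# The tables are made up for illustration; any strictly positive tables work.
VOCAB = [0, 1, 2]
T = 3  # fixed sequence length (assumption)

P_INIT = [0.5, 0.3, 0.2]
Q_INIT = [0.4, 0.4, 0.2]
P_TRANS = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.3, 0.3, 0.4]]
Q_TRANS = [[0.5, 0.4, 0.1], [0.3, 0.4, 0.3], [0.2, 0.4, 0.4]]


def next_dist(init, trans, prev):
    """Next-token distribution given the previous token (None at t = 0)."""
    return init if prev is None else trans[prev]


def kl(p, q):
    """Exact KL(p || q) between two categorical distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def sample_with_estimates(rng):
    """Draw one sequence y ~ p; return (naive, rao_blackwellized) KL estimates."""
    prev, naive, rb = None, 0.0, 0.0
    for _ in range(T):
        p = next_dist(P_INIT, P_TRANS, prev)
        q = next_dist(Q_INIT, Q_TRANS, prev)
        rb += kl(p, q)  # exact expected log-ratio at this step, always >= 0
        y = rng.choices(VOCAB, weights=p)[0]
        naive += math.log(p[y] / q[y])  # single-sample log-ratio, may be < 0
        prev = y
    return naive, rb


def seq_logprobs(seq):
    """Log-probabilities of a full sequence under p and under q."""
    lp = lq = 0.0
    prev = None
    for y in seq:
        lp += math.log(next_dist(P_INIT, P_TRANS, prev)[y])
        lq += math.log(next_dist(Q_INIT, Q_TRANS, prev)[y])
        prev = y
    return lp, lq


def exact_sequence_kl():
    """Ground-truth sequence-level KL by enumerating all |V|^T sequences."""
    total = 0.0
    for seq in itertools.product(VOCAB, repeat=T):
        lp, lq = seq_logprobs(seq)
        total += math.exp(lp) * (lp - lq)
    return total


def mean_var(xs):
    """Sample mean and (biased) sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / len(xs)


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_with_estimates(rng) for _ in range(20000)]
    naive_m, naive_v = mean_var([d[0] for d in draws])
    rb_m, rb_v = mean_var([d[1] for d in draws])
    print(f"exact KL      : {exact_sequence_kl():.4f}")
    print(f"naive MC      : mean {naive_m:.4f}  var {naive_v:.5f}  "
          f"min {min(d[0] for d in draws):.4f}")
    print(f"Rao-Blackwell : mean {rb_m:.4f}  var {rb_v:.5f}  "
          f"min {min(d[1] for d in draws):.4f}")
```

In this toy setup both estimators average to the exact KL, but individual naive samples dip below zero, while the Rao-Blackwellized samples stay non-negative with far smaller spread. The trade-off is cost: the per-step KL needs the full next-token distributions of both models rather than just the sampled token's probabilities.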

Amirhossein Kazemnejad (@a_kazemnejad):

Introducing nanoAhaMoment: Karpathy-style, single-file RL for LLM library (<700 lines)

- super hackable
- no TRL / Verl, no abstraction💆‍♂️
- Single GPU, full param tuning, 3B LLM
- Efficient (R1-zero countdown < 10h)

comes with a from-scratch, fully spelled out YT video [1/n]

![Amirhossein Kazemnejad (@a_kazemnejad) on Twitter photo](https://pbs.twimg.com/media/GnoErcuXsAA4Yxa.jpg)