Oscar Davis

@osclsd

PhD ML @UniofOxford; generative modelling; previously at MSR, EPFL, Imperial

ID: 1794032046622277632

https://olsdavis.github.io/

24-05-2024 15:45:55


A little late, but happy to announce that our paper on Rough Transformers ⛰️ has been accepted at NeurIPS 2024! We show how path signatures can make Transformers for temporal data more efficient and more robust to irregular sampling. Read on! #neurips2024 (1/7)

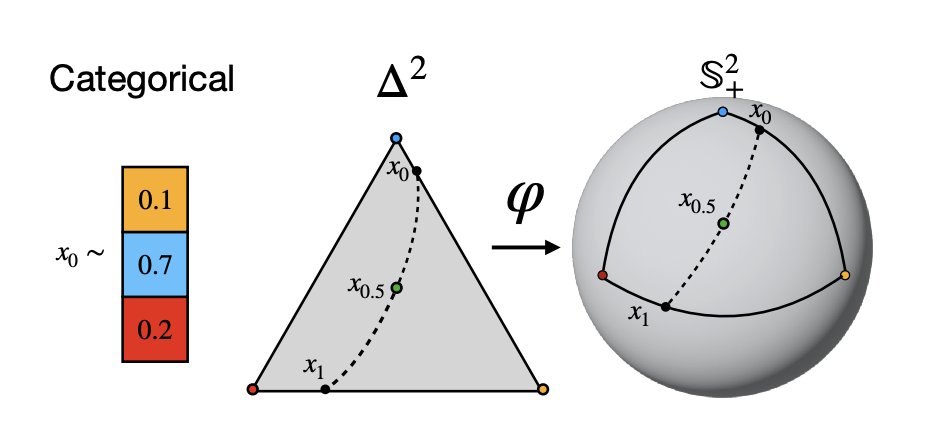

Fisher Flows for discrete generative modeling, led by Oscar Davis: arxiv.org/abs/2405.14664

Fisher Flow Matching for Generative Modeling over Discrete Data: nips.cc/virtual/2024/p… (Oscar Davis, Samuel Kessler, Mircea Petrache, İsmail İlkan Ceylan, Joey Bose)

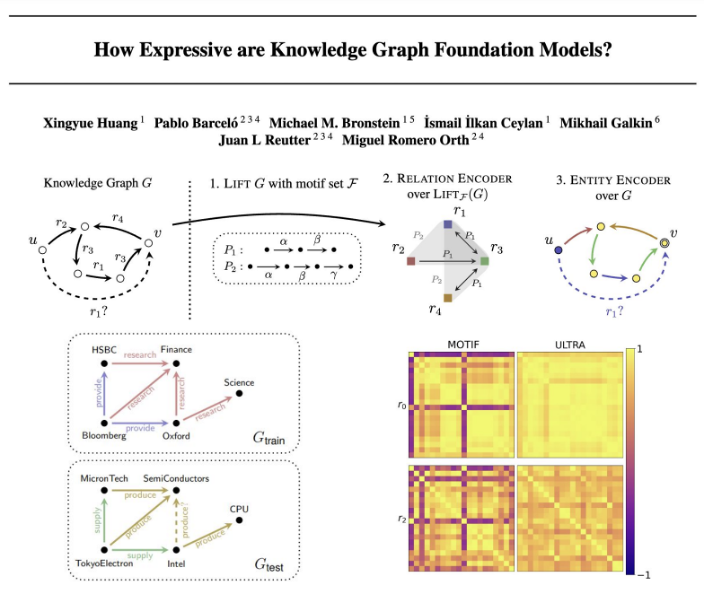

Knowledge Graph Foundation Models (KGFMs) are at the frontier of graph learning, but we didn’t have a principled understanding of what we can (or can’t) do with them. Now we do! 💡🚀 🧵 with Pablo Barcelo, İsmail İlkan Ceylan, Michael Bronstein, Michael Galkin, Juan Reutter, Miguel

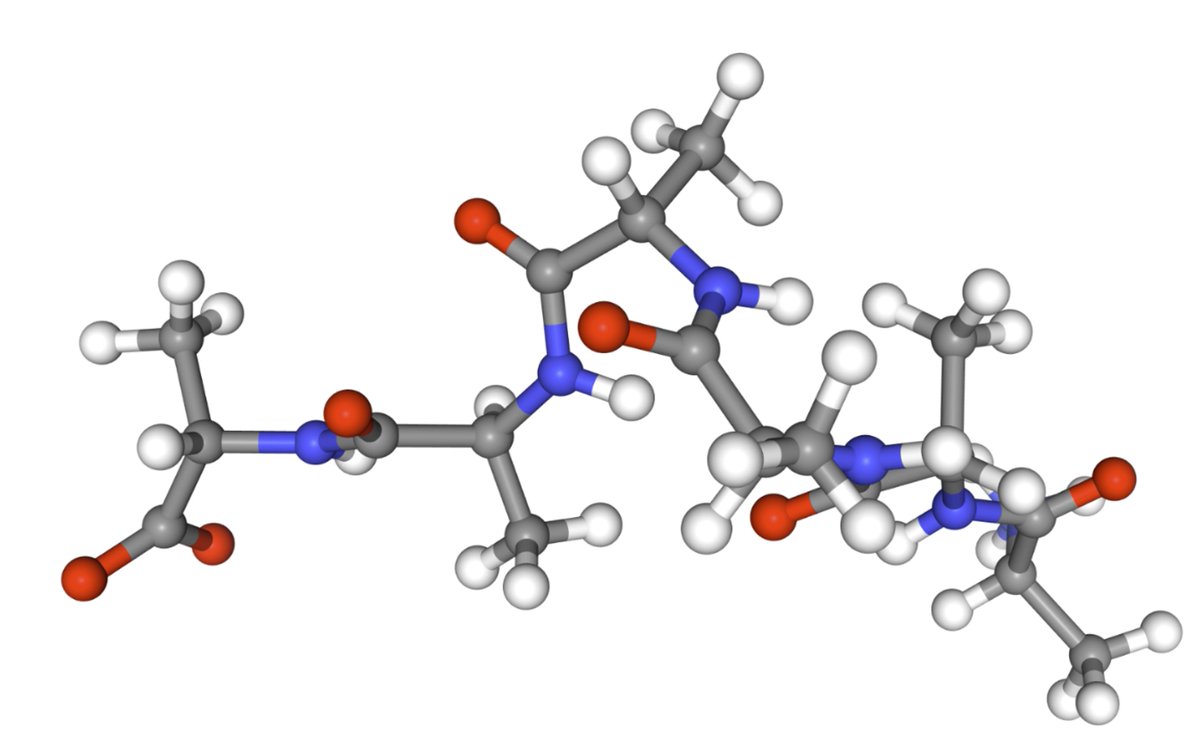

New preprint! 🚨 We scale equilibrium sampling to a hexapeptide (in Cartesian coordinates!) with Sequential Boltzmann generators! 📈 🤯 Work with Joey Bose, Chen Lin, Leon Klein, Michael Bronstein and Alex Tong. Thread 🧵 1/11

Great final lecture in Michael Bronstein's GDL course, given by Joey Bose 🚀 feat. the famous smiley gif by Oscar Davis 😄

Excited to release FORT, a new regression-based approach for training normalizing flows 🔥! 🔗 Paper available here: arxiv.org/abs/2506.01158 (new paper w/ Oscar Davis, Jiarui Lu, Jian Tang, Michael Bronstein, Yoshua Bengio, Alex Tong, Joey Bose) 🧵1/6

🎉Personal update: I'm thrilled to announce that I'm joining Imperial College London as an Assistant Professor of Computing (Imperial Computing), starting January 2026. My future lab and I will continue to work on building better Generative Models 🤖, the hardest

GenBio Workshop ORAL Presentation 📜 Title: FORT: Forward-Only Regression Training of Normalizing Flows 🕐 When: Fri 18 Jul 🗺️ Where: East Exhibition Hall A 🔗 arXiv: arxiv.org/pdf/2506.01158 w/ Danyal Rehman, Oscar Davis, Jiarui Lu, Jian Tang, Michael Bronstein, Yoshua Bengio

Wrapping up #ICML2025 on a high note: thrilled (and pleasantly surprised!) to win the Best Paper Award at the GenBio Workshop @ ICML25 🎉 Big shoutout to the team that made this happen! Paper: Forward-Only Regression Training of Normalizing Flows (arxiv.org/abs/2506.01158) Mila - Institut québécois d'IA

📢Interested in doing a PhD in generative models 🤖, AI4Science 🧬, sampling 🧑‍🔬, and beyond? I am hiring PhD students at Imperial College London (Imperial Computing) for the next application cycle. 🔗See the call below: joeybose.github.io/phd-positions/ And a light expression of interest:

Very excited to share this! We introduce a new approach to knowledge graph foundation models built on probabilistic equivariance. The model is simple, expressive, and probabilistically equivariant, and it works remarkably well! Collaboration led by Jinwoo Kim and Xingyue Huang.