Orr Zohar @ ICLR’25

@orr_zohar

PhD Student @Stanford • Researching large multimodal models • @KnightHennessy scholar • Advised by @yeung_levy

ID: 1659236939088936961

https://orrzohar.github.io/

Joined: 18-05-2023 16:38:24

79 Tweets

279 Followers

169 Following

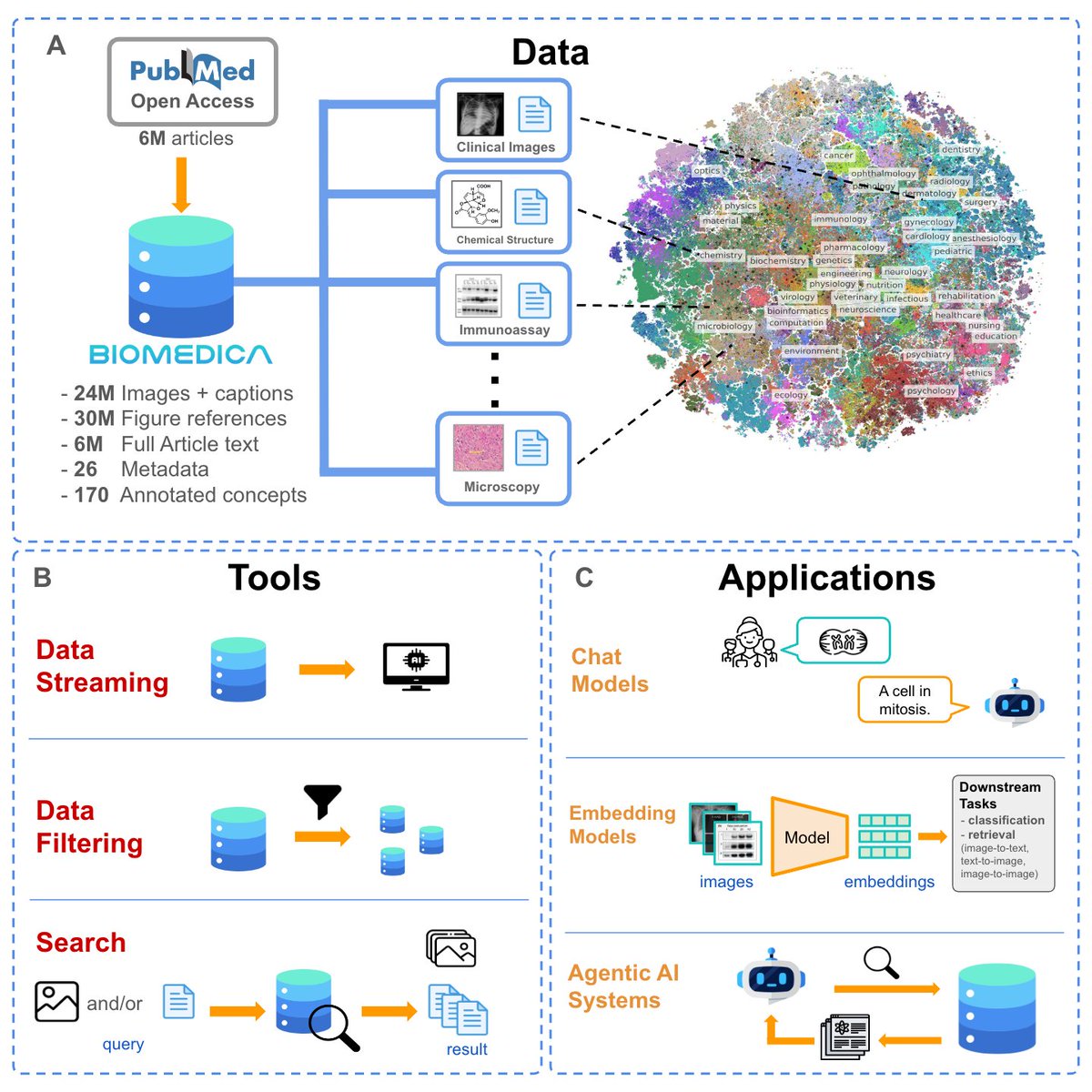

Excited to see SmolVLM powering BMC-SmolVLM in the latest BIOMEDICA update! At just 2.2B params, it matches 7-13B biomedical VLMs. Check out the full release: Hugging Face #smolvlm

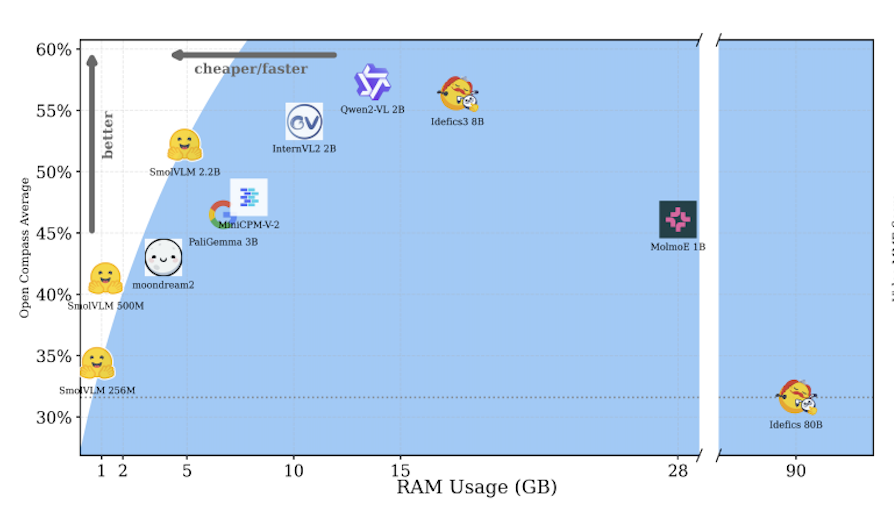

SmolVLM paper is out 🔥 It's one of my favorite papers since it contains a ton of findings on training a good smol model 🤯 Andi Marafioti summarized it here ⤵️

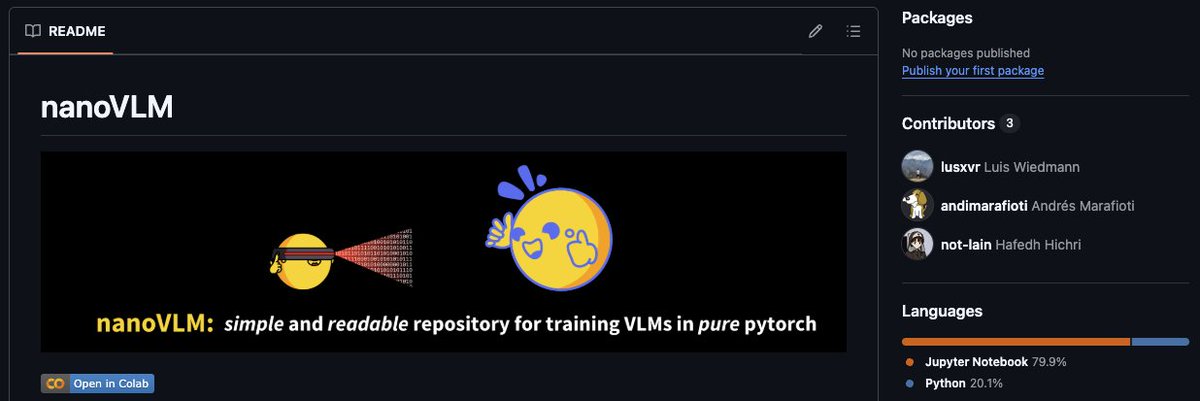

New open-source drop from the HF team: nanoVLM. A super tight codebase to learn/train VLMs with good performance, inspired by Andrej Karpathy's nanoGPT. 750 lines of PyTorch code. Training a 222M-parameter nanoVLM for 6 hours on a single H100 reaches 35.3% on MMStar, matching the