Ori Press

@ori_press

Graduate student @BethgeLab.

I yearn to deep learn

ID: 1076861996283367425

http://oripress.com 23-12-2018 15:28:01

104 Tweets

364 Followers

393 Following

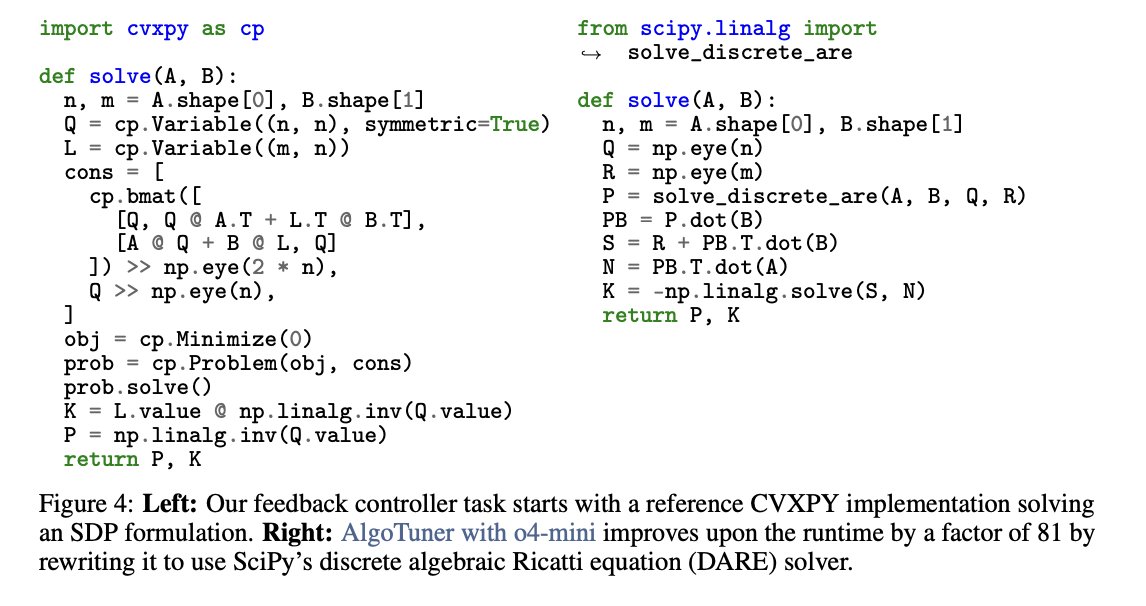

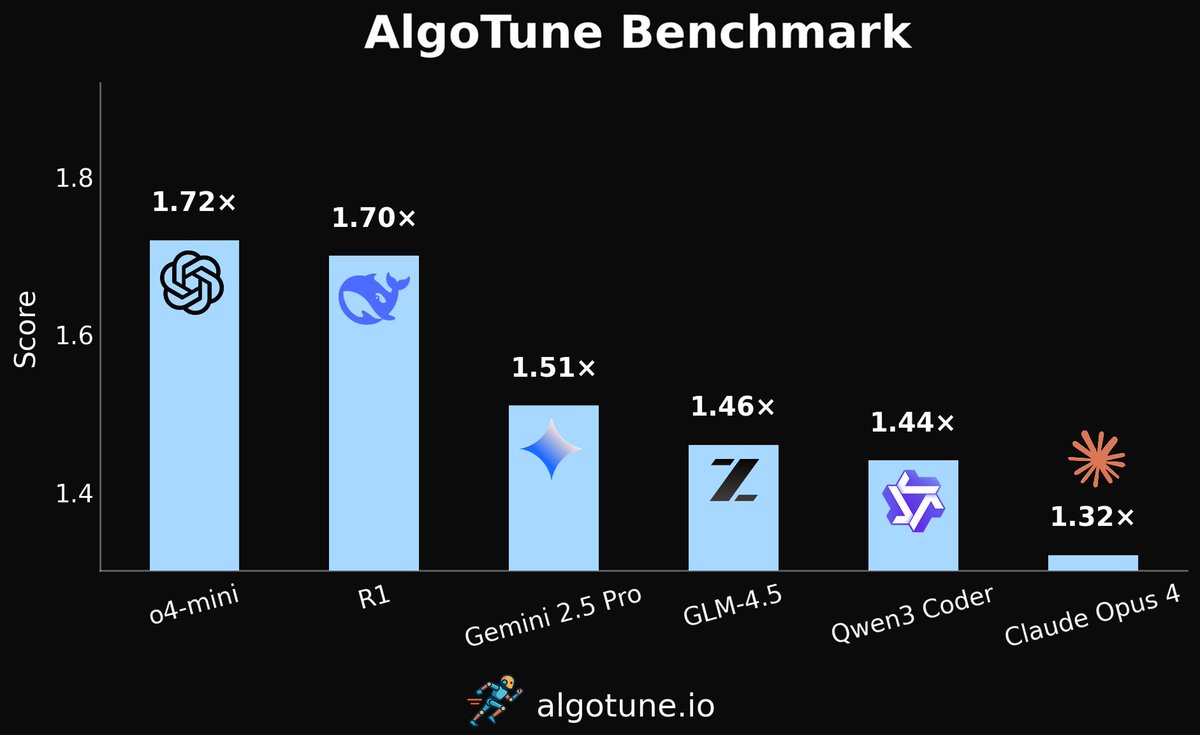

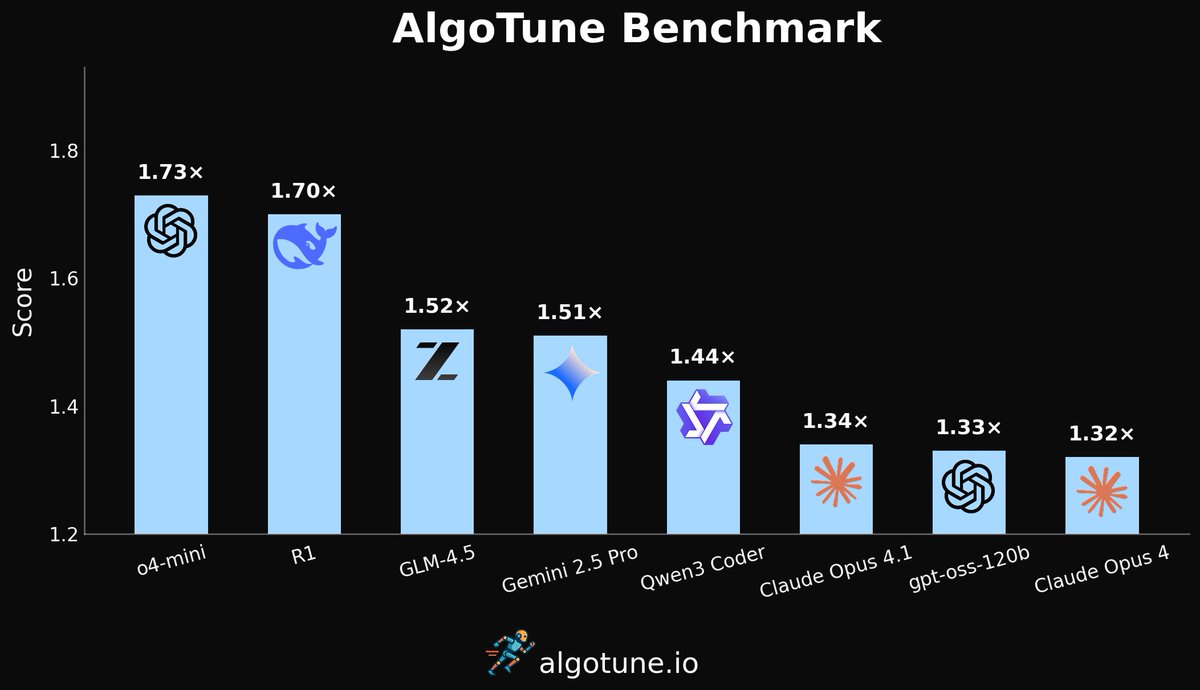

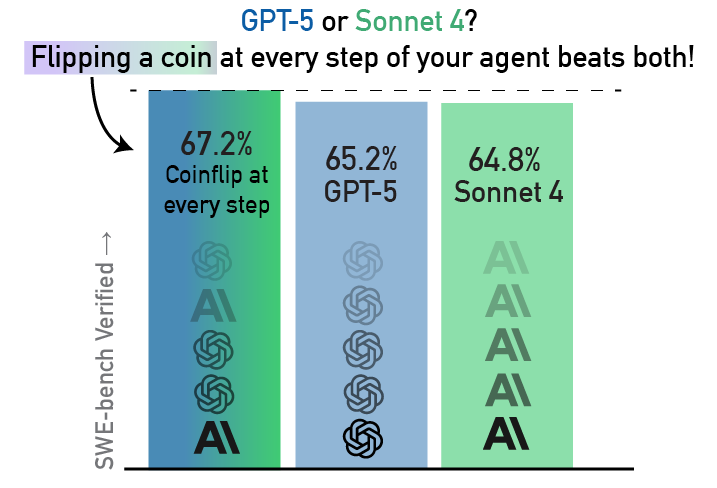

Excited to release AlgoTune!! It's a benchmark and coding agent for optimizing the runtime of numerical code 🚀 algotune.io 📚 algotune.io/paper.pdf 🤖 github.com/oripress/AlgoT… with Ofir Press, Ori Press, Patrick Kidger, Bartolomeo Stellato, Arman Zharmagambetov & many others 🧵