OpenGVLab

@opengvlab

Shanghai AI Lab, General Vision Team. We created InternImage, BEVFormer, VideoMAE, LLaMA-Adapter, Ask-Anything, and many more! [email protected]

ID: 1610948392489979904

https://github.com/OpenGVLab 05-01-2023 10:36:53

133 Tweets

1.1K Followers

87 Following

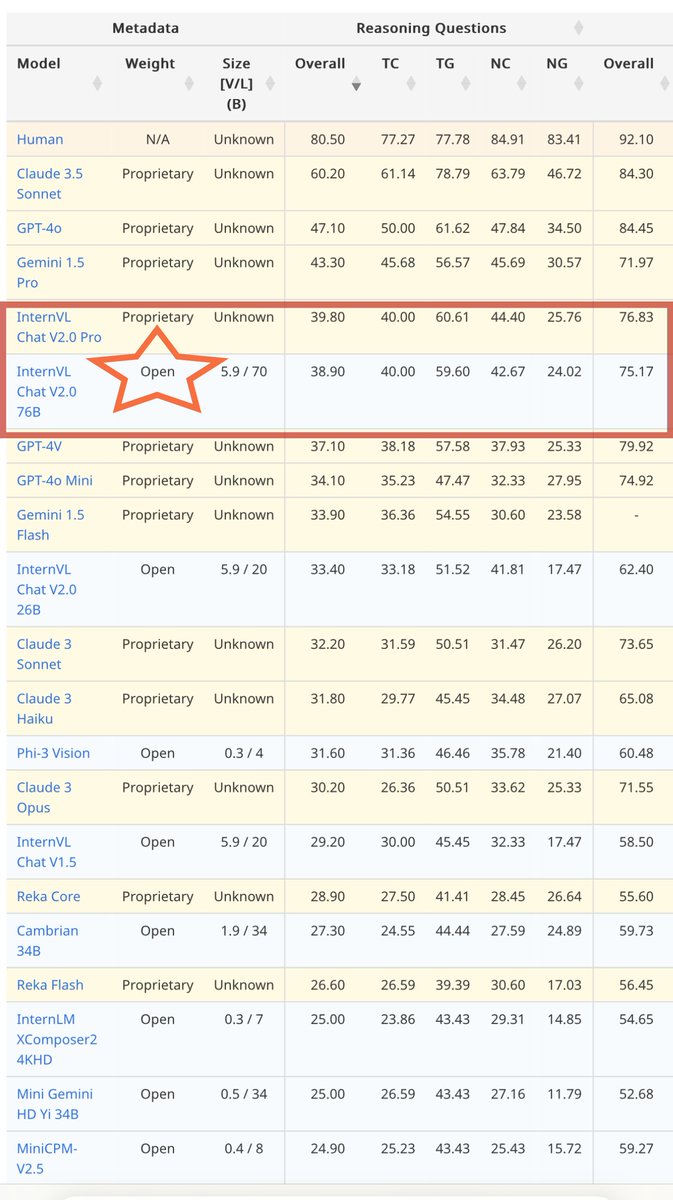

CharXiv is Zirui "Colin" Wang's excellent work on evaluating the chart understanding ability of #mllm. InternVL2-Llama3-76B is the best open-source model in this domain. BTW, the song that summarizes the key findings is creative! I love it! 👍CharXiv leaderboard and the song:

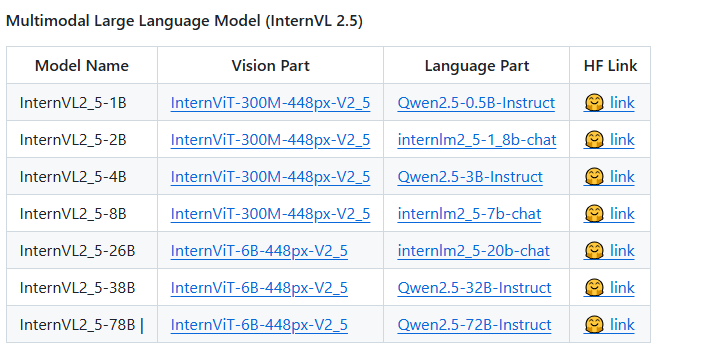

🥳We have released InternVL2.5, ranging from 1B to 78B, on Hugging Face. 😉InternVL2_5-78B is the first open-source #MLLM to achieve over 70% on the MMMU benchmark, matching the performance of leading closed-source commercial models like GPT-4o. 🤗HF Space:

People are paying more and more attention to the quality and details of generated videos. Use a single hand-tuned temperature parameter to enhance your generated videos for free! Nice work with our amazing friends Yang Luo, Xuanlei Zhao, Wenqi Shaw, Victor.Kai Wang, VITA Group,

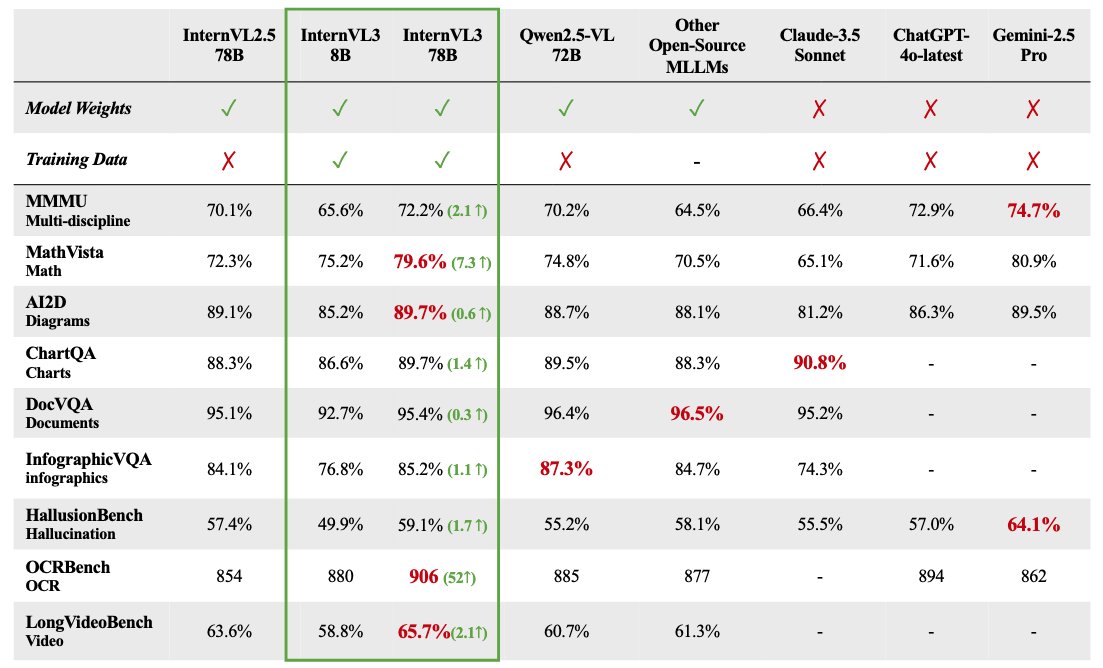

🥳We have released #InternVL3, an advanced #MLLM series ranging from 1B to 78B, on Hugging Face. 😉InternVL3-78B achieves a score of 72.2 on the MMMU benchmark, setting a new SOTA among open-source MLLMs. ☺️Highlights: - Native multimodal pre-training: Simultaneous language and