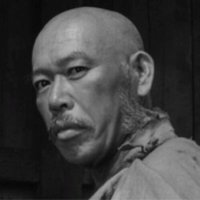

Onur Güngör

@onurgu_ml

Part-time faculty - Bogazici University Comp. Eng.,

Data scientist and ML engineer

ID: 3303819562

https://www.cmpe.boun.edu.tr/~onurgu/

Joined: 30-05-2015 15:15:11

1.1K Tweets

991 Followers

1.1K Following

TURNA: the biggest Turkish encoder-decoder model to date, based on the UL2 architecture, comes with 1.1B params 🐦 😍 The researchers also released models fine-tuned on various downstream tasks, including text categorization, NER, summarization and more! 🤯 Great models, Onur Güngör

We would like to compare it with our model TURNA and perhaps others. What about working together on a benchmark for Turkish? Ahmet Üstün

Good news! Our LLaMA-2-Econ model, built with Ömer Turan Bayraklı, was accepted at inetd_org by İÜ on Feb 24 🎉 We used supervised fine-tuning to adapt the model to academic tasks on economics papers, and it surpassed previous models. Special thanks to Onur Güngör for the feedback!

We will host Onur Güngör from Boğaziçi University and Udemy for his talk “TURNA: A Turkish Encoder-Decoder Language Model for Enhanced Understanding and Generation” on March 4 at 8 PM (GMT+3)!