Chris Krempel

@nudelbrot

I'm hacking transformers on arch btw.

ID: 80350379

https://ohmytofu.ai 06-10-2009 17:07:14

1.1K Tweets

136 Followers

354 Following

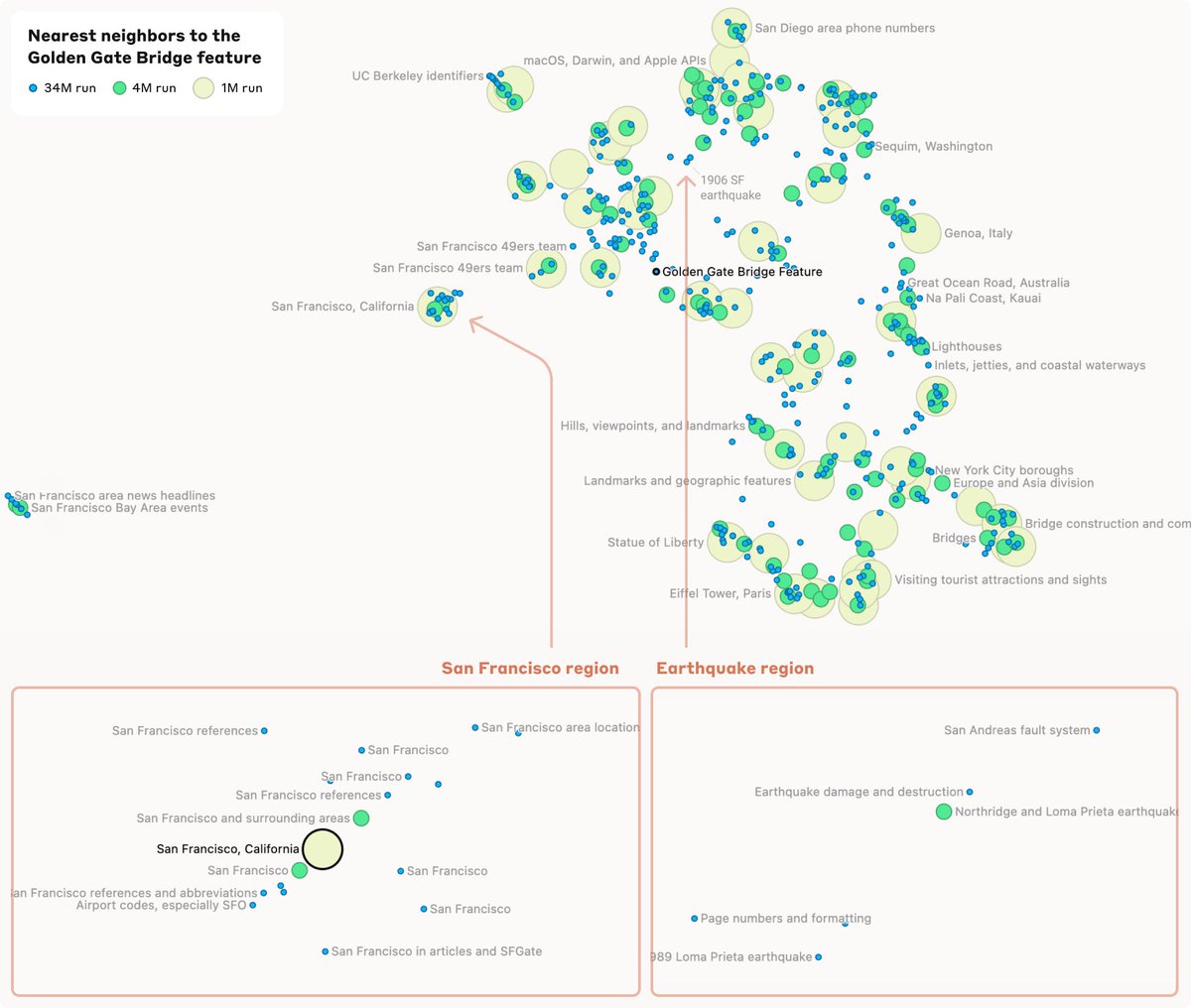

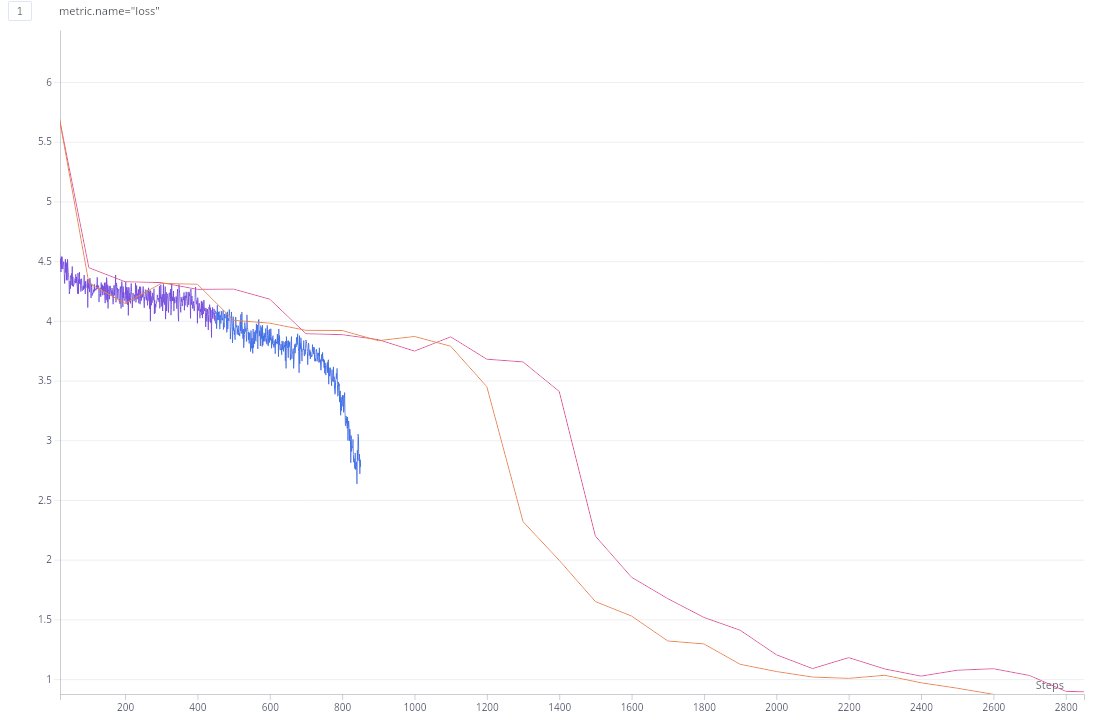

Started embedding the Hugging Face FineWeb dataset - wishing for a compute grant 😂