Nicolas Zucchet

@nicolaszucchet

PhD student @CSatETH

prev. student researcher @GoogleDeepMind | @Polytechnique

ID: 936865625233817600

https://nicolaszucchet.github.io

02-12-2017 07:52:25

140 Tweets

415 Followers

325 Following

I really like this new op-ed from David Duvenaud on how so many different kinds of pressures could drive towards loss of human control over AI. It's rare to read anything well written on this topic, but this piece was elegant and smart enough that I wanted to keep on reading.

Smooth, predictable scaling laws are central to our conceptions and forecasts about AI -- but lots of capabilities actually *emerge* in sudden ways. Awesome work by Nicolas Zucchet and Francesco D'Angelo bringing more predictability to emergent phenomena, by studying one type: sparse attention.

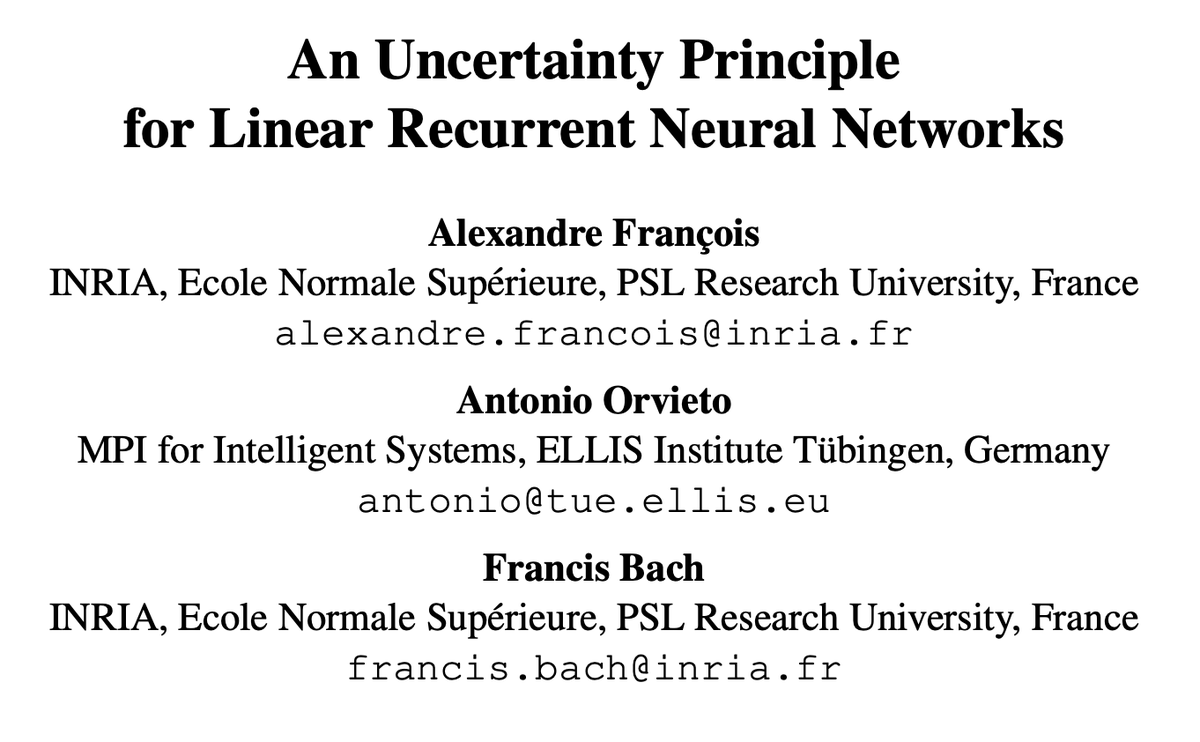

We have a new SSM theory paper, just accepted to COLT, revisiting recall properties of linear RNNs. It's surprising how much one can delve into, and how beautiful it can become. With (and only thanks to) the amazing Alexandre and Francis Bach: arxiv.org/pdf/2502.09287

Super excited to host a student researcher together with Johannes Oswald this year! Please sign up if you wanna have some research fun with us :)