Nicholas Roberts

@nick11roberts

Ph.D. student @WisconsinCS. Working on foundation models and breaking past scaling laws. Previously at CMU @mldcmu, UCSD @ucsd_cse, FCC @fresnocity.

ID: 558161705

http://nick11roberts.science 19-04-2012 23:55:34

469 Tweets

1.1K Followers

1.1K Following

Heading to #ICML! I’ll be representing SprocketLab at UW–Madison and Snorkel AI. Reach out if you want to chat about data-centric AI, data development, agents, and foundation models.

“Rubrics” have become a buzzword in AI, but the concept predates the hype. At Snorkel AI, we’re excited to share a fun primer on what rubric‑based evaluation is—and why it’s critical for today’s generative and agentic models.

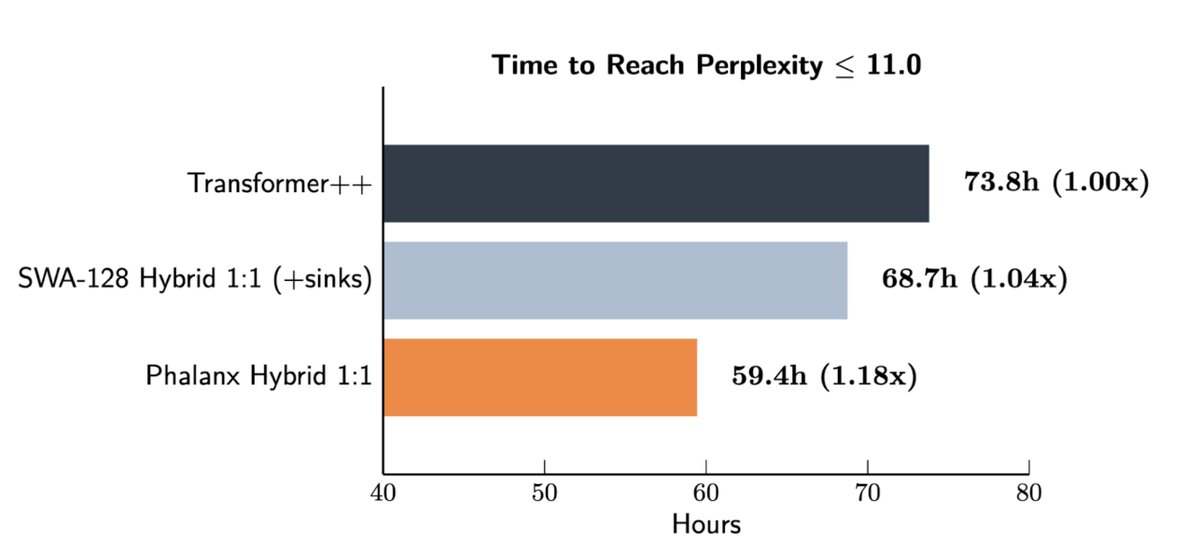

Excited for the Conference on Language Modeling next week! I'm taking meetings on scaling laws, hybrid LLMs (Transformer↔SSM/Mamba), and agents. 🎓 I'm also graduating and open to chatting about future opportunities. Grab a slot! docs.google.com/forms/d/e/1FAI… FYI: Tue 4:30–6:30 I’ll be at my poster. #COLM2025

Super excited to present our new work on hybrid architecture models—getting the best of Transformers and SSMs like Mamba—at #COLM2025! Come chat with Nicholas Roberts at poster session 2 on Tuesday. Thread below! (1)

Hello world, we wanted to share an early preview of what we're building at Radical Numerics!

The coolest trend for AI is shifting from conversation to action—less talking and more doing. This is also a great opportunity for evals: we need benchmarks that measure utility, including in an economic sense. terminalbench is my favorite effort of this type!

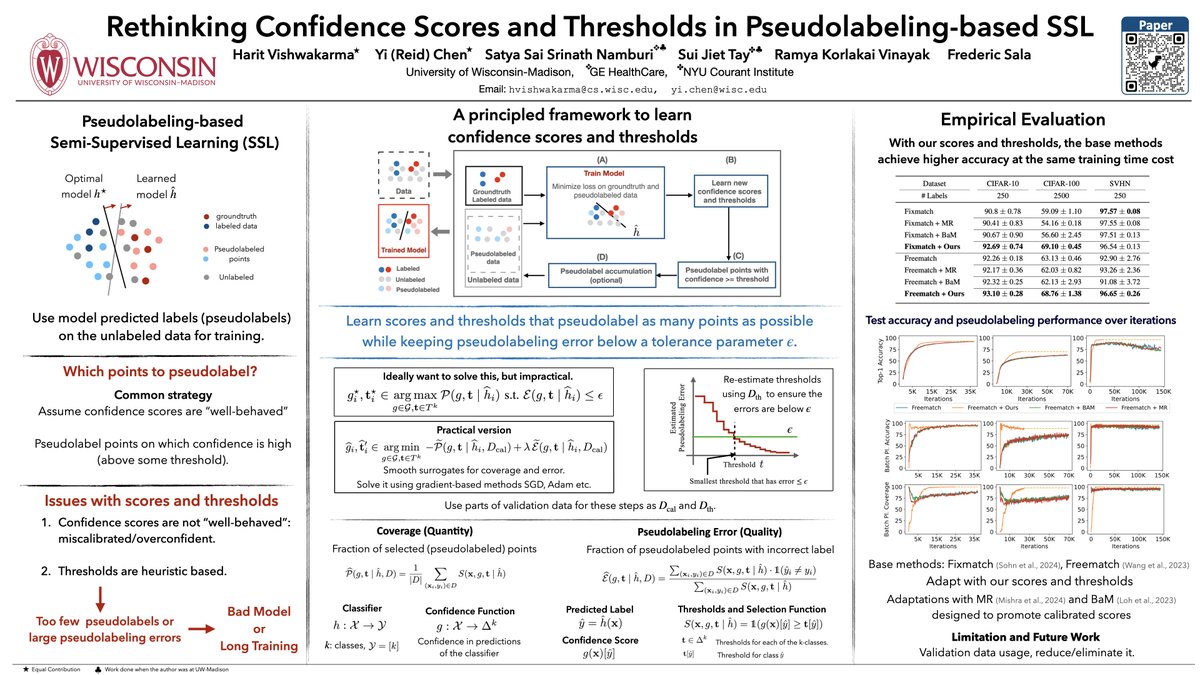

Harit Vishwakarma (@harit_v): Excited to be at ICML’25!!! I'll present papers on improving LLM inference and evaluation and pseudolabeling-based semi-supervised learning.

Come and say hi during these sessions, or chat anytime during the week!

[C1]. Prune 'n Predict: Optimizing LLM Decision-making with

![Harit Vishwakarma (@harit_v) on Twitter photo](https://pbs.twimg.com/media/Gv20IwFX0AAIyZK.jpg)