Nicholas Malaya

@nicholasmalaya

Computational Scientist, AMD. To Exascale, and beyond!

ID:1895743321

https://nicholasmalaya.github.io/

23-09-2013 01:15:15

2.7K Tweets

987 Followers

922 Following

Join us on the road to #exascale El Capitan! Our multi-part article series continues with installments 6 through 10. 🎉

computing.llnl.gov/livermore-comp…

#HPC #supercomputer #computing

LLNL distinguished member of technical staff & Spack lead Todd Gamblin is one of the HPCwire #PeopletoWatch 😎

llnl.gov/article/51011/…

#HPC #computing #nationallab #opensource

Why America Must Invest in DOE Labs To Win the #AI Race Against China

realcleardefense.com/articles/2024/…

#HPC U.S. Department of Energy DOE Office of Science

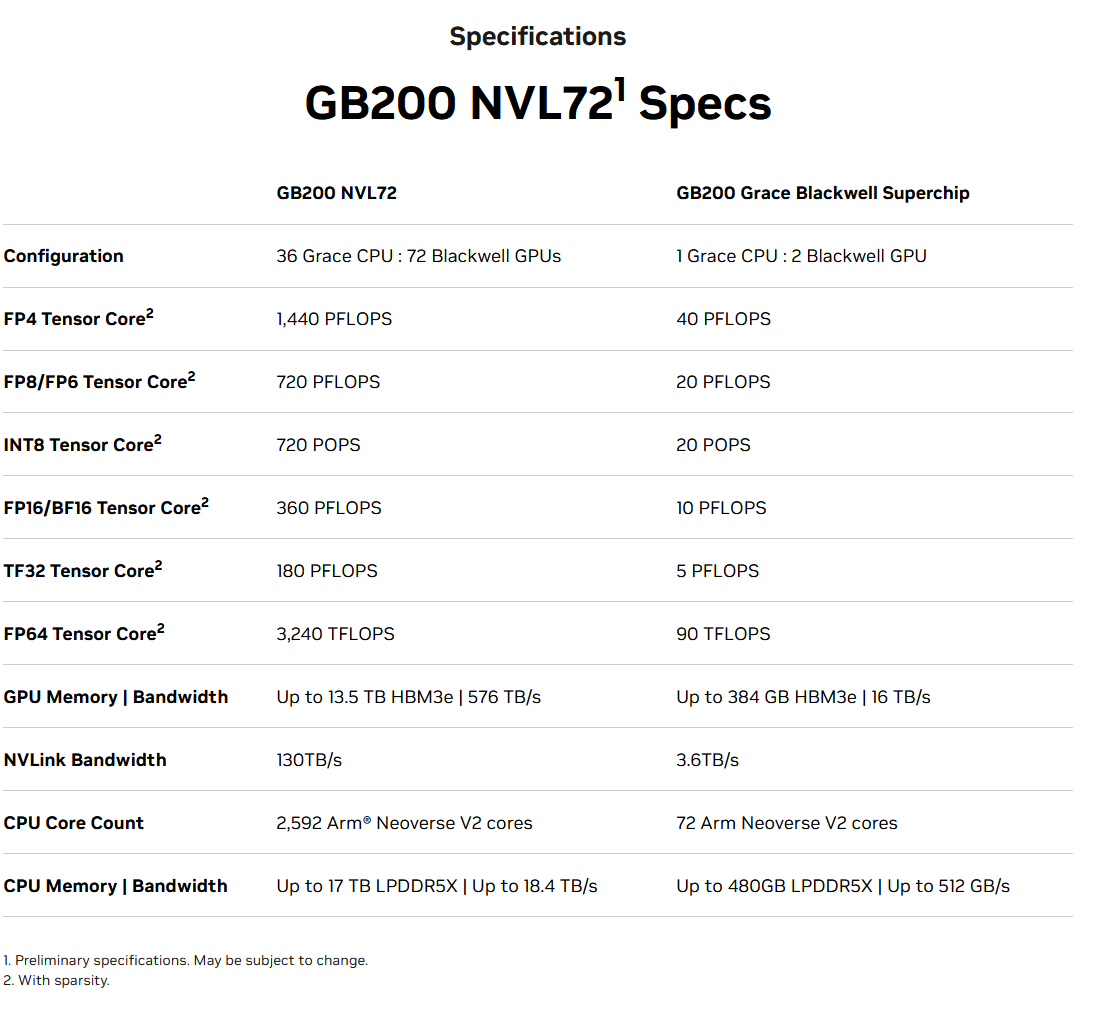

name cannot be blank 🐀 HPC Guru (Taking a break) NVIDIA Nvidia as 100% of inference is just not true.

MI250X, MI300, CPUs by Intel/AMD, and TPUs from Google and others.