Nick Comly US

@ncomly_nvidia

ID: 1537914501818814464

17-06-2022 21:46:17

3 Tweets

6 Followers

44 Following

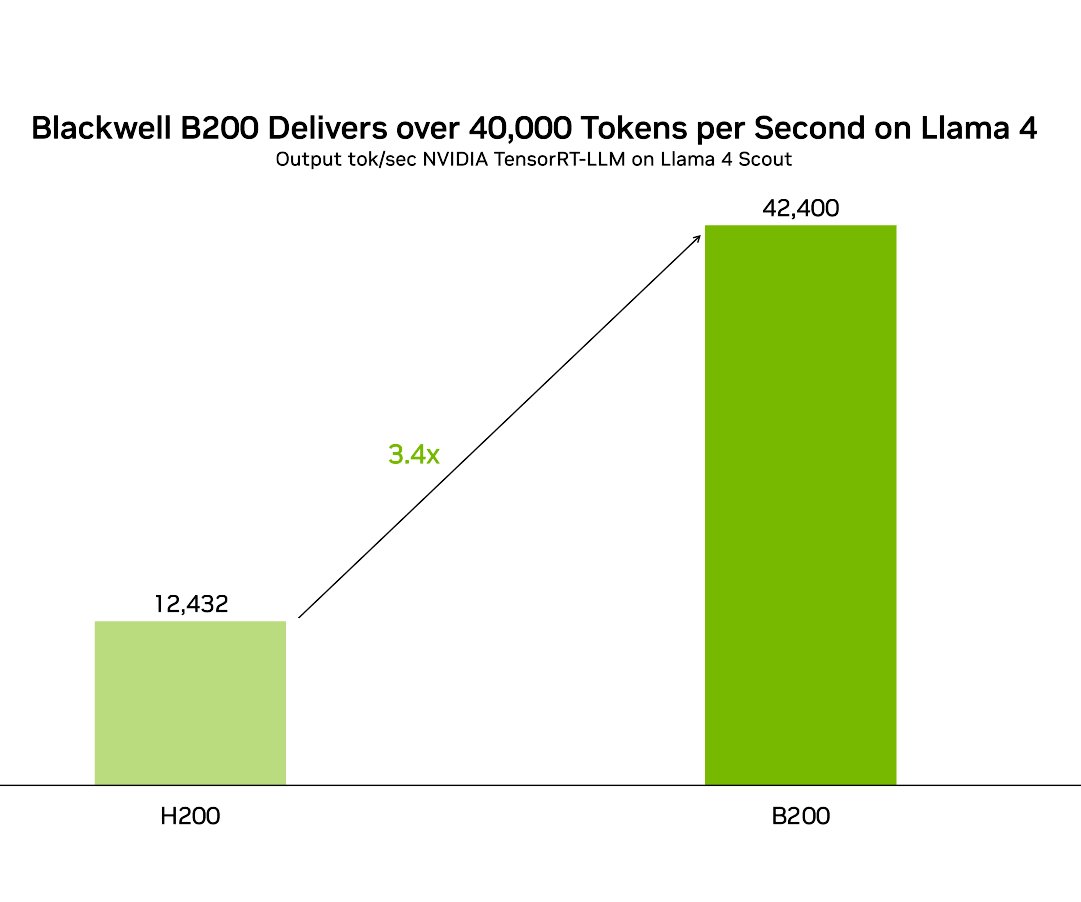

👀 Accelerate performance of AI at Meta Llama 4 Maverick and Llama 4 Scout using our optimizations in #opensource TensorRT-LLM.⚡ ✅ NVIDIA Blackwell B200 delivers over 42,000 tokens per second on Llama 4 Scout and over 32,000 tokens per second on Llama 4 Maverick. ✅ 3.4X more

🎉 A new generation of the AI at Meta Llama models is here with Llama 4 Scout and Llama 4 Maverick.🦙 ⚡ Accelerated with TensorRT-LLM, you can achieve over 40K output tokens per second on NVIDIA Blackwell B200 GPUs. Read the tech blog to learn more ➡️ developer.nvidia.com/blog/nvidia-ac…

🔢 ✨ Bring your data and try out the new Llama 4 Maverick and Scout multimodal, multilingual MoE models from AI at Meta. 🎉 Available now in the free multimodal playground for Llama 4, using our NVIDIA NIM demo environment on the API catalog. ➡️ build.nvidia.com/meta

vLLM🤝🤗! You can now deploy any Hugging Face language model with vLLM's speed. This integration makes it possible to use one consistent implementation of the model in HF for both training and inference. 🧵 blog.vllm.ai/2025/04/11/tra…

We’ve seen a lot of interest in B200s since our launch. Our lead DevRel, Philip Kiely, wrote a blog post explaining some of their performance benefits and the components needed to build an inference platform on top of B200 GPUs. More details in 🧵

Thank you NVIDIA AI Developer, Nebius, and DataCrunch_io for providing the H100 and H200 development machines. Your support contributed greatly to how quickly we delivered SGLang's optimizations!